OpenMind is building a universal operating system for robots, enabling them not only to perceive and act but also to collaborate safely and at scale in any environment through decentralized cooperation.

Why We Need an Open Era for Robotics

Over the next 5 to 8 years, the number of robots on Earth is expected to exceed 1 billion, marking a shift from "single-machine demonstrations" to a "social division of labor." Robots will no longer be just mechanical arms on assembly lines; they will be "colleagues, teachers, and partners" able to perceive, understand, make decisions, and collaborate with humans.

In recent years, robotic hardware, the "muscle" of these machines, has advanced rapidly: hands are more dexterous, gaits steadier, and sensors richer. The real bottleneck, however, lies not in metal and motors but in giving robots the ability to share and collaborate:

Software from different manufacturers is incompatible, preventing robots from sharing skills and intelligence;

Decision-making logic is locked in closed systems, so outsiders cannot verify or optimize it;

Centralized control architectures limit the speed of innovation and increase trust costs.

This fragmentation makes it difficult for the robotics industry to turn advances in AI models into replicable productivity. There are countless single-machine demos, but little cross-device migration, verifiable decision-making, or standardized collaboration, and this makes scaling hard. This "last mile" is what OpenMind aims to solve. Our goal is not to build a robot that dances better but to provide a unified software foundation and collaboration standards for the vast array of heterogeneous robots worldwide:

Enabling robots to understand context and learn from each other;

Allowing developers to quickly build applications on an open-source, modular architecture;

Ensuring safe collaboration and settlement between humans and machines under decentralized rules.

In short, OpenMind is building a universal operating system for robots, enabling them not only to perceive and act but also to collaborate safely and at scale in any environment through decentralized cooperation.

Who is Betting on This Path: $20M Funding and a Global Team

OpenMind has raised $20 million to date across its Seed and Series A rounds. Pantera Capital led the rounds, joined by leading global technology and capital players:

Western Technology and Capital Ecosystem: Ribbit, Coinbase Ventures, DCG, Lightspeed Faction, Anagram, Pi Network Ventures, Topology, Primitive Ventures, Amber Group, and others have long been deeply involved in crypto and AI infrastructure and are betting on the underlying paradigm of an "agent economy and machine internet";

Eastern Industrial Forces: Sequoia China and others are deeply engaged in the robotics supply chain and manufacturing systems. They understand the difficulties and barriers of "building a machine and delivering it at scale."

OpenMind also maintains close contact with traditional capital market participants such as KraneShares. Together they are exploring ways to bring the long-term value of "robots + agents" into structured financial products, bridging crypto and equity markets. In June 2025, KraneShares launched the Global Humanoid and Embodied Intelligence Index ETF (KOID). To ring the NASDAQ opening bell, it chose the humanoid robot "Iris," co-customized by OpenMind and RoboStore, making this the first time in exchange history that a humanoid robot performed the ceremony.

As Pantera Capital partner Nihal Maunder stated:

"If we want intelligent machines to operate in open environments, we need an open intelligent network. What OpenMind is doing for robots is akin to what Linux did for software and Ethereum did for blockchain."

Team and Advisors: From Lab to Production Line

OpenMind's founder, Jan Liphardt, is an associate professor at Stanford University and a former professor at Berkeley. He has done extensive research in data and distributed systems and has deep experience in both academia and engineering. He advocates open-source reuse, replacing black boxes with auditable and traceable mechanisms, and integrating AI, robotics, and cryptography through interdisciplinary approaches.

OpenMind's core team comes from institutions such as OKX Ventures, the Oxford Robotics Institute, Palantir, Databricks, and Perplexity. Its expertise covers robotic control, perception and navigation, multimodal and LLM orchestration, distributed systems, and on-chain protocols. A group of advisors from academia and industry helps ensure the robots' "safety, compliance, and reliability." They include Stanford Robotics head Steve Cousins, Bill Roscoe of the Oxford Blockchain Center, and Imperial College AI safety professor Alessio Lomuscio.

OpenMind's Solution: Two-Tier Architecture, One Shared Order

OpenMind has built a reusable infrastructure that allows robots to collaborate and share information across devices, manufacturers, and even national borders:

Device Side: OM1, an AI-native operating system for physical robots, covers the full pipeline from perception to execution, enabling different types of machines to understand their environment and complete tasks;

Network Side: FABRIC, a decentralized collaboration network, provides identity, task allocation, and communication mechanisms, so that robots can recognize each other, allocate tasks, and share state during collaboration.

This combination of "operating system + network layer" allows robots not only to act independently but also to cooperate, align processes, and complete complex tasks together within a unified collaboration network.

OM1: AI-Native Operating System for the Physical World

Just as smartphones need iOS or Android to run applications, robots need an operating system to run AI models, process sensor data, reason, make decisions, and execute actions.

OM1 was built for this purpose. It is an AI-native operating system for real-world robots that enables them to perceive, understand, plan, and complete tasks in various environments. Unlike traditional closed robotic control systems, OM1 is open-source, modular, and hardware-agnostic. It runs on many form factors, including humanoids, quadrupeds, wheeled robots, and robotic arms.

Four Core Steps: From Perception to Execution

OM1 breaks down robotic intelligence into four general steps: Perception → Memory → Planning → Action. This process is fully modularized in OM1 and connected through a unified data language, enabling the construction of composable, replaceable, and verifiable intelligent capabilities.
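The Perception → Memory → Planning → Action loop can be pictured as a chain of swappable stages that exchange plain-language strings. The following minimal Python sketch is not OM1's code or API. The `Pipeline` and `Memory` classes are hypothetical, as are the toy `caption`, `rule_planner`, and `print_actuator` stages. They are chosen only to show why a shared data format makes each step replaceable.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stage signatures: stages exchange plain-language strings,
# standing in for the "unified data language" described above.
Perceive = Callable[[dict], List[str]]
Plan = Callable[[List[str]], str]
Act = Callable[[str], str]


@dataclass
class Memory:
    """Keeps the most recent observations so planning sees short-term context."""
    capacity: int = 5
    items: List[str] = field(default_factory=list)

    def remember(self, observations: List[str]) -> List[str]:
        self.items.extend(observations)
        self.items = self.items[-self.capacity:]  # drop the oldest entries
        return list(self.items)


@dataclass
class Pipeline:
    perceive: Perceive
    memory: Memory
    plan: Plan
    act: Act

    def step(self, raw_sensors: dict) -> str:
        observations = self.perceive(raw_sensors)     # Perception
        context = self.memory.remember(observations)  # Memory
        decision = self.plan(context)                 # Planning
        return self.act(decision)                     # Action


# Toy stand-ins; any one can be swapped without touching the others.
def caption(sensors: dict) -> List[str]:
    return [f"You see {obj}" for obj in sensors.get("camera", [])]


def rule_planner(context: List[str]) -> str:
    return "wave back" if any("waving" in line for line in context) else "stay idle"


def print_actuator(command: str) -> str:
    return f"executing: {command}"


robot = Pipeline(caption, Memory(capacity=3), rule_planner, print_actuator)
print(robot.step({"camera": ["a person waving"]}))  # executing: wave back
```

Each stage depends only on the string interface. That is why a real captioning model or LLM planner could replace one of the toy functions without changes to the other stages.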

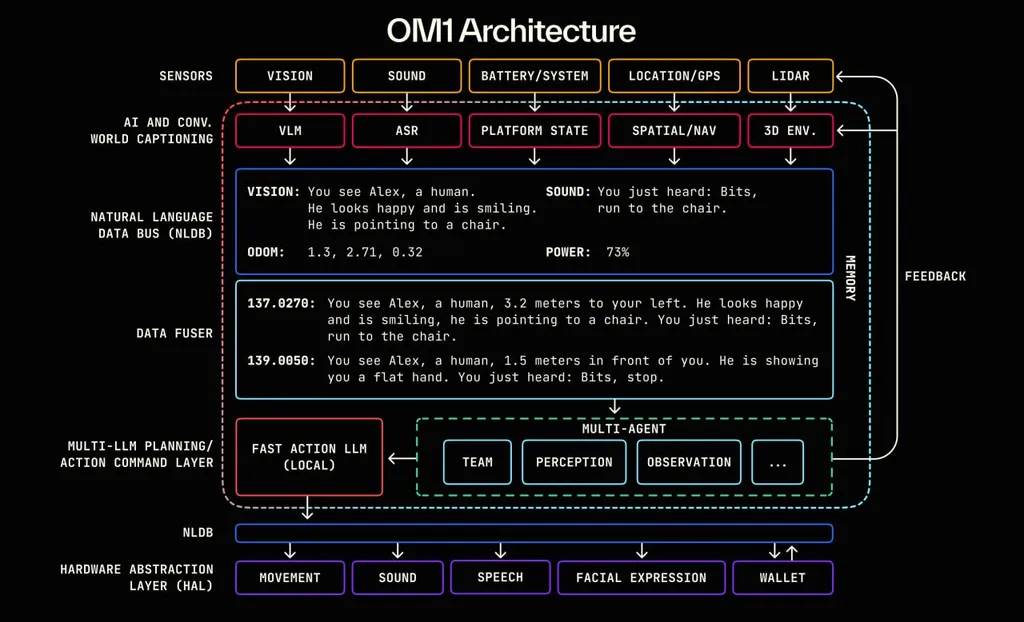

OM1 Architecture

Specifically, the OM1 pipeline consists of seven layers:

Sensor Layer: Collects information through cameras, LIDAR, microphones, battery status, GPS, and other multimodal inputs.

AI + World Captioning Layer: Translates information into natural language descriptions (e.g., "You see a person waving") using multimodal models.

Natural Language Data Bus: Transmits all perceptions as timestamped language fragments between different modules.

Data Fuser: Combines multiple input sources into a complete context (prompt) for decision-making.

Multi-AI Planning/Decision Layer: Multiple LLMs read the context and generate action plans based on on-chain rules.

NLDB Downlink: Passes decision results to the hardware execution system through a language intermediary layer.

Hardware Abstraction Layer: Converts language instructions into low-level control commands to drive hardware actions (movement, voice broadcasting, transactions, etc.).
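The data bus and fuser layers can be made concrete with a hedged sketch in three parts:

- Perception modules publish timestamped text fragments.
- A fuser merges recent fragments into a single prompt.
- A lookup table plays the role of the hardware abstraction layer.

The names `LanguageBus`, `Fragment`, `fuse`, and `ACTION_TABLE` are illustrative assumptions, not OM1 interfaces. So are the `linear_x`/`angular_z` command fields.

```python
import time
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Fragment:
    """One timestamped perception in natural language (hypothetical format)."""
    source: str       # e.g. "camera", "lidar", "battery"
    text: str
    timestamp: float  # seconds


class LanguageBus:
    """Minimal in-process stand-in for a natural language data bus."""

    def __init__(self) -> None:
        self._fragments: List[Fragment] = []

    def publish(self, source: str, text: str, timestamp: Optional[float] = None) -> None:
        ts = time.time() if timestamp is None else timestamp
        self._fragments.append(Fragment(source, text, ts))

    def since(self, cutoff: float) -> List[Fragment]:
        return [f for f in self._fragments if f.timestamp >= cutoff]


def fuse(fragments: List[Fragment], task: str) -> str:
    """Merge fragments from all sources, oldest first, into one planning prompt."""
    lines = [f"[{f.timestamp:.1f}] {f.source}: {f.text}"
             for f in sorted(fragments, key=lambda f: f.timestamp)]
    return "\n".join(["Observations:", *lines, f"Task: {task}"])


# Hypothetical hardware abstraction: map a language action to a low-level
# velocity command. A real HAL would drive motors or speakers instead.
ACTION_TABLE: Dict[str, Dict[str, float]] = {
    "move forward": {"linear_x": 0.3, "angular_z": 0.0},
    "turn left": {"linear_x": 0.0, "angular_z": 0.5},
    "stop": {"linear_x": 0.0, "angular_z": 0.0},
}


def to_command(action: str) -> Dict[str, float]:
    # Unknown instructions fall back to a safe stop; return a copy.
    return dict(ACTION_TABLE.get(action.strip().lower(), ACTION_TABLE["stop"]))


bus = LanguageBus()
bus.publish("lidar", "Obstacle 1.2 m ahead", timestamp=10.0)
bus.publish("camera", "You see a person waving", timestamp=9.5)
print(fuse(bus.since(9.0), "greet visitors safely"))
print(to_command("Turn left"))  # {'linear_x': 0.0, 'angular_z': 0.5}
```

Falling back to "stop" for unrecognized instructions is one conservative design choice. It keeps a malformed plan from turning into an unexpected motion.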

Quick Start, Broad Implementation

To help turn an idea quickly into tasks a robot can execute, OM1 includes these built-in tools:

Rapid Skill Addition: Using natural language and large models, new behaviors can be added to robots within hours rather than through months of hard coding.

Multimodal Integration: Easily combines LiDAR, vision, sound, and other perceptions, allowing developers to avoid writing complex sensor fusion logic themselves.

Preconfigured Large Model Interfaces: Built-in interfaces to language and vision models such as GPT-4o, DeepSeek, and other VLMs, with support for voice interaction.

Wide Software and Hardware Compatibility: Supports mainstream frameworks and protocols such as ROS2 and Cyclone DDS, integrating seamlessly with existing robotic middleware. Platforms such as the Unitree G1 humanoid, the Unitree Go2 quadruped, TurtleBot, and robotic arms can all connect directly.

Integration with FABRIC: OM1 natively supports identity, task coordination, and on-chain payments, allowing robots not only to complete tasks independently but also to participate in a global collaboration network.

Currently, OM1 has been implemented in several real-world scenarios:

Frenchie (Unitree Go2 quadruped robot): Completed complex site tasks at the USS Hornet Defense Technology Exhibition 2024.

Iris (Unitree G1 humanoid robot): Conducted live human-robot interaction demonstrations at the Coinbase booth at ETHDenver 2025, and is slated to enter university curricula across the U.S. through RoboStore's educational program.

FABRIC: Decentralized Human-Robot Collaboration Network

Even with a powerful brain, robots that cannot collaborate safely and reliably with one another will still be working in isolation. In practice, robots from different manufacturers often run their own systems and operate independently, which makes skill and data sharing impossible. Cross-brand and even cross-border collaboration lacks trustworthy identities and standard rules. This raises several challenges:

Identity and Location Proof: How do robots prove who they are, where they are, and what they are doing?

Skill and Data Sharing: How can robots be authorized to share data and invoke skills?

Defining Control: How do we set the frequency, scope, and conditions for data feedback when using skills?

FABRIC is designed to address these issues. It is a decentralized human-robot collaboration network created by OpenMind that provides unified infrastructure for identity, tasks, communication, and settlement among robots and intelligent systems. You can think of it as:

Like GPS, letting robots know where other robots are, whether they are nearby, and whether they are suitable for collaboration;

Like a VPN, enabling robots to connect securely without public IPs or complex network setups;

Like a task scheduler, automatically publishing and accepting tasks and recording the entire execution process.
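The GPS analogy amounts to a proximity query over robots' reported positions. FABRIC's actual discovery protocol is not described here, so this is an assumption-laden sketch. It keeps a hypothetical in-memory registry of `RobotInfo` records. It then applies the haversine formula on a spherical Earth to list the robots within a radius that advertise a requested skill, nearest first.

```python
import math
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class RobotInfo:
    """Hypothetical registry entry: identity, last reported position, skills."""
    robot_id: str
    lat: float
    lon: float
    skills: FrozenSet[str]


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres, assuming a spherical Earth (R = 6,371 km)."""
    r = 6_371_000.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearby_candidates(registry: List[RobotInfo], lat: float, lon: float,
                      skill: str, radius_m: float) -> List[str]:
    """IDs of robots within radius_m that advertise `skill`, nearest first."""
    hits = [(haversine_m(lat, lon, r.lat, r.lon), r)
            for r in registry if skill in r.skills]
    hits.sort(key=lambda h: h[0])  # sort by distance only
    return [r.robot_id for dist, r in hits if dist <= radius_m]


registry = [
    RobotInfo("go2-01", 37.4275, -122.1697, frozenset({"inspection"})),
    RobotInfo("g1-02", 37.4285, -122.1697, frozenset({"inspection", "delivery"})),
    RobotInfo("tb-03", 37.4400, -122.1697, frozenset({"inspection"})),
]
print(nearby_candidates(registry, 37.4275, -122.1697, "inspection", 500))
# ['go2-01', 'g1-02']  (tb-03 is roughly 1.4 km away)
```

A production system would also need to check that reported positions are genuine. That is the identity and location proof problem listed earlier.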

Core Application Scenarios

FABRIC is already adaptable to various practical scenarios, including but not limited to:

Remote Control and Monitoring: Safely control robots from anywhere without a dedicated network.

Robot-as-a-Service Market: Summon robots the way you hail a ride to complete tasks such as cleaning, inspection, and delivery.

Crowdsourced Mapping and Data Collection: Vehicle fleets or individual robots upload real-time road conditions, obstacles, and environmental changes to build shareable high-precision maps.

On-Demand Scanning/Surveying: Temporarily call on nearby robots to perform 3D modeling, architectural surveying, or evidence collection for insurance claims.

FABRIC makes "who is doing what, where, and what has been completed" verifiable and traceable. It also sets clear boundaries for skill invocation and task execution.
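One common way to make a record of "who did what, where" tamper-evident is a hash chain, in which each entry includes the hash of the entry before it. This sketch is a generic illustration of that technique using Python's standard `hashlib` and `json` modules. It is not FABRIC's proof format, and it omits signatures and on-chain anchoring. `TaskLog` and its field names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(body: Dict) -> str:
    """SHA-256 over canonical JSON so identical records always hash identically."""
    payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class TaskLog:
    """Append-only log in which every entry commits to the entry before it."""

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, robot_id: str, task: str, location: str,
               status: str, ts: float) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"robot": robot_id, "task": task, "where": location,
                "status": status, "ts": ts, "prev": prev}
        entry = dict(body, hash=record_hash(body))
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or record_hash(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = TaskLog()
log.append("go2-01", "inspect pump room", "37.4275,-122.1697", "started", 1000.0)
log.append("go2-01", "inspect pump room", "37.4275,-122.1697", "completed", 1300.0)
print(log.verify())  # True
log.entries[0]["status"] = "failed"  # tamper with history
print(log.verify())  # False
```

Editing any earlier entry changes its hash and breaks the link from every later entry, so `verify()` returns `False`. Publishing the latest hash somewhere public would let outsiders check the whole history.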

In the long run, FABRIC will become an App Store for machine intelligence. Skills can be licensed for global invocation, and the data generated by these invocations will feed back into models, driving the continuous evolution of the collaboration network.
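Licensing a skill for global invocation implies enforceable usage terms. The hypothetical `SkillLicense` is a sketch, not an OpenMind contract. It enforces three kinds of terms:

- Frequency: a sliding-window call quota.
- Scope: an allow-list of device models.
- Conditions on data feedback: a whitelist of telemetry fields that may flow back to the skill owner.

The skill name and device identifiers are made up for illustration.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, FrozenSet


@dataclass
class SkillLicense:
    """Hypothetical usage terms attached to a shared skill."""
    skill: str
    max_calls: int                   # frequency: calls allowed per window
    window_s: float                  # sliding-window length in seconds
    devices: FrozenSet[str]          # scope: device models allowed to invoke
    feedback_fields: FrozenSet[str]  # conditions: data allowed to flow back
    _calls: Deque[float] = field(default_factory=deque, repr=False)

    def authorize(self, device: str, now: float) -> bool:
        """Return True and record the call if it is within scope and quota."""
        if device not in self.devices:
            return False
        # Drop call timestamps that have slid out of the window.
        while self._calls and now - self._calls[0] >= self.window_s:
            self._calls.popleft()
        if len(self._calls) >= self.max_calls:
            return False
        self._calls.append(now)
        return True

    def filter_feedback(self, telemetry: Dict[str, object]) -> Dict[str, object]:
        """Keep only the telemetry fields the license allows to be shared."""
        return {k: v for k, v in telemetry.items() if k in self.feedback_fields}


lic = SkillLicense("stair_climb", max_calls=2, window_s=60.0,
                   devices=frozenset({"unitree-go2"}),
                   feedback_fields=frozenset({"success", "duration_s"}))
print(lic.authorize("unitree-go2", now=0.0))   # True
print(lic.authorize("unitree-go2", now=10.0))  # True
print(lic.authorize("unitree-go2", now=20.0))  # False: quota used up
print(lic.authorize("unitree-go2", now=61.0))  # True: the call at t=0 expired
print(lic.authorize("turtlebot", now=62.0))    # False: device out of scope
print(lic.filter_feedback({"success": True, "duration_s": 4.2, "raw_frames": []}))
# {'success': True, 'duration_s': 4.2}
```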

Web3 is Writing "Openness" into the Robotic Society

Today, the robotics industry is accelerating toward centralization: a few platforms control hardware, algorithms, and networks and keep external innovation at bay. Decentralization changes this. Regardless of who manufactures a robot or where it operates, the robot can collaborate, exchange skills, and settle payments in an open network without being tied to a single platform.

OpenMind uses on-chain infrastructure to write collaboration rules, skill access permissions, and reward distribution methods into a public, verifiable, and improvable "network order."

Verifiable Identity: Each robot and operator registers a unique on-chain identity (ERC-7777 standard), making hardware features, responsibilities, and permission levels transparent and traceable.

Public Task Allocation: Tasks are not assigned inside a closed black box; they are published, bid on, and matched under public rules. Every collaboration generates cryptographic proofs with timestamps and locations, which are stored on-chain.

Automatic Settlement and Profit Sharing: After a task is completed, payments, insurance, and deposits are released or deducted automatically, and any participant can verify the results in real time.

Free Flow of Skills: On-chain contracts can define a new skill's invocation frequency, applicable devices, and other terms, protecting intellectual property while letting skills flow freely worldwide.
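The allocation and settlement steps can be illustrated with an in-memory model. A public matching rule picks a winning bid, and an escrow holds the requester's payment and the robot's deposit until the outcome is known. Everything here is an assumption for illustration:

- The cheapest-bid-wins rule.
- The `Bid` and `Escrow` names.
- Integer balances.
- The policy of forfeiting the deposit to the requester on failure.

Insurance is left out. A real deployment would implement this logic in a smart contract rather than a Python dictionary.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Bid:
    robot_id: str
    price: int  # smallest currency unit, avoiding float rounding


def match(bids: List[Bid], budget: int) -> Optional[Bid]:
    """Assumed public rule: cheapest bid within budget wins; ties go to the earliest."""
    eligible = [b for b in bids if b.price <= budget]
    return min(eligible, key=lambda b: b.price) if eligible else None


class Escrow:
    """Holds the payment and the robot's deposit until the outcome is known."""

    def __init__(self, balances: Dict[str, int]) -> None:
        self.balances = dict(balances)
        self.balances.setdefault("escrow", 0)

    def _move(self, src: str, dst: str, amount: int) -> None:
        if self.balances.get(src, 0) < amount:
            raise ValueError(f"{src} has insufficient funds")
        self.balances[src] -= amount
        self.balances[dst] = self.balances.get(dst, 0) + amount

    def open(self, requester: str, robot: str, price: int, deposit: int) -> None:
        # Check both sides first so a failure leaves no partial transfer.
        if self.balances.get(requester, 0) < price or self.balances.get(robot, 0) < deposit:
            raise ValueError("insufficient funds to open escrow")
        self._move(requester, "escrow", price)
        self._move(robot, "escrow", deposit)

    def settle(self, requester: str, robot: str, price: int, deposit: int,
               completed: bool) -> None:
        if completed:
            self._move("escrow", robot, price + deposit)      # pay + refund deposit
        else:
            self._move("escrow", requester, price + deposit)  # refund + forfeited deposit


bids = [Bid("go2-07", 120), Bid("g1-02", 90), Bid("tb-11", 90)]
winner = match(bids, budget=100)
print(winner)  # Bid(robot_id='g1-02', price=90)
ledger = Escrow({"alice": 500, winner.robot_id: 50})
ledger.open("alice", winner.robot_id, winner.price, deposit=30)
ledger.settle("alice", winner.robot_id, winner.price, deposit=30, completed=True)
print(ledger.balances)  # {'alice': 410, 'g1-02': 140, 'escrow': 0}
```

Because the matching rule and the settlement outcome are deterministic functions of public inputs, any participant could rerun them to check the result. This is the property the on-chain version is meant to guarantee.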

This is a collaborative order that all participants can use, supervise, and improve. For Web3 users, it means the robot economy is born with anti-monopoly, composable, and verifiable DNA. This is not only a market opportunity but also a chance to engrave "openness" into the foundational layer of robotic society.

Letting Embodied Intelligence Step Out of Isolation

Whether patrolling hospital wards, learning new skills in schools, or completing inspections and modeling in urban neighborhoods, robots are gradually stepping out of "showroom demonstrations" to become stable components of human daily labor. They operate 24/7, follow rules, have memory and skills, and can naturally collaborate with humans and other machines.

Scaling these scenarios requires not only smarter machines but also a foundational order that makes them trustworthy, interoperable, and able to collaborate with each other. With OM1 and FABRIC, OpenMind has already laid the first "roadbed" on this path. The former enables robots to truly understand the world and act autonomously, while the latter lets these capabilities circulate across a global network. The next step is to extend this path to more cities and networks, making machines reliable long-term partners in society.

OpenMind's roadmap is clear:

Short-term: Complete the core functional prototype of OM1 and the MVP of FABRIC, launching on-chain identity and basic collaboration capabilities;

Mid-term: Implement OM1 and FABRIC in education, homes, and enterprises, connecting early nodes and gathering developer communities;

Long-term: Establish OM1 and FABRIC as global standards, allowing any machine to connect to this open robotic collaboration network like connecting to the internet, forming a sustainable global machine economy.

In the Web2 era, robots were often locked into a single manufacturer's closed system, and their functions and data could not flow across platforms. In the world OpenMind is building, they are equal nodes in an open network, able to join, learn, collaborate, and settle freely. Together with humans, they form a trustworthy, interconnected global robotic society. OpenMind provides the infrastructure that makes this transformation scalable.

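Disclaimer: This article represents only the author's personal views and does not represent the position or views of this platform. It is provided for information sharing only and does not constitute investment advice to anyone. Any dispute between users and the author has nothing to do with this platform. If any article or image published on this page involves infringement, please send the relevant proof of rights and proof of identity by email to support@aicoin.com, and the platform's staff will review it.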