When a system no longer "waits for you to finish speaking" but can follow the rhythm of a conversation, hear emotion, and handle interruptions as they happen, the quality of online encounters quietly changes. Cornell University's Metya AI Lab has released a new large model for social interaction that combines conversational "smoothness" with governance "discretion" in the same dialogue. On the voice side, it is fully duplex: it listens and speaks at the same time, handles natural interruptions, and stops its own speech when appropriate. On the content side, it provides clear, traceable evidence, answering not only "is this allowed" but also "why" and "how to do it better" at the same moment. The launch event avoided jargon and instead used demonstrations close to real scenarios: on entering a cross-language interest room, the system offers lightweight opening topics and a natural speaking order; when someone posts a suspicious short link, the sidebar immediately shows the relevant clauses and suggested actions without interrupting the ongoing conversation; and when there is a lull, it uses a "relay question" to prompt someone who did not speak in the previous round, warming up the atmosphere.

The head of the Metya AI Lab stated: "Socializing is not about answering questions, and dating is even less so. We want to get two things right: conversations that flow smoothly and governance that is clear. Smoothness means a stable rhythm that can be interrupted and picked back up seamlessly; clarity means every reminder has a basis and can be verified. Putting both back into the same conversation makes online encounters truly warm and orderly."

1. Full Duplex Communication and Explainable Judgments: Putting Rhythm and Basis Back into the Same Conversation

Traditional human-computer dialogue often relies on "turn control" and silence thresholds: the user speaks, and the system responds only afterward. Once several people are present or emotions run high, this "walkie-talkie" interaction feels stiff. Metya's social model uses full duplex as its interaction foundation, so the system can listen and speak at the same time without breaking the flow of information. When the other party reaches a key point and pauses naturally, it can offer a brief acknowledgment or a question; when it detects that the other party clearly wants to speak, it stops its own speech and yields the floor. This is not talking over others or mechanically inserting pleasantries, but a continuous alignment of rhythm, a kind of "rhythmic collaboration."

In multi-person voice scenarios, the system takes on the role of a "restrained host." Restraint means it does not overshadow others; it uses light timing control, addressing people by name, summarizing, and rescuing awkward moments to help everyone see and be seen at a comfortable rhythm. If a lull exceeds a threshold, the system poses a "relay question"; if the discussion strays off-topic, it uses a summarizing statement to bring the conversation back; when emotions run high, it "reduces noise" by speaking more quietly and slowly to restore balance. Meanwhile, cross-language simultaneous interpretation runs in the background: participants speak in their native languages while the system aligns semantics in real time, avoiding extra subtitles and delays, so that language is no longer the first barrier.
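
The lull rule described above can be sketched roughly as follows. This is a minimal illustration, not Metya's implementation: the `RoomState` fields, the `pick_relay_target` function, and the 20-second threshold (borrowed from the walkthrough later in this article) are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Assumed threshold; the article only says "a threshold" here.
LULL_THRESHOLD_S = 20.0


@dataclass
class RoomState:
    """Hypothetical snapshot of a voice room, for illustration only."""
    participants: list[str]
    last_speech_at: float = 0.0                     # timestamp of the last speech, in seconds
    spoke_last_round: set[str] = field(default_factory=set)


def pick_relay_target(state: RoomState, now: float) -> str | None:
    """Return who should receive a relay question, or None if there is no lull."""
    if now - state.last_speech_at < LULL_THRESHOLD_S:
        return None  # conversation is still flowing; the host stays quiet
    # Prefer participants who did not speak in the previous round.
    quiet = [p for p in state.participants if p not in state.spoke_last_round]
    return quiet[0] if quiet else None
```

A real host would presumably also weigh how often each person has already been prompted; the sketch only captures the "lull, then nudge a non-speaker" idea.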

Beyond "smooth," there must be "clear." Metya upgrades content governance from a black-box "pass/fail" determination to an explainable evidence chain. When boundary-crossing expressions, risky links, or attempts to lure users off the platform appear, the system does not abruptly interrupt the conversation. Instead, the sidebar shows the original sentence, the relevant clauses, the reasons for the judgment, the confidence level, and suggested actions. If the issue is merely a slip of the tongue or a joke in context, it suggests a verbal reminder first; if it recurs, it escalates to muting or removal. This "tiered handling" explains both "why" and "what to do next" without disrupting the atmosphere. Operations staff and partners can click directly to verify evidence segments; users can also view the relevant explanations and file appeals when needed. It is less a "restrictive chain" than a "transparent chain": every action can be traced back to its basis, and every basis can be verified.
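
To make "tiered handling" concrete, here is a small sketch of an evidence record plus an escalation ladder. The field names mirror the sidebar items listed above, but the `Evidence` and `TieredModerator` classes, the exact ladder order (reminder, then mute, then removal), and the per-user hit counter are assumptions for illustration, not the lab's published design.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """One sidebar entry: what was said, which rule it hit, and what to do."""
    sentence: str
    clause: str
    reason: str
    confidence: float
    suggested_action: str


class TieredModerator:
    # Assumed ordering; the article says "verbal reminder first",
    # then "muting or removal" on recurrence.
    LADDER = ("verbal_reminder", "mute", "remove")

    def __init__(self) -> None:
        self._hits = Counter()  # number of flagged items per user

    def handle(self, user: str, sentence: str, clause: str,
               reason: str, confidence: float) -> Evidence:
        """Record a hit for `user` and return the evidence with the next action."""
        self._hits[user] += 1
        # Climb one rung per repeat, staying on the last rung once reached.
        rung = min(self._hits[user], len(self.LADDER)) - 1
        return Evidence(sentence, clause, reason, confidence, self.LADDER[rung])
```

Keeping the action inside the same record as the sentence and clause is what lets a reviewer trace any action back to its basis.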

On the engineering side, stability comes from several components working together. Speaker separation, echo suppression, and environmental noise reduction form the acoustic front end, keeping interruption detection accurate and stable. A "rhythm state machine" schedules between speaking turns, preventing "listening while speaking" from turning into "fighting for the microphone." Hosting styles can be configured (restrained, active, professional) to suit different room types and atmospheres. On the content side, community norms are broken down into an executable checklist of "items, counterexamples, and boundary explanations," reducing the randomness of enforcement "by feel." All of this serves one goal: the system keeps the complexity to itself and leaves the dignity to the users.
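
The article does not describe the internals of the "rhythm state machine," so the following is only a toy sketch of the idea under stated assumptions: two states, three events, and a transition table in which a user barge-in always sends the system back to listening. All names (`State`, `Event`, `TRANSITIONS`, `step`) are hypothetical.

```python
from enum import Enum, auto


class State(Enum):
    LISTENING = auto()
    SPEAKING = auto()


class Event(Enum):
    USER_PAUSE = auto()      # the user reaches a natural pause
    USER_BARGE_IN = auto()   # the user starts talking over the system
    SYSTEM_DONE = auto()     # the system finishes its utterance


# Assumed transition table: any pair not listed leaves the state unchanged.
TRANSITIONS = {
    (State.LISTENING, Event.USER_PAUSE): State.SPEAKING,
    (State.SPEAKING, Event.USER_BARGE_IN): State.LISTENING,  # yield the floor
    (State.SPEAKING, Event.SYSTEM_DONE): State.LISTENING,
}


def step(state: State, event: Event) -> State:
    """Advance the machine by one event."""
    return TRANSITIONS.get((state, event), state)
```

Because a barge-in while speaking always maps to listening, the system never competes for the microphone in this sketch; a production design would presumably add more states and timing conditions.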

2. Full Chain Connection with Metya: From "Being Seen" to "Being Able to Speak" to "Good Review"

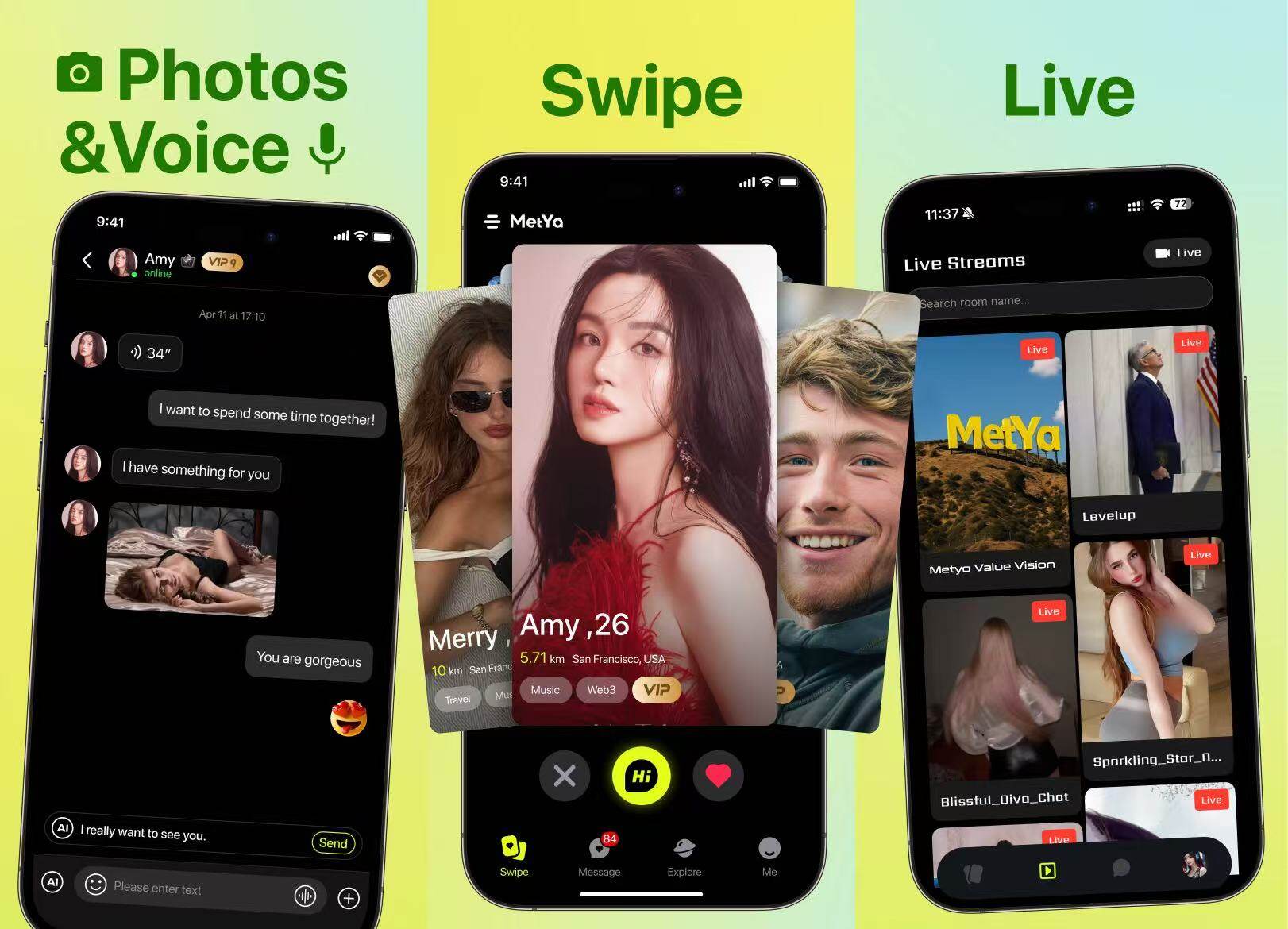

This is not a demo-room feature but a real journey built into Metya's dating product. From first seeing each other, to speaking for the first time, to deciding whether to meet again, the system provides appropriate support at key points.

In the Being Seen phase, the system combines profile data, recent activity, and topic preferences to generate a "compatibility profile," surfacing to each user the people they are likely to find easy to chat with. For matches who speak different languages, cross-language simultaneous interpretation is enabled automatically before the conversation, so language is not a barrier from the start. For pairings prone to awkward silences, candidate cards preset three lightweight topics, such as "the last unexpected joy," "a movie you watch repeatedly," and "where to go for half a day on the weekend," providing a warm and approachable opening. What many users gain here is not a set of "techniques" but the courage to "speak up."

In the Being Able to Speak phase, whether in a 1v1 or a party room, "smart opening" immediately generates a speaking order and opening topics. The system plays the role of a "restrained host," stabilizing the rhythm in full duplex: when someone wants to interrupt, they do not need to wait for a prompt, because the system naturally yields; when the topic drifts, the system gently pulls the conversation back with a summarizing statement built around its keywords; when participation needs encouraging, it directs "relay questions" to those who did not speak in the previous round, preventing a few individuals from monopolizing the microphone. More importantly, when boundary-crossing expressions or external links appear, the sidebar immediately shows the basis for the hit and suggested actions without interrupting the ongoing conversation. The host receives low-interference prompts (e.g., "ask the other party to summarize key points," "respond from a different angle," "give a specific example"), which are not broadcast to everyone but quietly help the host keep the conversation flowing and polite.

Good Review is the precondition for turning a conversation into a better encounter. As the conversation nears its end, the system automatically generates a summary: effective rounds, average speaking time, interruption delays, aggregated discussion topics, points of humor and disagreement, intercepted risky content (with original sentences and evidence segments), and segments such as jokes or sensitive remarks flagged as "suggested for manual review." The summary users see is not a "score" but a "review" for reference: where the two sides resonated, and where their views differed without conflicting. For the next step, whether continuing by voice, switching to text, or scheduling an offline coffee, the system provides two sets of polite phrases, one for moving forward naturally and one for closing with dignity. Operations staff and creators can also export the summary with one click, so that the next day's review rests on evidence rather than on a recollection of the atmosphere.
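
A few of the summary figures above can be derived from a simple list of speaking turns. The sketch below is illustrative only: the `Turn` record, the `summarize` function, and the rule that a turn counts as "effective" when it lasts at least one second are assumptions, since the article does not define how these metrics are computed.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Turn:
    """Hypothetical record of one speaking turn (times in seconds)."""
    speaker: str
    start: float
    end: float


def summarize(turns, participants, min_turn_s=1.0):
    """Compute effective rounds, average speaking time, and speaking coverage."""
    # Assumption: very short turns (coughs, "mm-hm") are not effective rounds.
    effective = [t for t in turns if t.end - t.start >= min_turn_s]
    durations = [t.end - t.start for t in effective]
    speakers = {t.speaker for t in effective} & set(participants)
    return {
        "effective_rounds": len(effective),
        "avg_speaking_time_s": mean(durations) if durations else 0.0,
        # Share of participants who had at least one effective turn.
        "speaking_coverage": len(speakers) / len(participants) if participants else 0.0,
    }
```

For example, `summarize([Turn("a", 0, 4), Turn("b", 4, 4.5), Turn("a", 5, 7)], ["a", "b"])` treats the half-second turn from "b" as non-effective, so coverage reflects that only one of the two participants really spoke.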

Two scenario walkthroughs make the usage concrete. The first is a cross-language speed-matching room. Ice-breaking is completed within 60 seconds; in the third minute, a member posts a suspected redirect link, and the sidebar shows the "basis for the hit + suggested actions," with the host giving a verbal reminder first and escalating only if it recurs, without breaking the rhythm; after a 20-second lull, the system poses a "relay question" to a participant who was silent in the previous round, quickly lifting the atmosphere. The second is a first 1v1 call. The system prompts in-ear, "please ask the other party to be more specific," helping to ground vague expressions in concrete scenarios; when the other party touches on privacy boundaries, the interface simultaneously shows "boundary reminders + polite refusal phrases," making the boundary clear without being rude. Before the call ends, both parties receive conversation summaries and "next step suggestions," with the decision always in the users' hands.

The head of the Metya AI Lab emphasized during discussions about product philosophy: "We do not pursue exaggerated anthropomorphism, nor do we use flashy effects to obscure the reality of conversation. Taking a half-step back with technology removes obstacles like waiting, misunderstandings, and language barriers, allowing attention to return to 'what to say,' rather than 'how to talk to the system.'"

3. Data, Boundaries, and Openness: Making Trust Verifiable and Progress Measurable

Brand promises must withstand verification. The lab and Metya will publicly disclose four groups of core indicators using the same definitions, the same user population, and the same time frame: Governance (violation exposure rate, false positives/false negatives), Interaction (interruption response delay, average speaking time, speaking coverage), Retention (room stay duration, next-day/7-day return), and Conversion (the path from browsing to following/inviting). Each conclusion can be traced back to samples and evidence, and quarterly reports will include the sampling methods and confidence intervals, to avoid creating an illusion of progress by "changing indicators."
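
The article does not say which interval method the quarterly reports will use. As one common option for a rate estimated from a sample (for instance, a false-positive rate from audited decisions), the Wilson score interval can be computed as in this sketch; the function name and the 95% default are choices made for this example.

```python
import math


def wilson_interval(hits: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion hits/n (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("sample size must be positive")
    p = hits / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half
```

Calling `wilson_interval(12, 400)` gives a range around the observed 3% rate. Reporting the range alongside the point estimate makes it harder to present sampling noise as quarter-over-quarter progress.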

Boundaries are also clearly defined. First, in extremely noisy settings or when several people speak at once, mishearing or mis-segmented sentences may still occur; the team has placed speaker separation, noise reduction, and echo suppression on a continuous iteration plan and has built an "abnormal conversation playback" mechanism on the platform for rapid troubleshooting. Second, cultural norms for humor, sarcasm, and regional references vary; the lab and partners will co-build a "sample library and gray-area rules" to clarify "what can be said/what cannot be said," rather than taking a "one-size-fits-all" approach. Third, enterprises can opt out of training and choose localized storage; all handling of sensitive content will be logged, auditable, and open to appeal, with third-party audits introduced when necessary so that governance has both force and limits.

In terms of external openness, the lab will gradually provide capability interfaces: content checks, which return whether a violation occurred along with the complete evidence chain; voice hosting, which provides full duplex rhythm control and style configuration; a simultaneous interpretation stream, which supports parallel multi-language conversations; and record export, which provides conversation summaries and evidence segments (CSV/JSON). These interfaces follow a "pluggable" design and can be integrated individually or deeply integrated on the same platform. For verticals such as education, online events, and language exchange, the lab will provide "scenario templates" and "polite phrase libraries" to shorten the time from PoC to launch. Openness is not a declaration; it means turning capabilities into standardized building blocks so that more real scenarios can reuse "smoothness and clarity."
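
No interface specification has been published yet, so the following only illustrates what a CSV/JSON record export could look like using Python's standard library. The column names in `FIELDS` and the `to_json`/`to_csv` helpers are hypothetical and do not describe the lab's actual API.

```python
import csv
import io
import json

# Assumed column set for one evidence segment; not an official schema.
FIELDS = ["sentence", "clause", "reason", "confidence", "suggested_action"]


def to_json(records: list[dict]) -> str:
    """Serialize evidence records to JSON, keeping non-ASCII text readable."""
    return json.dumps(records, ensure_ascii=False, indent=2)


def to_csv(records: list[dict]) -> str:
    """Serialize evidence records to CSV with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()
```

`ensure_ascii=False` matters for cross-language rooms, where the original sentences may be in any script and should stay legible in the exported file.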

The R&D roadmap is also clear: the current phase focuses on the stability and experience refinement of 1v1, interest matching, and multi-person party rooms; the subsequent phase will open interfaces and hosting style configurations, launch "boundary/polite refusal phrase libraries," and expand multi-language coverage; further down the line, quarterly evaluation reports will be released, accompanied by sample explanations and counterexample library updates, along with announcements of typical success cases and "lessons learned" lists. A real product does not shy away from problems; writing out the problems is often the beginning of solving them.

Ultimately, this release is about one thing: putting the "rhythm of conversation" and the "basis for handling" back into the same dialogue and making sure it lands in Metya's real dating scenarios from day one. If users wait one second less, misunderstand each other one time fewer, and receive one fewer awkward reminder, relationships move a step forward. The head of the Metya AI Lab emphasized in conclusion: "We would rather write one more line of basis than create one more misunderstanding. When judgments are no longer black boxes, conversations no longer queue, and cross-language barriers no longer exist, a more relaxed encounter will happen more often."

About Cornell University's Metya AI Lab

The Metya AI Lab is part of Cornell University's related research system, focusing on the foundational research and productization of "AI + social/dating," continuously investing in full duplex voice, explainable content judgment, cross-language understanding, and multimodal interaction. The team advocates for an open, restrained, and verifiable technical paradigm, dedicated to serving the establishment and maintenance of real relationships with practical engineering capabilities.
