# Outlook

1. Macroeconomic Summary and Future Predictions

U.S. equities rose last week, with major indices reaching new highs, while the U.S. dollar index fluctuated but remained strong overall. The Federal Reserve is expected to begin cutting interest rates later this year to ease downward pressure on the economy, but the policy path will still depend on inflation and employment data. The U.S. macroeconomic environment shows slowing growth but stable employment, and inflationary pressure remains significant because of tariffs. Expectations of Federal Reserve rate cuts are gradually becoming consensus, but the risk of trade frictions still requires close attention.

2. Market Changes and Warnings in the Crypto Industry

Bitcoin continued to consolidate at high levels last week, while Ethereum showed stronger capital momentum than Bitcoin, with capital inflows and market performance improving rapidly. ETFs recorded net inflows for six consecutive days, suggesting that capital may be rotating from Bitcoin to Ethereum. The crypto market remained characterized by active capital and deepening institutional influence, but high leverage and rising volatility pose short-term risks. Investors should watch for changes in Federal Reserve policy and the uncertain impact of the macro environment.

3. Industry and Sector Hotspots

Twyne is a non-custodial credit authorization protocol aimed at maximizing capital efficiency in the lending market. Twyne is directly integrated into established lending markets like Euler, allowing users to deposit IOU tokens (such as euler_USDC) that represent their underlying market positions. CodexField is built on BNB Greenfield and BNB Smart Chain, aiming to achieve on-chain ownership confirmation, authorized use, transaction circulation, and revenue mapping of content.

## Market Hotspot Sectors and Potential Projects of the Week

1. Overview of Potential Projects

1.1. Analysis of Twyne, led by Euler—Releasing Idle Lending Capacity and Enhancing Capital Efficiency through a Credit Authorization Protocol

Introduction

Twyne is a non-custodial credit authorization protocol designed to maximize capital efficiency in the lending market.

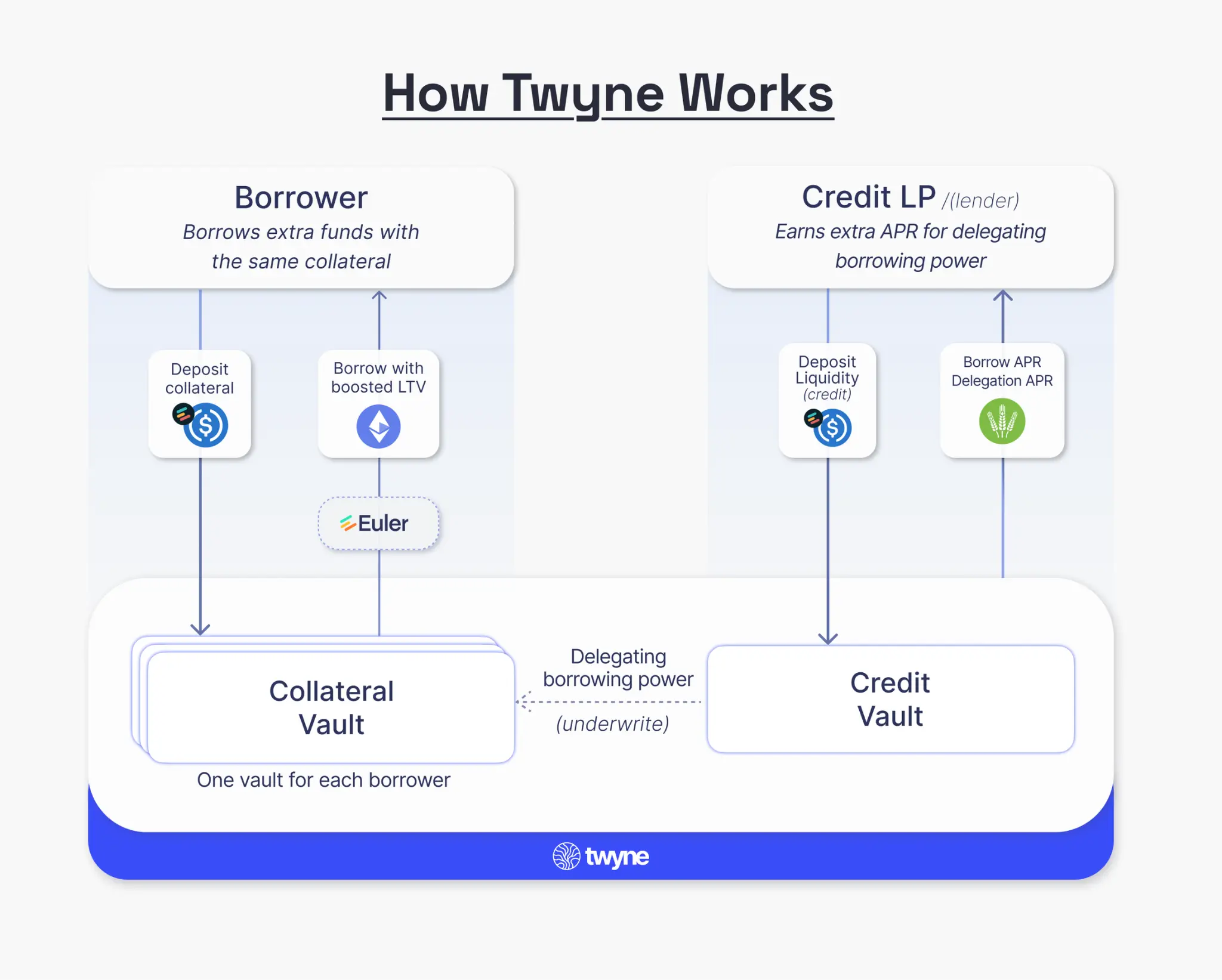

As an immutable intermediary layer built on lending protocols like Euler, it creates a trading market for lending capabilities. Its core functions include:

- Lenders (Credit LPs): Can use their unused lending capacity to support loans for others, thereby earning additional income.

- Borrowers: Can obtain capital beyond the limits of traditional lending platforms for use as a liquidation buffer or to enhance leverage.

This authorization layer releases the value of idle liquidity while maintaining the security and risk isolation of the underlying lending protocols.

Architecture Overview

Twyne is directly integrated into established lending markets like Euler, allowing users to deposit IOU tokens (such as euler_USDC) that represent their underlying market positions. By depositing these tokens and authorizing their unused lending capacity, users can earn an additional annual percentage rate (APR) from borrowers, while borrowers can obtain a higher loan-to-value ratio (LTV) than the original market allows, using the same collateral.

This provides users with a second revenue stream:

Base Yield (Supply APY) + Authorized Yield (Twyne Delegation APY).

Three main use cases:

- Pure Credit Lenders (Credit LP): Maximizing Returns by Fully Authorizing Lending Capacity

  - Deposit IOU tokens into the Credit Vault, relinquishing their lending rights
  - Provide guaranteed loans to borrowers through Twyne
  - Receive twyneeulerUSDC tokens

  → In addition to the base lending annual yield (APY), earn extra authorized yield.

- Pure Borrowers: Enhancing Lending Capacity for Leverage or Increased Liquidation Buffer

  - Deposit IOU tokens into the Collateral Vault
  - Reserve additional lending capacity from the Credit Vault
  - Twyne will temporarily add these authorized credit limits to your account

  → Achieve a higher borrowing limit than allowed in the original protocol.

- Hybrid Model: Simultaneously Authorizing and Borrowing

  Split assets into two vaults:

  - Credit Vault: Authorize part of the lending capacity to earn returns
  - Collateral Vault: Borrow without using the entire lending capacity

  → Borrowing while earning stable returns from credit authorization.

- Credit LP (Lender)

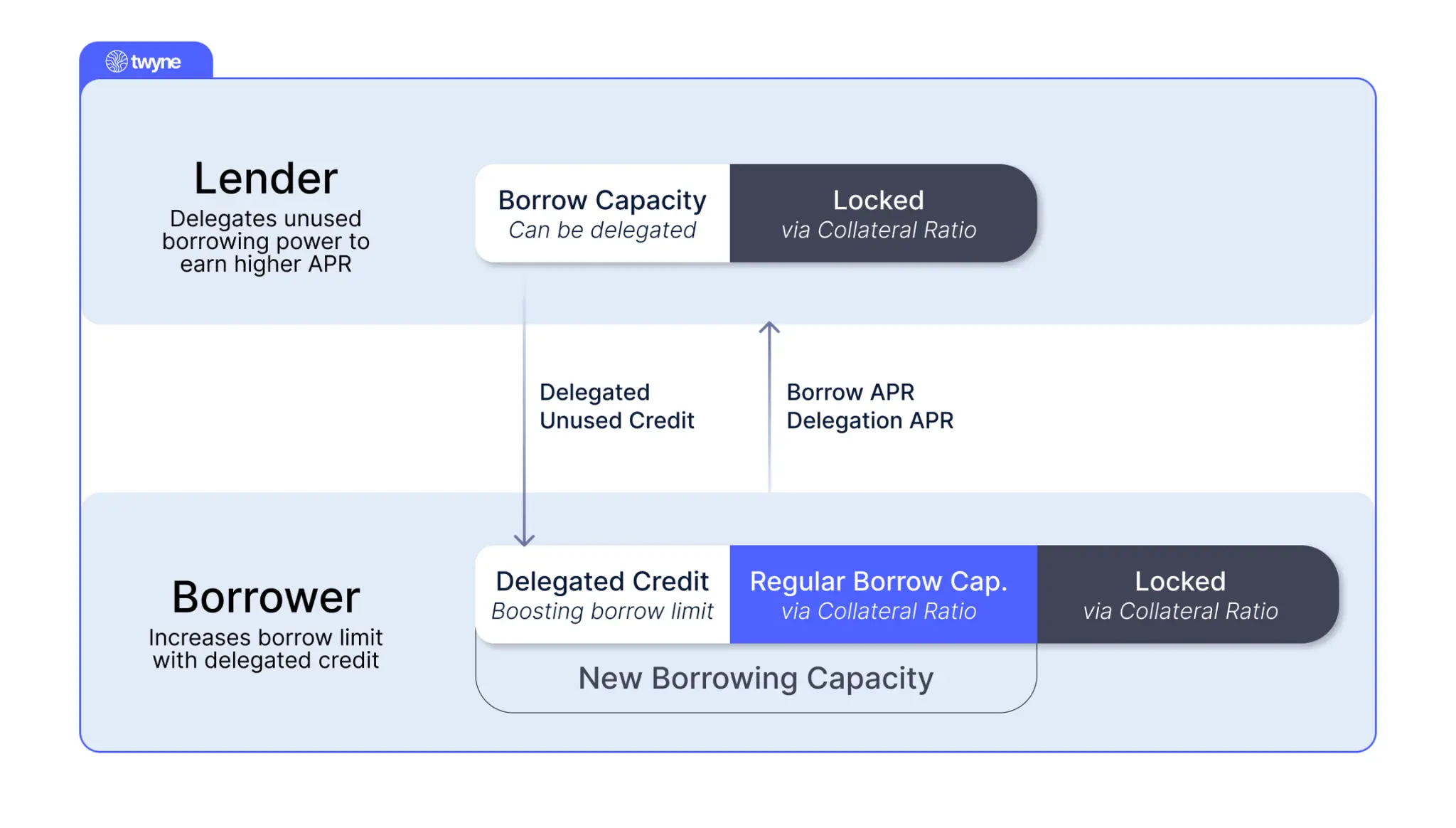

In Twyne, Credit LP (CLP) refers to users who provide assets to lending protocols like Euler or Aave but do not use collateral to borrow. They typically seek simple, passive income methods. In Twyne, they can go further:

CLPs can stake their receipt tokens (such as eUSDC, aETH) obtained in the lending market and authorize their unused lending capacity to other users.

How It Works

When CLPs authorize their lending capacity, they are essentially providing guarantees for borrowers' loans. In return for taking on additional risk, CLPs can earn an extra interest rate—referred to as the CLP Supply Rate—in addition to the base lending yield.

Total earnings for CLPs = Base Market Annual Yield (APY) + Authorized Annual Yield (APR)

- Borrowers

Borrowers on Twyne can obtain leverage beyond what their collateral allows in the original lending protocol. With Twyne, they can utilize the lending capacity authorized by CLPs (lenders) to temporarily enhance their loan-to-value ratio (LTV).

LTV Levels Enhanced by Twyne:

- High Volatility Asset Pairs (e.g., WETH-USDC): Up to 94% LTV → Achieving 14x Leverage (compared to 5.9x on Aave)

- Highly Correlated Asset Pairs (e.g., WETH-stETH): Up to 99% LTV

Workflow:

- Deposit collateral assets (e.g., eUSDC) into Twyne's Collateral Vault

- Reserve authorized lending capacity from CLPs

- Obtain a borrowing limit higher than the native limit allowed by the underlying protocol

→ Result: significantly enhanced borrowing capacity, enabling higher leverage or a stronger liquidation buffer.
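The workflow above can be made concrete with a small worked calculation. This is a minimal sketch under a simplifying assumption that is not stated in the source: reserved CLP credit is treated as backing the position alongside the borrower's own collateral, so the underlying market's LTV limit applies to the sum. The function names and the 83% native limit are hypothetical.

```python
def credit_needed(collateral: float, native_max_ltv: float, target_ltv: float) -> float:
    """Credit R to reserve so that native_max_ltv * (collateral + R) == target_ltv * collateral."""
    if not 0.0 < native_max_ltv < 1.0 or not 0.0 < target_ltv < 1.0:
        raise ValueError("LTV values must lie strictly between 0 and 1")
    if target_ltv <= native_max_ltv:
        return 0.0  # the underlying market already allows this LTV
    return collateral * (target_ltv / native_max_ltv - 1.0)


def boosted_borrow_limit(collateral: float, reserved_credit: float, native_max_ltv: float) -> float:
    """Borrow limit when reserved credit backs the position alongside the user's collateral."""
    return native_max_ltv * (collateral + reserved_credit)


collateral = 1_000.0    # value of the borrower's own collateral
native_max_ltv = 0.83   # hypothetical limit on the underlying market
target_ltv = 0.94       # boosted LTV quoted above for volatile pairs

reserved = credit_needed(collateral, native_max_ltv, target_ltv)
limit = boosted_borrow_limit(collateral, reserved, native_max_ltv)
print(f"reserve {reserved:.2f} of CLP credit, borrow up to {limit:.2f}")
```

Under these assumptions, the borrower reaches the boosted limit by reserving only a fraction of their own collateral value in CLP credit; Twyne's actual accounting may include safety buffers that this sketch ignores.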

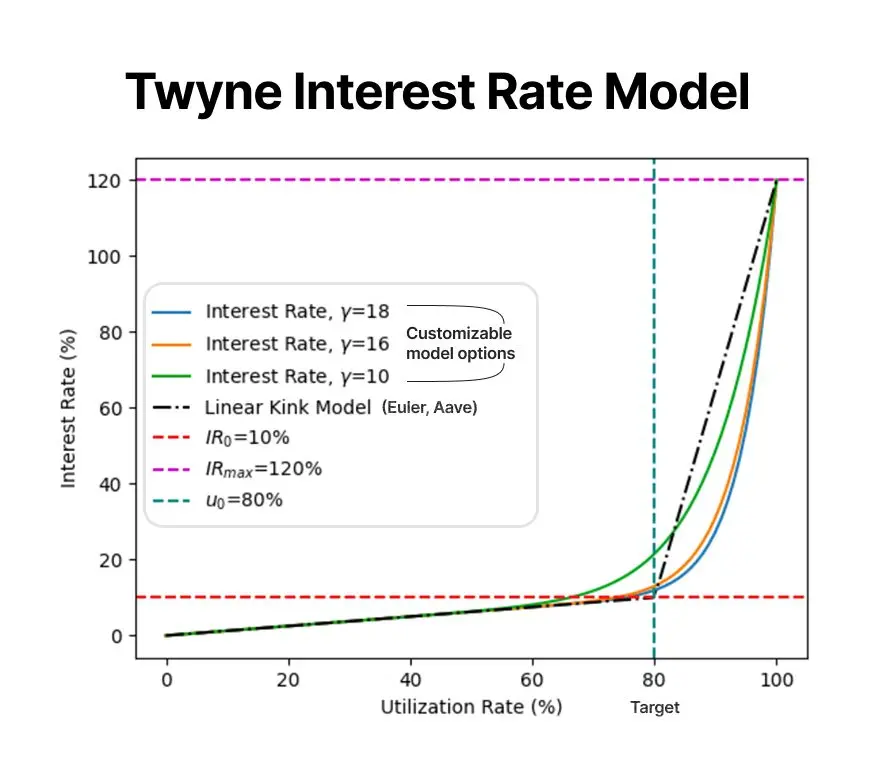

- Borrowing Interest Rate

On Twyne, borrowers must pay two parts of interest:

- Base Borrowing Rate—from the underlying lending protocol (e.g., Euler, Aave)

- CLP Supply Rate—the fee paid for obtaining authorized lending capacity

About the CLP Supply Rate:

- This rate is dynamically adjusted and increases as resource utilization rises

- When demand is at or below target utilization: rates stay low

- When Demand > Target Utilization: Rates rise sharply

Borrowers only need to pay the CLP Supply Rate for the additional authorized credit capacity, not for all liabilities. Therefore, even under a steep rate curve, the actual net interest cost is much lower than the headline rate suggests.
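To illustrate why the net cost stays moderate, here is a hedged sketch of a kinked utilization curve and the resulting blended rate. The curve shape and every parameter (`target`, `rate_at_target`, `max_rate`) are illustrative assumptions, not Twyne's published values; the only property taken from the text is that the CLP Supply Rate is charged on the reserved credit rather than on the whole debt.

```python
def clp_supply_rate(utilization: float, target: float = 0.80,
                    rate_at_target: float = 0.04, max_rate: float = 0.60) -> float:
    """Kinked curve: gentle slope up to the target utilization, steep slope above it."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if utilization <= target:
        return rate_at_target * utilization / target
    excess = (utilization - target) / (1.0 - target)
    return rate_at_target + (max_rate - rate_at_target) * excess


def blended_borrow_rate(debt: float, reserved_credit: float,
                        base_borrow_rate: float, clp_rate: float) -> float:
    """Annual interest divided by debt, with the CLP rate applied to reserved credit only."""
    interest = debt * base_borrow_rate + reserved_credit * clp_rate
    return interest / debt


# A steep 20% CLP rate on 100 units of reserved credit adds only 2 points
# to the cost of a 1,000-unit loan that carries a 5% base rate.
print(blended_borrow_rate(debt=1_000.0, reserved_credit=100.0,
                          base_borrow_rate=0.05, clp_rate=0.20))
```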

Commentary

Twyne effectively releases idle lending capacity in the lending market through a non-custodial credit authorization layer, enhancing capital utilization efficiency. Lenders (CLPs) can earn additional returns while ensuring risk isolation; borrowers can leverage authorized capacity for higher leverage or liquidation buffers, significantly enhancing lending flexibility and capital efficiency.

However, lenders must bear higher risks than traditional passive lending, and if liquidation is not timely, they may face losses. Borrowing rates will also fluctuate with the utilization of authorized capacity. Overall, Twyne balances yield enhancement with risk control, providing innovative capital utilization solutions for both lending parties.

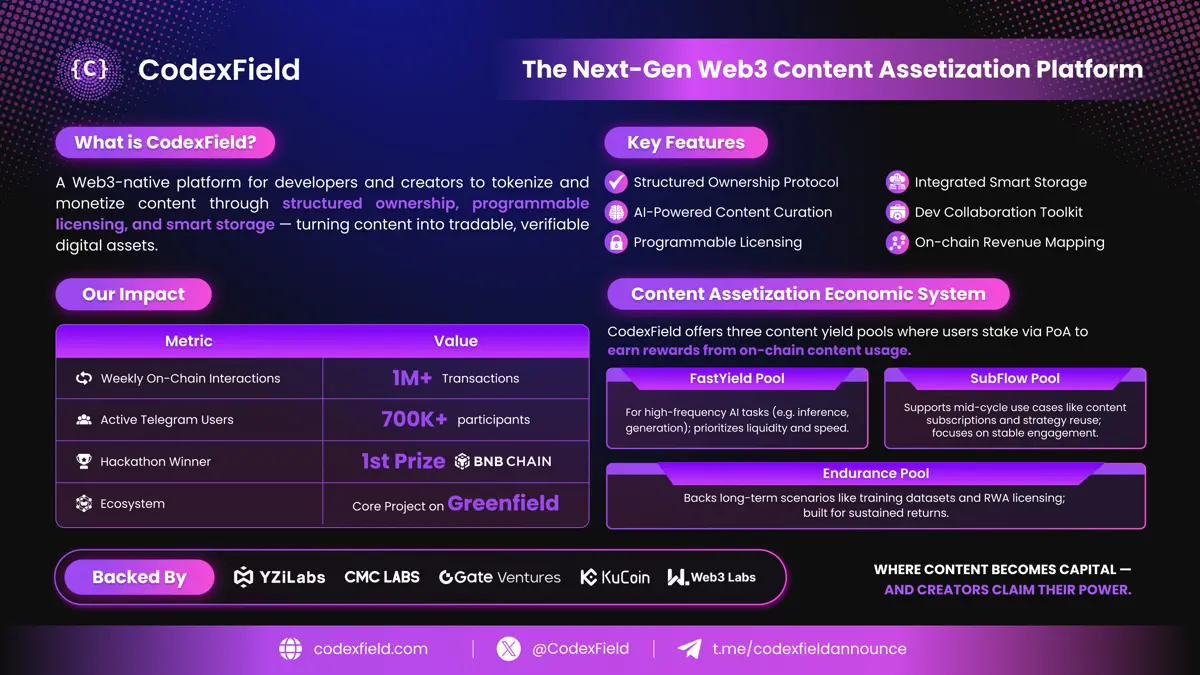

1.2. Interpretation of CodexField, led by Gate.io—A Decentralized Code and Content Creation Platform Built on BNB Greenfield

Introduction

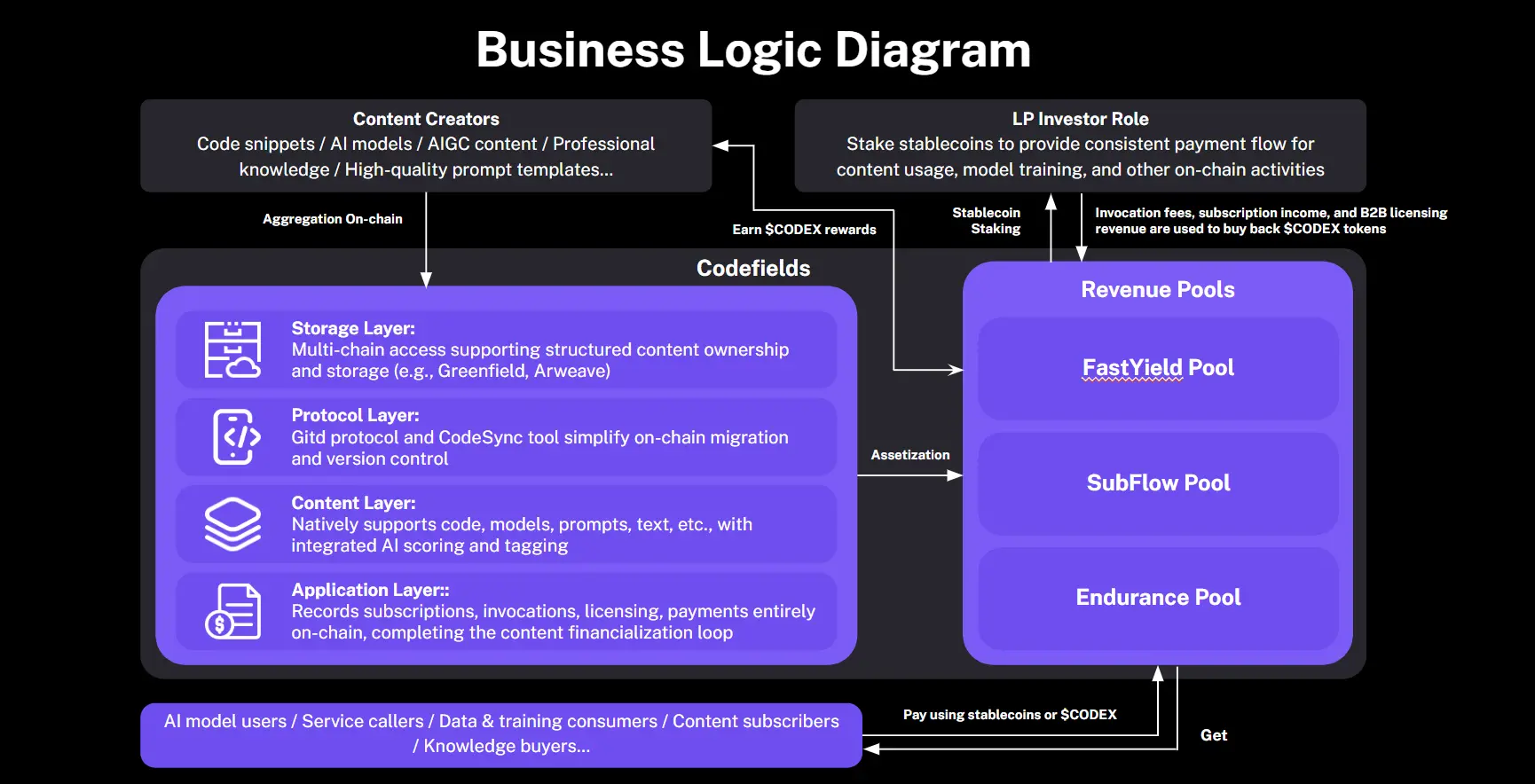

CodexField is built on BNB Greenfield and BNB Smart Chain. Its goal is to achieve on-chain ownership confirmation, authorized use, transaction circulation, and revenue mapping of content. The project sets out to redefine "digital content" by treating it as a new type of real-world asset (RWA). The platform supports the standardization and assetization of various content forms, including code, AI models, AIGC works, and knowledge assets, and transforms usage behavior into verifiable economic value through on-chain mechanisms, driving the fundamental shift of information into assets.

CodexField provides full-stack Web3 infrastructure to achieve ownership confirmation, permission management, and financialization of content assets—empowering developers, creators, and AI innovators to unlock new value in their work.

Architecture Overview

- Four-Layer Architecture Design

Storage Layer: Multi-Link Entry and Smart Distribution

In the storage layer, CodexField integrates multiple mainstream decentralized storage protocols (such as BNB Greenfield, Arweave, Filecoin, CESS, etc.), building a powerful and adaptable content hosting infrastructure. The platform introduces a "hot and cold data layering" mechanism, dynamically matching the optimal storage path based on content usage frequency and permission levels, ensuring rapid response for high-frequency content and efficient archiving for long-term data.

Additionally, the platform supports on-chain ownership confirmation of structured content, with all content files bound to metadata and hash signatures, ensuring verifiability and immutability between the platform and application scenarios. By combining off-chain CDN and DePIN accelerated networks, CodexField achieves global censorship-resistant content distribution and ensures low-latency access.

Protocol Layer: Ownership, Collaboration, and Permission Control

The protocol layer is the core pillar of the CodexField content asset framework, primarily consisting of two modules:

- Gitd Protocol: A native on-chain Git collaboration protocol that supports version control, merge reviews, and contribution records for code assets. It ensures the security of on-chain data while providing developers with a complete collaboration pipeline.

- CodeSync Tool: Supports one-click migration of GitHub projects to the blockchain, retaining complete commit history, branch structure, and contributor identities, ensuring a smooth transition from Web2 to Web3.

CodexField also introduces programmable authorization contracts, allowing creators to define customized licensing strategies (such as read-only, usage calls, training rights, commercial distribution, etc.). These contracts execute fine-grained control and automatic settlement on-chain, ensuring transparency and traceability of content interactions.
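A licensing strategy of this kind can be pictured as a set of rights checked on every request. The sketch below is an off-chain illustration in Python, not CodexField contract code; the `Right` and `License` names and the price field are hypothetical.

```python
from dataclasses import dataclass
from enum import Flag, auto


class Right(Flag):
    """Licensing dimensions named in the text."""
    READ = auto()
    CALL = auto()
    TRAIN = auto()
    COMMERCIAL = auto()


@dataclass(frozen=True)
class License:
    granted: Right
    price_per_use: int  # hypothetical settlement amount in the smallest token unit


def is_allowed(license_: License, requested: Right) -> bool:
    """A request passes only if every requested right has been granted."""
    return (license_.granted & requested) == requested


read_and_call = License(granted=Right.READ | Right.CALL, price_per_use=5)
print(is_allowed(read_and_call, Right.CALL))
print(is_allowed(read_and_call, Right.TRAIN))
```

Modeling rights as combinable flags keeps the check to a single bitwise comparison, which is also how such permissions are commonly packed in contract storage.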

Content Layer: Structured Asset Framework and AI Recognition Engine

The content layer covers the asset types CodexField supports, including code, models, prompts, AIGC content, and strategic documents. The platform defines unified structural standards, metadata templates, and tagging systems for each content type, so that assets remain recognizable and composable during ownership confirmation, indexing, referencing, and composable calls.

CodexField also integrates an AI-based scoring and semantic tagging system that automatically evaluates and annotates the quality, credibility, and applicable scenarios of content. This provides important inputs for content recommendations, risk alerts, and financial pricing. Content behavior data (such as usage frequency, citation counts, and revenue) is also used for reputation scoring and asset valuation models.

Application Layer: Integrated Platform for Usage and Financial Circulation

The application layer connects users with content assets, forming a complete "creation—subscription—usage—revenue" feedback loop. The platform supports various on-chain interactions, including subscription access, call authorizations, model training, and secondary citations. All usage behaviors are recorded on-chain and serve as the basis for revenue distribution and reputation accumulation.

By integrating DeFi modules, social layer modules (such as developer profiles and content influence indices), and platform governance structures, CodexField is building a content asset application platform that balances usage efficiency, incentive alignment, and governance consensus.

- Supported Content Asset Types and Structural Definitions

CodexField adopts a modular design to handle content asset types, introducing a unified metadata protocol, on-chain behavior tracking system, and revenue mapping mechanism to construct abstract standards across content types.

The platform currently supports the following main asset types:

- Native Code Assets:

Includes function code snippets, open-source modules, smart contract templates, and strategy scripts. These assets support native version management and collaboration tracking through the Gitd protocol. Using the CodeNFT structure, on-chain ownership proofs are generated, enabling modular calls, composable deployments, and on-chain transactions.

- AI Models and Data Assets:

Includes model weights, training corpora, inference APIs, and annotated datasets. Ownership and licensing logic are represented through ModelNFTs and DatasetNFTs, while the zkAccess protocol ensures permission verification and privacy protection for call behaviors.

- AIGC Generated Content:

Assets such as text, images, audio, and video generated by AI models are automatically bound to their original prompts and generation path metadata. Hash snapshots are recorded on-chain, supporting a standardized "snapshot generation → ownership certification → snapshot transaction" process.

- Structured Knowledge Assets:

Includes courses, tutorials, research papers, and trading strategies, which can be packaged into ContentBundle structures and put on-chain. They support periodic subscriptions, usage tracking, and revenue mapping, managed through the SubscriptionProtocol.

- Prompt Templates and Generation Records:

Prompt engineering assets in large language models and image generation models are tokenized through PromptNFTs. These assets support ownership certification, reuse, and tracking, equipped with version control and chain management mechanisms that link "prompt → output."

Under this system, each asset type is abstracted as an on-chain object, containing the following core modules:

- Proof of Ownership:

Each asset generates an ERC-721 or ERC-1155 NFT certificate, binding the content hash, author identity (DID), and original timestamp (T0) together, ensuring atomic-level ownership confirmation.

- Authorization Rules System:

A programmable permission control layer built on the CodexAuth protocol, supporting multi-dimensional authorizations—such as viewing, training, commercial use, and composable calls. These permissions are registered and verified on-chain through smart contracts.

- Usage Behavior Tracking:

All interaction behaviors (such as calls, subscriptions, and training) are recorded on-chain through the TraceIndex system, supporting fine-grained tracking and historical visualization of content usage behaviors.

- Revenue Mapping:

All usage behaviors are mapped to the platform's three core DeFi pools through FlowContract, creating real cash flow paths for content assets and enabling automatic revenue distribution on-chain.

Additionally, CodexField's asset standard design fully integrates cross-chain compatibility and modular composability. Asset metadata follows standards such as EIP-721 Metadata and DID-ContentMapping, ensuring consistency and traceability in a multi-chain environment.

- Operational Mechanism

CodexField has established an on-chain operational framework centered around content "certification—usage—revenue distribution," transforming creative behaviors into traceable, tradable, and settleable "real-world asset paths" (RWA Path), building a structured, closed-loop content asset ecosystem.

During the content creation and upload phase, creators can upload various materials through the platform, including code modules, AI models, multimedia content, training data, etc., uniformly accessing decentralized storage networks. CodexField natively supports protocols like Greenfield, Arweave, and Filecoin, leveraging multi-chain storage compatibility mechanisms to achieve censorship resistance and globally accessible content hosting. Additionally, the platform provides the Gitd protocol and CodeSync tool to help developers migrate GitHub repositories, model documents, and knowledge assets with one click, ensuring smooth on-chain integration of content assets.

In the certification and structural standardization phase, each piece of content automatically generates an on-chain certification proof upon upload, binding the creator's identity. The platform adopts a unified data structure and metadata standards to structurally define content types, usage methods, version management, dependencies, etc. This standardization process enhances the recognizability and composability of content assets, laying the foundation for subsequent transactions and authorizations.

The platform supports on-chain authorization and trading of content assets. Creators can set different levels of access and usage permissions as needed (e.g., browsing, citing, calling, training, commercial distribution, etc.). All authorization operations are executed and recorded through smart contracts, ensuring that every usage is automatically settled and traceable on-chain. Users can interact with content assets through subscription models, pay-per-use access, composable calls, and more.

In the revenue distribution phase, CodexField transforms all content usage behaviors into on-chain data streams and maps them to the platform's native revenue architecture. The income generated from content calls is automatically distributed according to preset logic, with revenues attributable to uploaders, collaborators, asset holders, or injected into the platform's structured revenue pool to incentivize quality content and liquidity providers.
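The "preset logic" for distribution is not specified in the source. One common pattern, shown here purely as an assumption, is a fixed split expressed in basis points, with integer arithmetic so that no value is lost to rounding. The recipient names and the 70/20/10 split are hypothetical.

```python
def split_revenue(amount: int, shares_bps: dict[str, int]) -> dict[str, int]:
    """Split an integer amount by basis-point shares that must total 10,000."""
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must sum to 10,000 basis points")
    payouts = {who: amount * bps // 10_000 for who, bps in shares_bps.items()}
    # Integer division can leave a small remainder; assign it to the first recipient.
    remainder = amount - sum(payouts.values())
    first = next(iter(shares_bps))
    payouts[first] += remainder
    return payouts


shares = {"uploader": 7_000, "collaborators": 2_000, "revenue_pool": 1_000}
print(split_revenue(1_000, shares))
```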

Under this mechanism, CodexField achieves a deep coupling of content usage and economic incentives, ensuring that every genuine interaction directly rewards creators and supporters, truly realizing the content financial logic of "usage equals monetization."

- Content Certification Standards and Smart Contract Models

CodexField has built a complete set of content certification and behavior binding mechanisms, supporting the certification, calling, trading, and behavior tracking of heterogeneous content such as code, models, and audio-visual materials.

- Core Structure: CodexID + ERC-7260 Extension Standard

After uploading any content, the system automatically generates a unique identity ID (CodexID), which not only proves you are the original creator but also binds the content's version records, calling paths, metadata templates, and other information. Through CodexField's self-developed ERC-7260 standard, these assets can support advanced features such as composable calls, permission control, and state tracking.

- On-Chain Certification Process

The certification process is very simple: you upload content → the tool automatically formats and adds metadata → local signature generates an "upload declaration" → one-click submission for registration → obtain an independent CodexID. If needed, it can also be synchronized to other chains like Ethereum and Solana.
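The steps in this flow (attach metadata, sign locally, register, receive an ID) can be sketched as follows. Everything here is illustrative: the field names, the `codex-` prefix, and the ID derivation are hypothetical, and HMAC is used only as a stand-in for a wallet signature so the example runs with the standard library.

```python
import hashlib
import hmac
import json


def build_declaration(content: bytes, author_did: str, created_at: int, metadata: dict) -> dict:
    """Bind the content hash to the author identity, a timestamp, and metadata."""
    return {
        "content_hash": hashlib.sha256(content).hexdigest(),
        "author": author_did,
        "created_at": created_at,
        "metadata": metadata,
    }


def canonical_bytes(declaration: dict) -> bytes:
    """Serialize deterministically so the same declaration always signs the same bytes."""
    return json.dumps(declaration, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_locally(declaration: dict, secret_key: bytes) -> str:
    # Stand-in for a wallet signature; a real flow would use an asymmetric key pair.
    return hmac.new(secret_key, canonical_bytes(declaration), hashlib.sha256).hexdigest()


def derive_content_id(declaration: dict, signature: str) -> str:
    """Hypothetical ID: a short digest over the signed declaration."""
    digest = hashlib.sha256(canonical_bytes(declaration) + signature.encode("ascii")).hexdigest()
    return "codex-" + digest[:16]


declaration = build_declaration(b"print('hi')", "did:example:alice", 1_700_000_000, {"type": "code"})
signature = sign_locally(declaration, b"demo-key")
print(derive_content_id(declaration, signature))
```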

- Native Features

Every piece of content can track who used it, how it was used, and how much money was earned. For example, you can see who cited your model, who trained it, and who resold it, thus opening up the revenue logic of "usage equals monetization." It also supports DAO governance for dynamic adjustment of authorization strategies.

- Authorization Models and Behavior Binding Mechanisms

CodexField has built a programmable authorization mechanism based on smart contracts, allowing for detailed control over content access, calling, training, commercial use, and more, while achieving automatic settlement and revenue distribution.

- Authorization Dimension Design: You can set "who can see, who can use, whether training is allowed, whether commercial use is permitted" for your content, and you can also set access prices, calling frequencies, time windows, and other conditions. The authorization strategy is written into the NFT smart contract and is fully traceable on-chain.

- Smart Contract Verification and Behavior Credentials: Each usage behavior automatically generates an "on-chain receipt," which can be used for revenue sharing, settlement, and subsequent credit accumulation. Any cooperating party (such as an AI platform) can verify permissions and make payments in real-time.

- Abuse Prevention Mechanism: The platform introduces a "pre-authorization + real-time payment" mechanism: users first lock the payment credentials, and only after the calling action is completed does the system trigger the payment, ensuring that every usage is paid for, and creators do not suffer losses.
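The "pre-authorization + real-time payment" idea resembles an escrow: funds are locked before the call and released to the creator only once the call completes. The following toy ledger is a sketch of that pattern under this reading; the class and method names are hypothetical and no on-chain behavior is implied.

```python
class PreAuthEscrow:
    """Toy ledger: funds are locked before a call and released only after it completes."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}
        self.locks: dict[str, tuple[str, int]] = {}  # call_id -> (payer, amount)

    def deposit(self, account: str, amount: int) -> None:
        self.balances[account] = self.balances.get(account, 0) + amount

    def pre_authorize(self, call_id: str, payer: str, amount: int) -> None:
        """Lock the payment up front so the call cannot go unpaid."""
        if call_id in self.locks:
            raise ValueError("call already pre-authorized")
        if self.balances.get(payer, 0) < amount:
            raise ValueError("insufficient balance to lock payment")
        self.balances[payer] -= amount
        self.locks[call_id] = (payer, amount)

    def settle(self, call_id: str, creator: str, call_completed: bool) -> None:
        """Pay the creator if the call completed, otherwise refund the payer."""
        payer, amount = self.locks.pop(call_id)
        recipient = creator if call_completed else payer
        self.balances[recipient] = self.balances.get(recipient, 0) + amount


escrow = PreAuthEscrow()
escrow.deposit("user", 10)
escrow.pre_authorize("call-1", "user", 4)
escrow.settle("call-1", "creator", call_completed=True)
print(escrow.balances)
```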

Commentary

CodexField's advantage lies in its construction of a complete, structured on-chain content asset system that covers the entire process of content ownership confirmation, usage authorization, behavior tracking, and revenue distribution. It supports on-chain transactions for various types of content, including code, models, AIGC, and knowledge, and implements a "pay-as-you-use" content financial mechanism through the ERC-7260 standard and smart contracts, greatly incentivizing creators to produce and circulate high-quality content. The platform also possesses powerful technical features such as Git toolchain integration, multi-chain storage compatibility, and behavior auditability.

However, challenges remain: the barrier to entry for users is relatively high, content quality control and intellectual property dispute resolution need strengthening, and both the liquidity of content assets and integration with external protocols are still at an early stage.

2. Key Project Details of the Week

2.1. Analysis of Trusta.AI, Backed by Consensys and Starknet: Blockchain and AI-Based Identity Verification and Sybil Defense Solutions

Introduction

Trusta.AI's vision is to establish a crypto-smart (Crypto+AI) network based on trusted identities. In this ecosystem encompassing human intelligence and artificial intelligence, all data, reputation, and credit will be accumulated based on Trusta identities. Ultimately, it aims to create a universal credit infrastructure for all agents (human intelligence + artificial intelligence).

Feature Analysis

- Proof of Humanity: AI and Data-Driven Framework

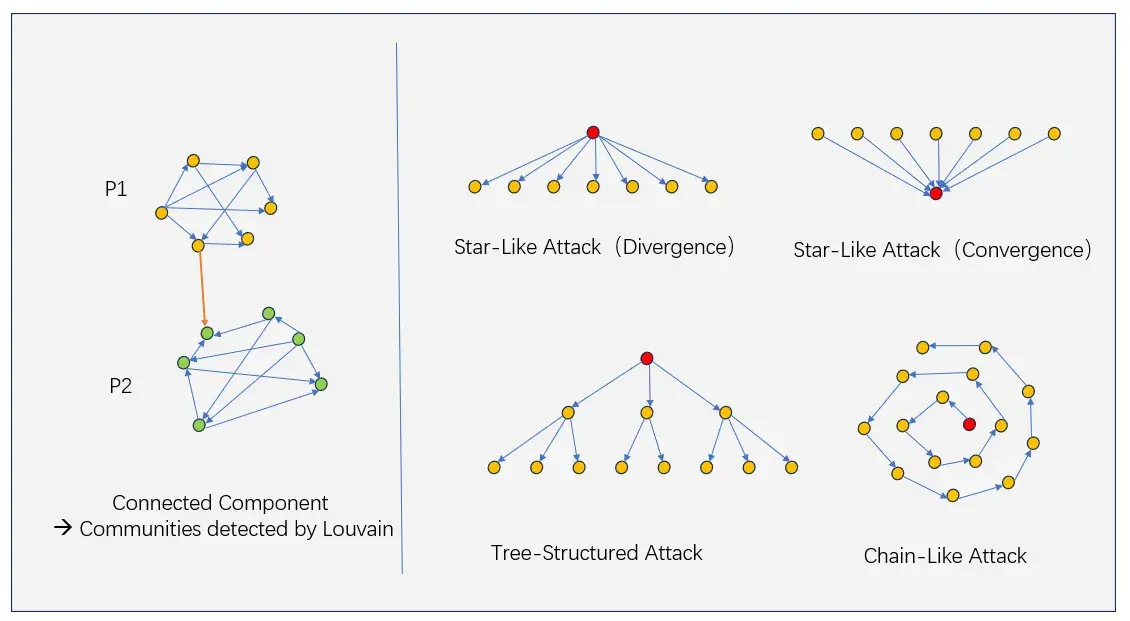

Sybil attackers automate interactions between their accounts using bots and scripts, leading to the aggregation of these accounts into malicious communities. Trusta's two-stage AI-ML framework identifies Sybil communities through clustering algorithms:

Stage One: Uses community detection algorithms like Louvain and K-Core to analyze Asset Transfer Graphs (ATGs) to detect closely connected suspicious Sybil groups.

Stage Two: Calculates user profiles and activities for each address. The K-means clustering algorithm optimizes clustering by filtering out dissimilar addresses to reduce false positives from the first stage.

Overall, Trusta first uses graph mining algorithms to identify coordinated Sybil communities. Then, additional user analysis filters out outliers, improving accuracy by combining connectivity and behavioral patterns to achieve robust Sybil detection.

Stage One: Community Detection on ATGs

Trusta analyzes asset transfer graphs (ATGs) between EOA accounts. Entities like bridges, exchanges, and smart contracts are removed so that the analysis focuses on relationships between users. Trusta has developed proprietary analytical methods to detect and remove central addresses, generating two ATGs:

- General Transfer Graph: Contains token transfer edges between any addresses.

- Gas Provision Network: Edges represent the first provision of gas to an address.

The first gas transfer activates a new EOA, forming a sparse graph structure suitable for analysis. This also represents a strong relationship, as new accounts rely on their gas providers. The sparsity and significance of the gas network make it an important tool against Sybil attacks. Complex algorithms can mine these networks, while gas supply links highlight meaningful account activation relationships.
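As a rough illustration of how a gas provision network could be derived, the sketch below keeps, for each address, only the sender of its earliest incoming transfer. The `(timestamp, sender, receiver)` input format is an assumption; Trusta's actual pipeline and filtering are proprietary and not described in the source.

```python
def gas_provision_edges(transfers: list[tuple[int, str, str]]) -> dict[str, str]:
    """Map each address to the sender of its first incoming native-token transfer.

    `transfers` holds (timestamp, sender, receiver) tuples in any order.
    """
    first_funder: dict[str, str] = {}
    for _, sender, receiver in sorted(transfers, key=lambda t: t[0]):
        # setdefault keeps the earliest funder and ignores later transfers.
        first_funder.setdefault(receiver, sender)
    return first_funder


transfers = [(5, "A", "C"), (1, "B", "C"), (2, "A", "D"), (9, "C", "D")]
print(gas_provision_edges(transfers))
```

Because every address has at most one first funder, the resulting graph has at most one incoming edge per node, which is the sparsity the text refers to.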

ATG patterns detected as suspicious Sybil clusters

Trusta analyzes asset transfer graphs using community detection algorithms like Louvain and some known attack patterns to detect Sybil clusters, as shown in the figure:

- Star Divergence Attack: Addresses funded by the same source

- Star Convergence Attack: Addresses sending funds to the same target

- Tree Attack: Funds distributed in a tree topology

- Chain Attack: Funds sequentially transferred from one address to the next in a chain topology

The first stage generates preliminary Sybil clusters based solely on asset transfer relationships. Trusta further optimizes the results in the second stage by analyzing account behavior similarities.
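The two star patterns listed above can be spotted with a simple degree count, shown here as a minimal sketch. This is a plain heuristic for illustration, not the Louvain or K-Core community detection the text attributes to Trusta, and the `min_leaves` threshold is a hypothetical parameter.

```python
from collections import defaultdict


def star_patterns(edges: list[tuple[str, str]], min_leaves: int = 3):
    """Return (divergence, convergence) hubs from directed (source, target) transfer edges."""
    funded_by = defaultdict(set)  # source -> addresses it sent funds to
    paid_into = defaultdict(set)  # target -> addresses that sent it funds
    for source, target in edges:
        funded_by[source].add(target)
        paid_into[target].add(source)
    divergence = {hub: leaves for hub, leaves in funded_by.items() if len(leaves) >= min_leaves}
    convergence = {hub: leaves for hub, leaves in paid_into.items() if len(leaves) >= min_leaves}
    return divergence, convergence


edges = [("F", "a"), ("F", "b"), ("F", "c"),   # one source funds three addresses
         ("a", "S"), ("b", "S"), ("c", "S"),   # the same three pay into one target
         ("x", "y")]                           # unrelated transfer
divergence, convergence = star_patterns(edges)
print(divergence, convergence)
```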

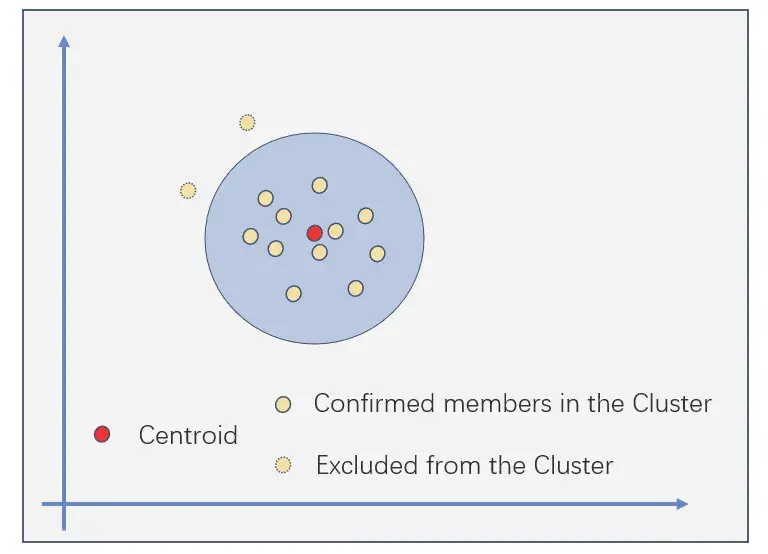

Stage Two: K-Means Refinement Based on Behavioral Similarity

Transaction logs reveal activity patterns of addresses. Sybil accounts may exhibit similarities, such as interacting with the same contracts or methods and transacting at similar times and in similar amounts. Trusta verifies the clusters from the first stage by analyzing two types of on-chain behavioral variables:

- Transaction Variables: These variables come directly from on-chain behavior, including first and most recent transaction dates, interacted protocols or smart contracts, etc.

- Profile Variables: These variables provide aggregated statistics of behavior, such as interaction amounts, frequency, and transaction volume.

A K-means-like process to optimize Sybil clusters

To optimize the preliminary Sybil clusters through a multi-dimensional representation of address behavior, Trusta employs a K-means-like process. The two K-means steps (assigning addresses to the nearest cluster center and recomputing the centers) iterate until convergence, resulting in optimized Sybil clusters.
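The source does not spell out the refinement procedure, so the following is only one plausible reading: for a single preliminary cluster, alternate between recomputing the centroid of the behavioral features and dropping the most dissimilar address, until every remaining member lies within a distance threshold. The feature vectors and `max_distance` are hypothetical, and features are assumed to be normalized to comparable scales.

```python
import math


def refine_cluster(features: dict[str, list[float]], max_distance: float) -> set[str]:
    """Iteratively drop the address farthest from the centroid until all members are close."""
    members = set(features)
    while len(members) > 1:
        dims = range(len(features[next(iter(members))]))
        # Update step: recompute the centroid of the current members.
        centroid = [sum(features[a][i] for a in members) / len(members) for i in dims]
        # Filter step: find the least similar member and test it against the threshold.
        farthest = max(members, key=lambda a: math.dist(features[a], centroid))
        if math.dist(features[farthest], centroid) <= max_distance:
            break  # converged: every member is within the threshold
        members.remove(farthest)
    return members


behavior = {
    "0xa": [1.0, 1.0],
    "0xb": [1.1, 0.9],
    "0xc": [0.9, 1.1],
    "0xd": [10.0, 10.0],  # behaves very differently from the rest
}
print(sorted(refine_cluster(behavior, max_distance=2.0)))
```

Removing one address per round, rather than all addresses beyond the threshold at once, keeps a single extreme outlier from dragging the centroid far enough to evict genuine members.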

The application of clustering-based algorithms in Sybil defense has several advantages at the current stage:

- Relying solely on historical Sybil lists (like HOP and OP Sybils) is insufficient, as new rollups and wallets continue to emerge. Using only previous lists cannot cover these new entities.

- There was no standard Sybil label dataset available in 2022 to train supervised models. Training on static Sybil/non-Sybil data raises problems of precision and recall: a single dataset cannot encompass all Sybil patterns, which limits recall, and misclassified users have no feedback mechanism, which hinders improvements in precision.

- Anomaly detection is not suitable for identifying Sybils, as their behavior is similar to that of ordinary users.

Trusta therefore concludes that a clustering-based framework is the most appropriate method at the current stage. As more addresses are labeled, however, it expects to explore supervised learning approaches, such as classifiers based on deep neural networks.

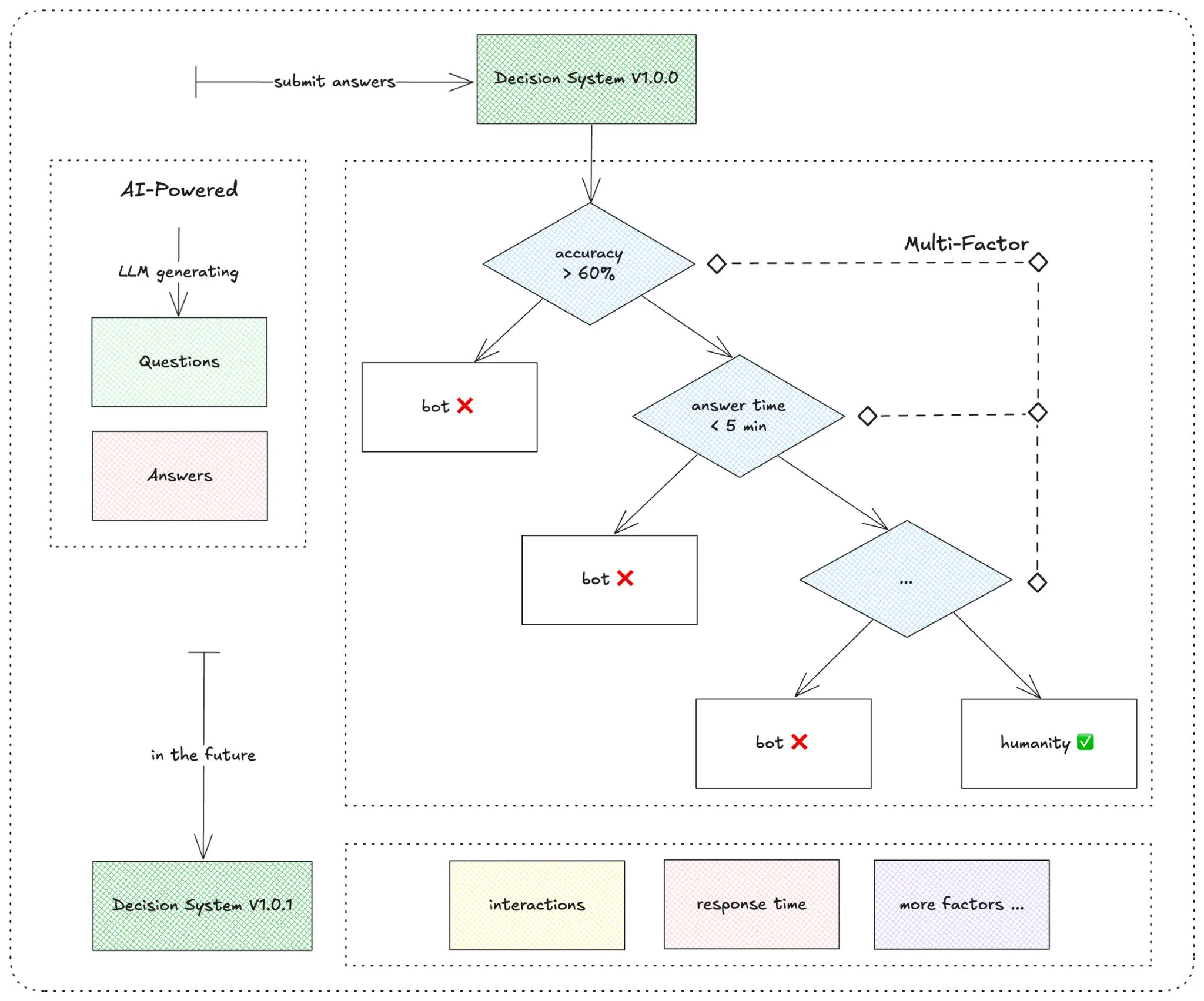

Test of Humanity (TOH): Knowledge-Based Identity Verification

Trusta's project "t-TON: The Trustworthy and Open Network", built on its Test of Humanity (TOH) work on TON, won the championship at the TON hackathon in winter 2024. Over the past six months, the number of TON accounts surged from over 10 million to over 100 million, raising concerns about Sybil attacks within the ecosystem. A Sybil attack refers to malicious actors using scripts to create and control a large number of fake Telegram/TON accounts, engaging in activities both in-app and on-chain, thereby improperly obtaining more airdrop tokens.

In the context of the TON ecosystem, the idea of TOH can be understood as follows: If your TON wallet actively participated in Catizen and received a $CATI airdrop, you would easily answer a question like, "What token did you receive in the airdrop? (A) $NOT, (B) $CATI, (C) $DOGS." In contrast, a scripted bot would struggle to summarize on-chain information and respond quickly, facing difficulties with such personalized questions.

Inspired by the Turing test, Trusta.AI designed a personalized Test of Humanity (TOH) system based on users' TON activities. It collects TON data, creates tailored questionnaires, and assesses whether the respondent is human based on their answers and performance. The "human test" system is simple, interactive, and personalized, featuring:

- Interactive and Simple: Users only need to answer a few straightforward questions, avoiding complex methods like iris or facial recognition.

- Personalized and Secure: The questionnaire is tailored to each account's individual behavior, making bulk attacks more difficult.

- Privacy Protection: Questions are generated based solely on on-chain data, minimizing the risk of personal privacy leakage.

- AI-Enhanced User Experience: ChatGPT is used to help design questions, including misleading answer options.

Trusta's verification is not just about checking whether answers are correct. It also evaluates users' response times, answer patterns, and other factors to make a comprehensive authenticity judgment. This multi-dimensional approach enhances its ability to detect bots. Additionally, Trusta introduces question variations using AI models, making it harder for attackers to predict or manipulate the system. These two methods ensure that Trusta's Knowledge-Based Authentication (KBA) system remains robust and adaptive.
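A toy version of the airdrop question from the Catizen example might look like the sketch below. The question template, the time limit, and the pass rule are all hypothetical; the source only states that correctness and response time both feed into the judgment, not how they are combined.

```python
import random
from dataclasses import dataclass


@dataclass
class Question:
    prompt: str
    options: list[str]
    answer: str


def airdrop_question(received: str, candidates: list[str],
                     rng: random.Random, n_options: int = 3) -> Question:
    """Build a multiple-choice question from a token the wallet actually received."""
    distractors = [token for token in candidates if token != received]
    options = rng.sample(distractors, n_options - 1) + [received]
    rng.shuffle(options)  # avoid a fixed position for the correct answer
    return Question("What token did you receive in the airdrop?", options, received)


def passes(question: Question, choice: str, seconds_taken: float,
           max_seconds: float = 15.0) -> bool:
    """Hypothetical rule: the answer must be correct and arrive within the time limit."""
    return choice == question.answer and seconds_taken <= max_seconds


question = airdrop_question("$CATI", ["$NOT", "$CATI", "$DOGS"], random.Random(7))
print(question.options, passes(question, "$CATI", seconds_taken=3.0))
```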

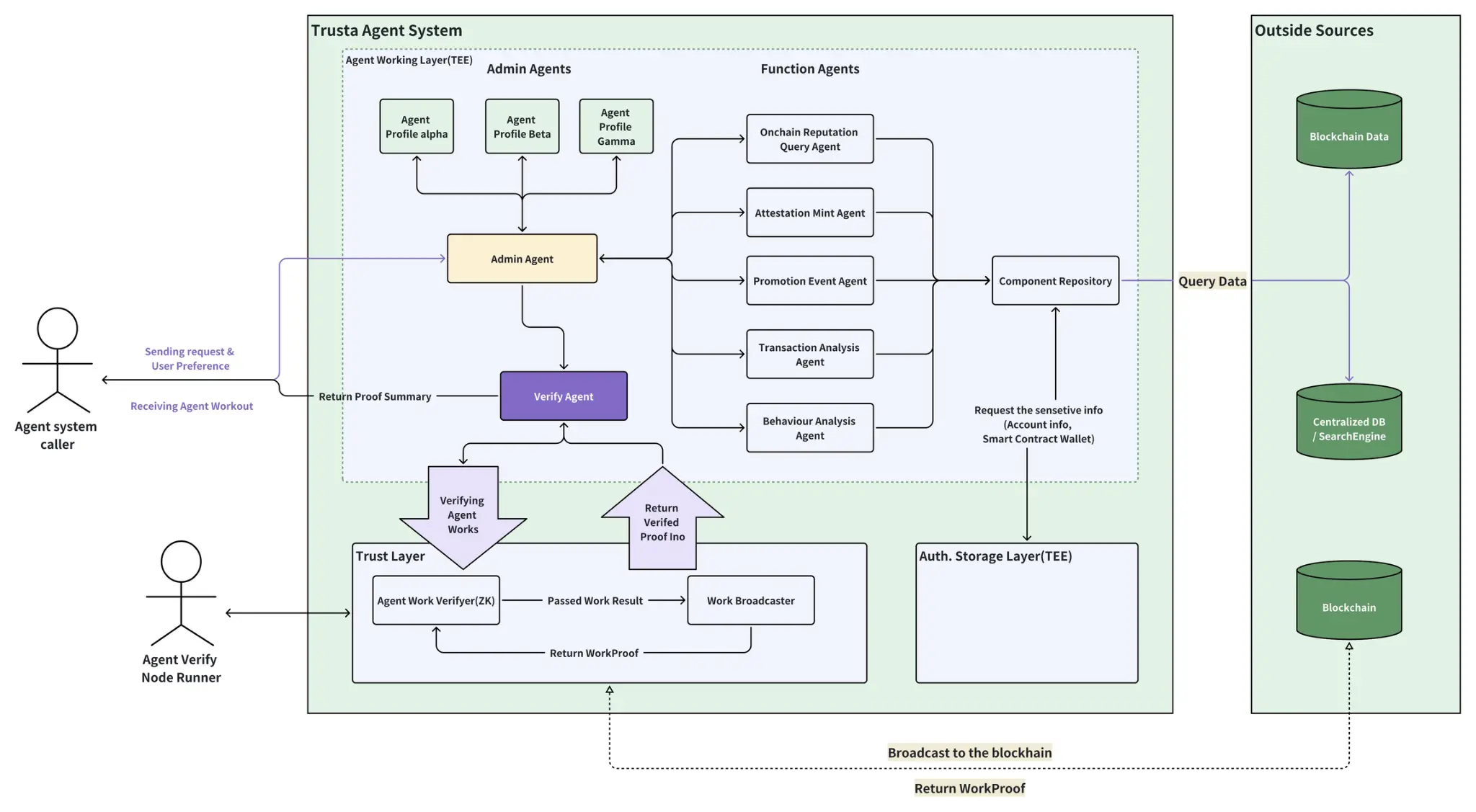

Trusta Authentication Agent System

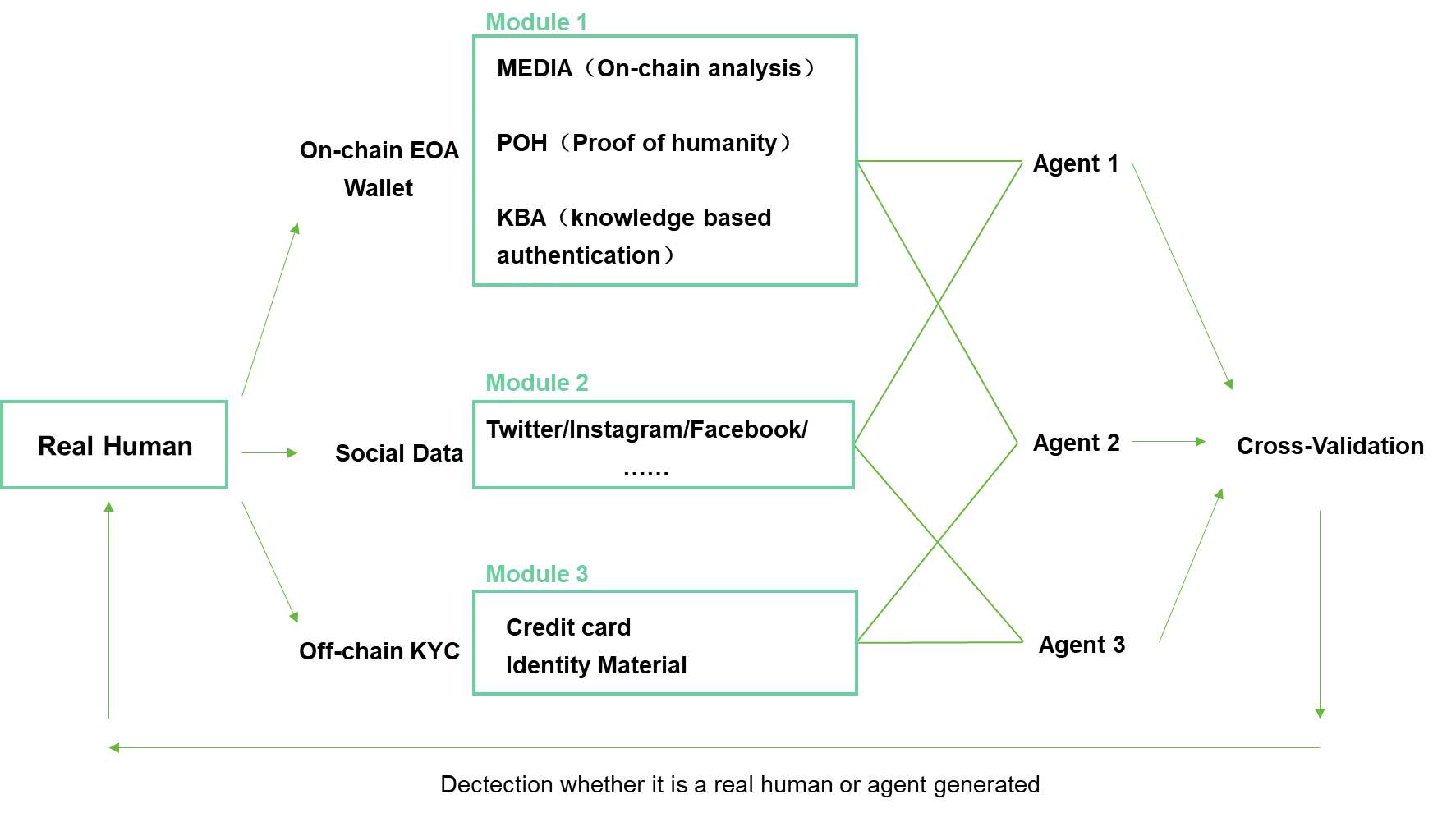

Distinguishing between human intelligence, underperforming bots, and artificial intelligence is crucial, and this complex task requires the collaborative effort of multiple modules.

The above figure illustrates the collaboration of several hypothetical modules. Furthermore, Trusta has just begun exploring multi-agent coordination methods to build an authentication agent system. The following diagram showcases this architecture. We are continuously improving this framework so that, in the age of AI, different entities can be assigned the correct identities.

- Trusta Verification Service

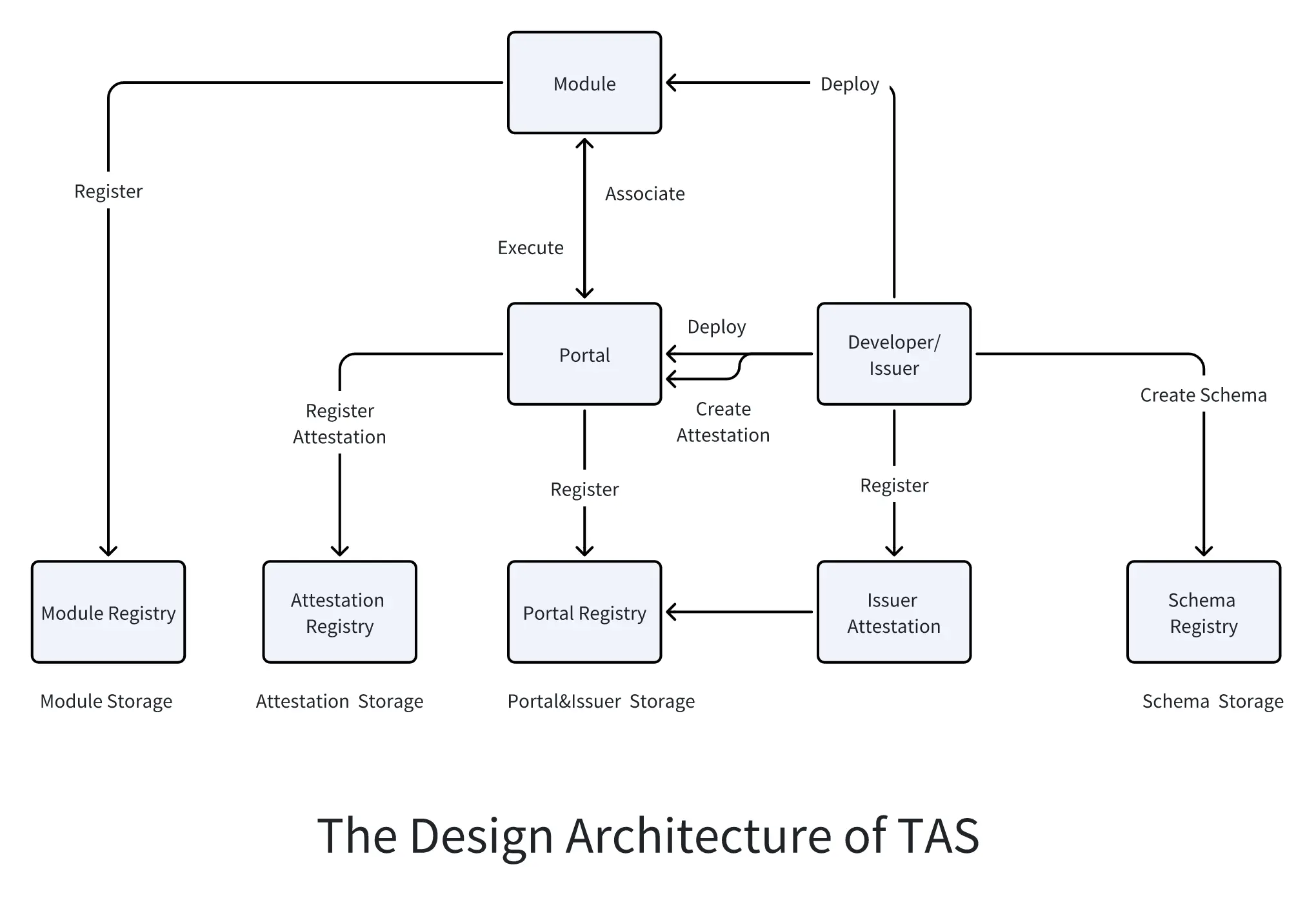

TAS (Trusta Verification Service) is a blockchain-based public verification registry. It serves as a public tool for decentralized identifier (DID) verification. Similar to EAS on Ethereum and Verax on LINEA, TAS acts as a simple primitive that allows any decentralized application (dApp) and protocol to access public data in a shared "data lake."

Verification (Attestation) is simply proof or evidence of something. It is typically a statement made by a verifier (or attester) regarding a specific matter. For example, a passport is a verification of a person's nationality, and a degree certificate is a verification of someone's educational qualifications. In the world of Web3, verification can prove digital identity, ownership of digital assets, trustworthiness of wallets, or certain primitives, among other things.

- Portals and Portal Registry: Portals are smart contracts registered in the "Portal Registry" and can be seen as the entry point to the TAS verification registry. All verifications are conducted through Portals. Specifically, a Portal executes specific verification logic through a series of modules, and all verifications go through these modules before being submitted to the registry. Portals are typically associated with specific issuers, who create dedicated Portals to publish their verifications, but Portals can also be open to anyone, allowing anyone to use them. There are two ways to create a Portal: using the default Portal or creating a custom Portal.

All Portals must be registered in the Portal Registry.

- Schema and Schema Registry: A Schema is a blueprint for verification, outlining the different fields it contains and their corresponding data types. Anyone can create a Schema in the registry, and once created, these Schemas can be reused by others. For example, to create a verification representing a person, we can define a Schema like this: (string username, string teamName, uint16 points, bool active). This defines a Schema containing four fields.

Schemas are stored in the Schema Registry as string values describing various fields.
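To make the schema format concrete, here is a minimal Python sketch that parses the example schema string above into typed fields and checks a record against it. The `Field`, `parse_schema`, and `matches_schema` names are hypothetical helpers written for illustration; they are not part of TAS, and real on-chain encoding and decoding would differ.

```python
from typing import NamedTuple


class Field(NamedTuple):
    dtype: str  # Solidity-style type name, e.g. "uint16"
    name: str   # field name, e.g. "points"


def parse_schema(schema: str) -> list[Field]:
    """Split a schema string such as "(string username, bool active)" into fields."""
    body = schema.strip()
    if body.startswith("(") and body.endswith(")"):
        body = body[1:-1]
    fields = []
    for part in body.split(","):
        tokens = part.split()
        if len(tokens) != 2:
            raise ValueError(f"expected '<type> <name>', got {part!r}")
        fields.append(Field(dtype=tokens[0], name=tokens[1]))
    return fields


def matches_schema(fields: list[Field], record: dict) -> bool:
    """Check that a record has exactly the schema's fields with plausible values."""
    if set(record) != {f.name for f in fields}:
        return False
    for f in fields:
        value = record[f.name]
        if f.dtype == "string":
            ok = isinstance(value, str)
        elif f.dtype == "bool":
            ok = isinstance(value, bool)
        elif f.dtype == "uint16":
            # bool is a subclass of int in Python, so exclude it explicitly.
            ok = (isinstance(value, int) and not isinstance(value, bool)
                  and 0 <= value <= 0xFFFF)
        else:
            ok = False  # types outside this sketch are rejected
        if not ok:
            return False
    return True


SCHEMA = "(string username, string teamName, uint16 points, bool active)"
fields = parse_schema(SCHEMA)
```

Because the schema is stored as a plain string, any consumer can derive the field list independently, which is what makes a schema reusable across issuers.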

- Module and Module Registry: Modules are smart contracts that inherit from the AbstractModule contract and are registered in the Module Registry. They allow verification creators to run custom logic to:

  - Verify whether the verification meets certain business logic

  - Verify signatures or zk-SNARKs

  - Perform other operations, such as transferring tokens or minting NFTs

  - Recursively create another verification

Modules are specified in the Portal, and every verification created through that Portal passes through the specified modules. Modules can also be chained together, with each module handling one discrete piece of functionality.
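The way a Portal routes every verification through its modules can be sketched off-chain in Python. This is an analogy under stated assumptions, not the TAS contract code: the `run` signature, the two example modules, and the list used as a registry are all hypothetical.

```python
from abc import ABC, abstractmethod


class AbstractModule(ABC):
    """Python stand-in for the on-chain AbstractModule (signature is assumed)."""

    @abstractmethod
    def run(self, payload: dict) -> None:
        """Raise ValueError to reject the verification."""


class MinPointsModule(AbstractModule):
    """Hypothetical business-logic check: require a minimum score."""

    def __init__(self, minimum: int) -> None:
        self.minimum = minimum

    def run(self, payload: dict) -> None:
        if payload.get("points", 0) < self.minimum:
            raise ValueError("points below minimum")


class ActiveOnlyModule(AbstractModule):
    """Hypothetical check: only active subjects may be attested."""

    def run(self, payload: dict) -> None:
        if not payload.get("active", False):
            raise ValueError("subject is not active")


class Portal:
    """Runs each module in order, then writes to the registry."""

    def __init__(self, modules: list[AbstractModule], registry: list[dict]) -> None:
        self.modules = modules
        self.registry = registry

    def attest(self, payload: dict) -> int:
        for module in self.modules:
            module.run(payload)  # any failure aborts before the write
        self.registry.append(payload)
        return len(self.registry) - 1  # index used as a stand-in for an ID
```

A payload that fails any module never reaches the registry, which mirrors how an on-chain revert in one module would cancel the whole transaction.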

- Attestation and Attestation Registry: Attestations are created through Portals, which ensures that each verification follows the logic of its specific domain. Every attestation is based on a Schema, which describes the structure of the verification data, i.e., its fields and their corresponding data types.

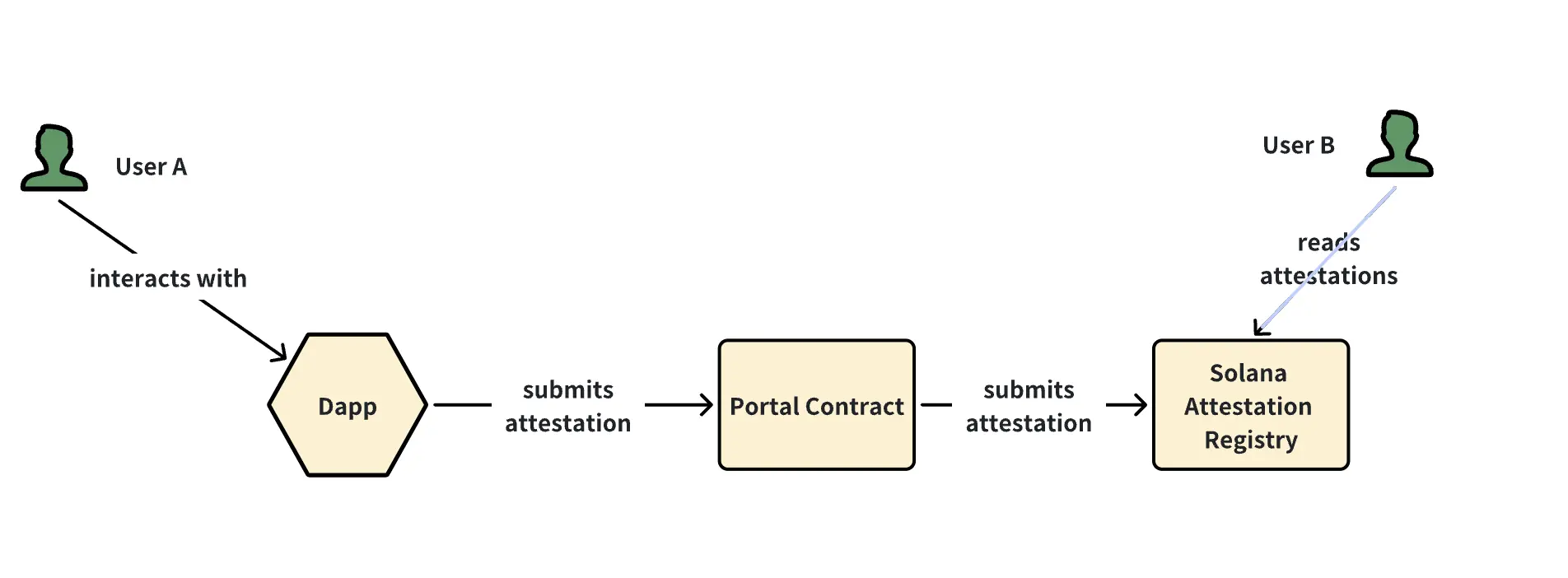

- How to Apply for and Publish Verifications

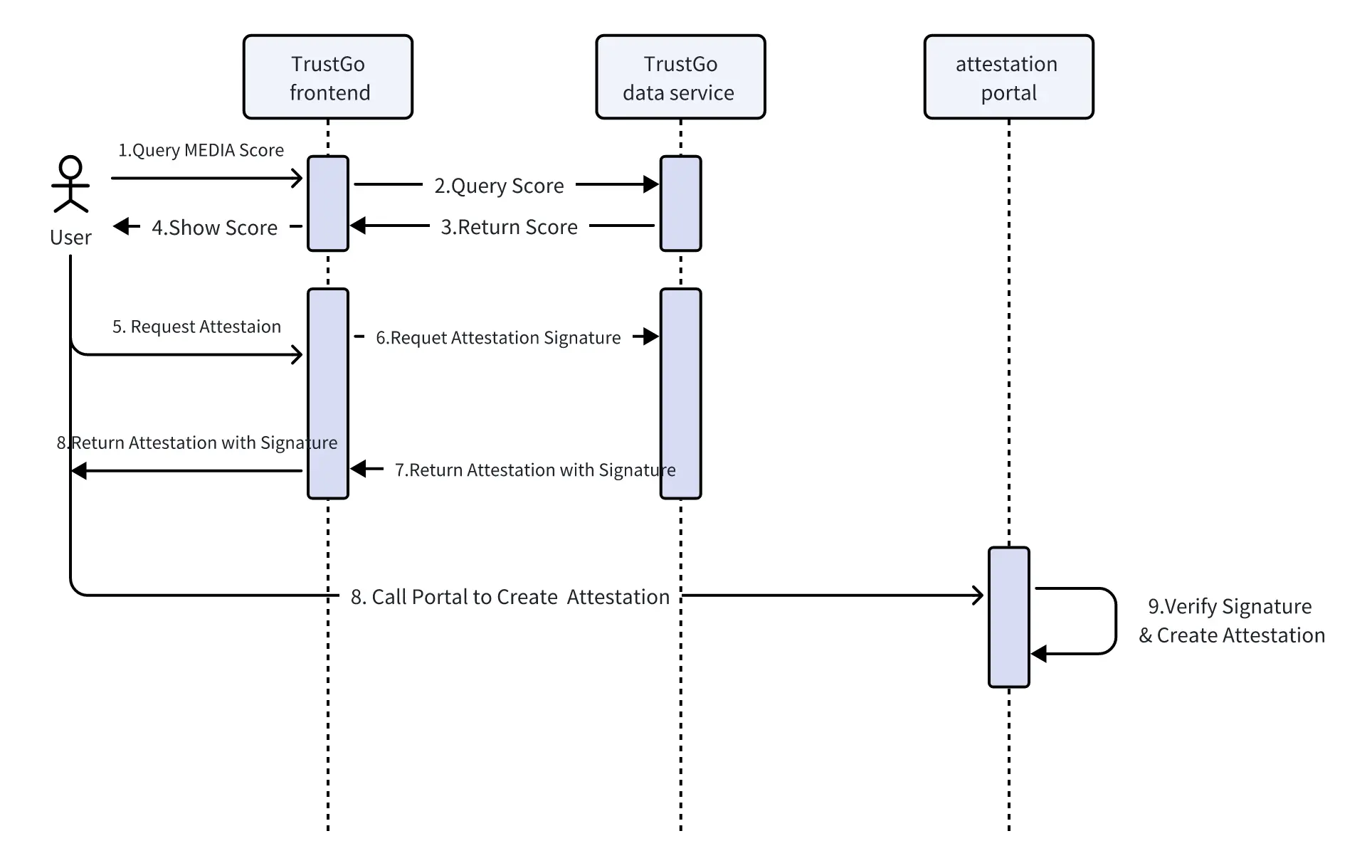

Suppose a dApp such as TrustGo has deployed its own Portal contract for publishing verifications, in this case MEDIA reputation verifications. Users apply for and receive a verification as follows:

- Users check their MEDIA scores on the TrustGo website.

- Users submit a verification request, triggering the TrustGo backend to generate and sign the verification data.

- The TrustGo frontend triggers the wallet to call the Portal contract.

- The Portal contract verifies the data signature and publishes the verification.
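The sign-then-verify flow in the steps above can be illustrated with a short Python sketch. It is a simplification under loud assumptions: an on-chain Portal would typically check a public-key signature, whereas this sketch substitutes a shared-key HMAC from the standard library so that it runs without extra dependencies. The key, the function names, and the data fields are all hypothetical.

```python
import hashlib
import hmac
import json

# Assumption: a demo key standing in for the backend's signing key.
BACKEND_KEY = b"demo-key-not-a-real-secret"


def encode(data: dict) -> bytes:
    """Serialize deterministically so signer and verifier hash the same bytes."""
    return json.dumps(data, sort_keys=True, separators=(",", ":")).encode()


def backend_sign(data: dict) -> str:
    """Step 2: the backend generates and signs the verification data."""
    return hmac.new(BACKEND_KEY, encode(data), hashlib.sha256).hexdigest()


def portal_attest(registry: list[dict], data: dict, signature: str) -> int:
    """Step 4: the Portal checks the signature, then publishes the verification."""
    expected = hmac.new(BACKEND_KEY, encode(data), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        raise ValueError("invalid signature")
    registry.append(data)
    return len(registry) - 1


# Step 3 is the wallet call that carries the data and signature to the Portal.
registry: list[dict] = []
data = {"wallet": "0xabc", "media_score": 87}  # hypothetical fields
attestation_id = portal_attest(registry, data, backend_sign(data))
```

If the user alters the score after the backend has signed it, the recomputed digest no longer matches and the Portal rejects the request, which is the property the signature check in the final step provides.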

Summary

Trusta combines blockchain and AI to deliver identity verification and Sybil defense, improving fraud detection through its decentralized verification service (TAS) and multi-dimensional behavioral analysis. Its strengths are efficient identity verification, a flexible verification framework, and the scalability and adaptability that come from a multi-agent system and modular design.

However, the system's complexity may make development and maintenance harder, and both its multi-module coordination and its data privacy protection need further optimization before it can be applied widely across different ecosystems.

### Industry Data Analysis

1. Overall Market Performance

1.1. Spot BTC vs ETH Price Trends

BTC

Analysis

Key resistance this week: $120,250, $120,700, $122,000

Key support this week: $118,500, $117,800, $117,000

ETH

Analysis

Key resistance this week: $4,000, $4,110

Key support this week: $3,870, $3,850, $3,800

2. Public Chain Data

2.1. BTC Layer 2 Summary

2.2. EVM & Non-EVM Layer 1 Summary

2.3. EVM Layer 2 Summary

### Macroeconomic Data Review and Key Data Release Points for Next Week

Last week, initial jobless claims fell for the sixth consecutive week, indicating that the job market remains resilient. Continuing claims, however, remain at a high level, which suggests that finding a new job after becoming unemployed has become slightly harder.

This week (July 28 - August 1), important macroeconomic data points include:

July 30: U.S. ADP employment numbers for July;

July 31: U.S. Federal Reserve interest rate decision (for the meeting ending July 30); U.S. core PCE price index for June, year-on-year;

August 1: U.S. unemployment rate for July; U.S. seasonally adjusted non-farm payrolls for July.

### Regulatory Policies

1. U.S. Passes GENIUS Act, Establishing Federal Regulatory Framework for Stablecoins

- The Senate passed the "GENIUS Act" in June. During "Crypto Week," the House of Representatives passed it on July 17, together with the "CLARITY Act" and the "Anti-CBDC Surveillance State Act," and the President subsequently signed the GENIUS Act into law, marking the official entry of the U.S. into a federal regulatory system for stablecoins. The CLARITY Act and the Anti-CBDC Surveillance State Act still require Senate approval.

- The GENIUS Act requires stablecoins to maintain 1:1 reserves in U.S. dollars or low-risk assets, undergo regular audits, and provide transparent disclosures, while prohibiting issuers of U.S.-licensed payment stablecoins from paying interest to holders.

- Together, this legislation strengthens the coordinating role of regulatory agencies: the CLARITY Act would clarify how the SEC and CFTC divide responsibility for different asset types, and the Anti-CBDC Act would prevent the issuance of a central bank digital currency in the U.S.

2. EU AMLA: Crypto Assets Identified as Primary Money Laundering Risk

- The newly established European anti-money laundering regulatory agency, AMLA, has identified crypto assets as one of the primary money laundering risks in the EU, pointing out that the cross-border exchange of stablecoins poses regulatory arbitrage and systemic risks.

- AMLA will begin direct supervision in 2028, applying consistent standards to about 40 large financial and crypto institutions to prevent cross-border risk contagion.

3. UK: Regulatory Lag but Shows "Latecomer Advantage"

- The head of Coinbase UK stated that the UK has not yet established mature regulations but has the opportunity to design a more reasonable system by learning from mature jurisdictions (such as the EU's MiCA and U.S. legislation) to attract institutional participation.

- Although the market expects faster progress, the UK's legislation is moving relatively slowly, which risks losing market opportunities.

4. Pakistan Establishes National-Level Digital Asset Regulatory Authority

- The Pakistani government formed the Pakistan Virtual Assets Regulatory Authority (PVARA) in July, responsible for licensing virtual asset service providers and setting industry standards. Earlier, in March, the government established the Pakistan Crypto Council (PCC), led by the Ministry of Finance, with Binance co-founder Changpeng Zhao serving as an advisor.

- This move marks Pakistan's shift from regulatory absence to actively planning regulatory frameworks, aiming to support the rapidly growing digital asset market.

5. Turkey Strengthens Trading Regulations, Requires Platforms to Collect Funding Source and Usage Data

- The Turkish Ministry of Finance proposed new regulations imposing stricter KYC/AML controls on crypto trading platforms, including requirements to record the source and intended use of transaction funds and caps on cross-border stablecoin transfers.
