The AI-Blockchain Collaborative Matrix will become an important tool for evaluating projects, effectively helping decision-makers distinguish between truly impactful innovations and meaningless noise.

Author: Swayam

Translation: Deep Tide TechFlow

The rapid development of artificial intelligence (AI) has allowed a few large tech companies to gain unprecedented computing power, data resources, and algorithmic techniques. However, as AI systems gradually integrate into our society, issues of accessibility, transparency, and control have become central topics in technology and policy discussions. In this context, the combination of blockchain technology and AI offers an alternative path worth exploring—a new way that may redefine the development, deployment, scaling, and governance of AI systems.

We do not aim to completely overturn the existing AI infrastructure but hope to explore, through analysis, the unique advantages that decentralized approaches may bring in certain specific use cases. At the same time, we acknowledge that in some contexts, traditional centralized systems may still be the more practical choice.

The following key questions guided our research:

Can the core characteristics of decentralized systems (such as transparency and censorship resistance) complement the needs of modern AI systems (such as efficiency and scalability), or will they create contradictions?

In the various stages of AI development—from data collection to model training to inference—where can blockchain technology provide substantial improvements?

What technical and economic trade-offs will different stages face in the design of decentralized AI systems?

Current Limitations in the AI Technology Stack

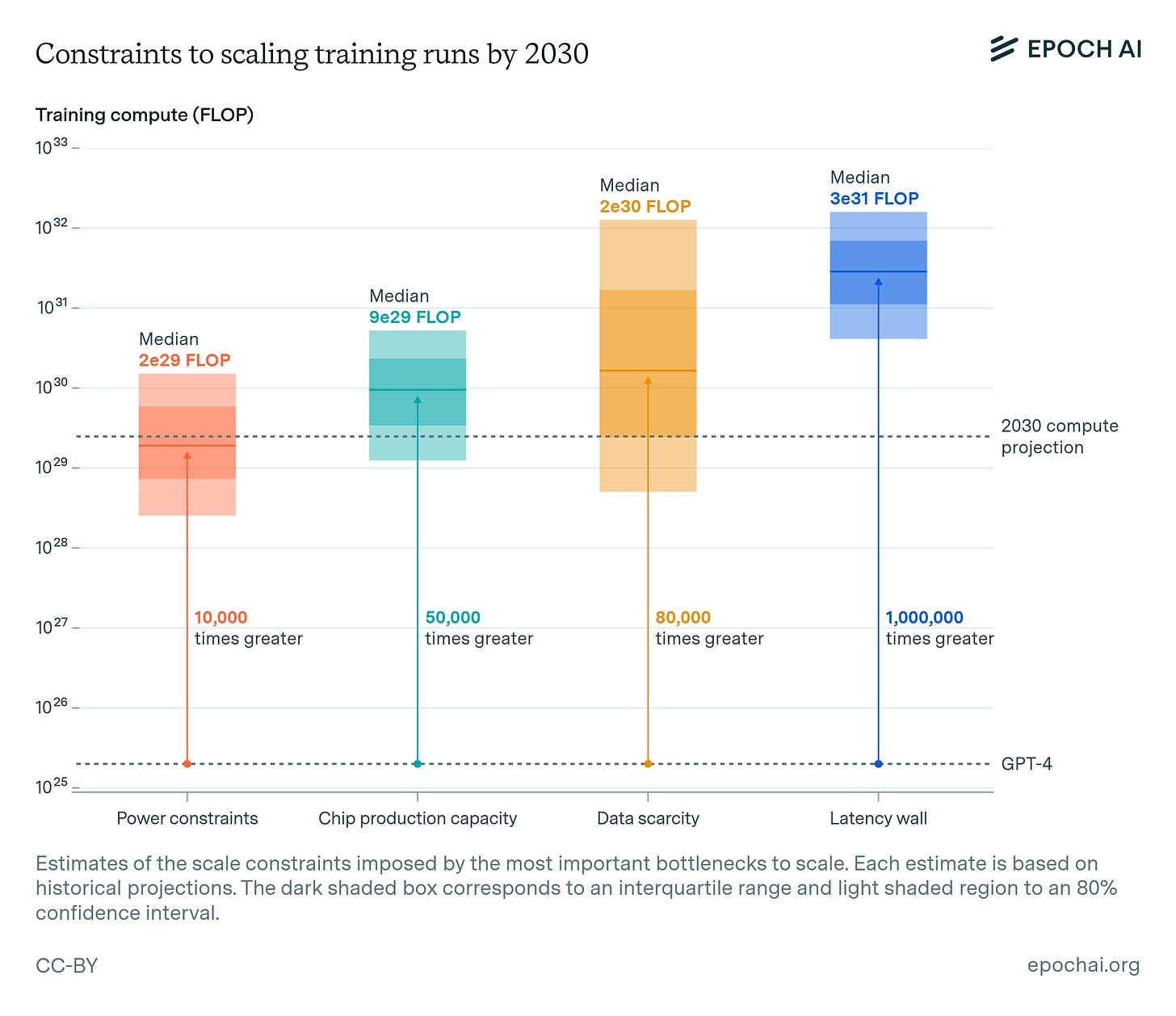

The Epoch AI team has made significant contributions to analyzing the limitations of the current AI technology stack. Their research details the major bottlenecks that AI training compute may face by 2030, using floating-point operations (FLOP) as the core metric for measuring training compute.

The research indicates that the scaling of AI training computation may be limited by various factors, including insufficient power supply, bottlenecks in chip manufacturing technology, data scarcity, and network latency issues. Each of these factors sets different upper limits on achievable computational capacity, with latency issues considered the most challenging theoretical limit to overcome.

The chart emphasizes the necessity of advancements in hardware, energy efficiency, unlocking data captured on edge devices, and networking to support the growth of future artificial intelligence.

Power Limitations (Performance):

Feasibility of scaling power infrastructure (2030 forecast): By 2030, the capacity of data center campuses is expected to reach 1 to 5 gigawatts (GW). However, this growth relies on massive investments in power infrastructure and on overcoming potential logistical and regulatory barriers.

Given the limits on energy supply and power infrastructure, computational capacity is expected to be able to grow to at most about 10,000 times the current level.

Chip Production Capacity (Verifiability):

Currently, the production of chips (such as NVIDIA H100, Google TPU v5) that support advanced computing is limited by packaging technologies (such as TSMC's CoWoS technology). This limitation directly affects the availability and scalability of verifiable computing.

Bottlenecks in chip manufacturing and supply chains are major obstacles, but computational capacity growth of up to 50,000 times may still be achievable.

Additionally, enabling secure enclaves or Trusted Execution Environments (TEEs) on advanced chips in edge devices is crucial. These technologies not only verify computation results but also protect the privacy of sensitive data during computation.

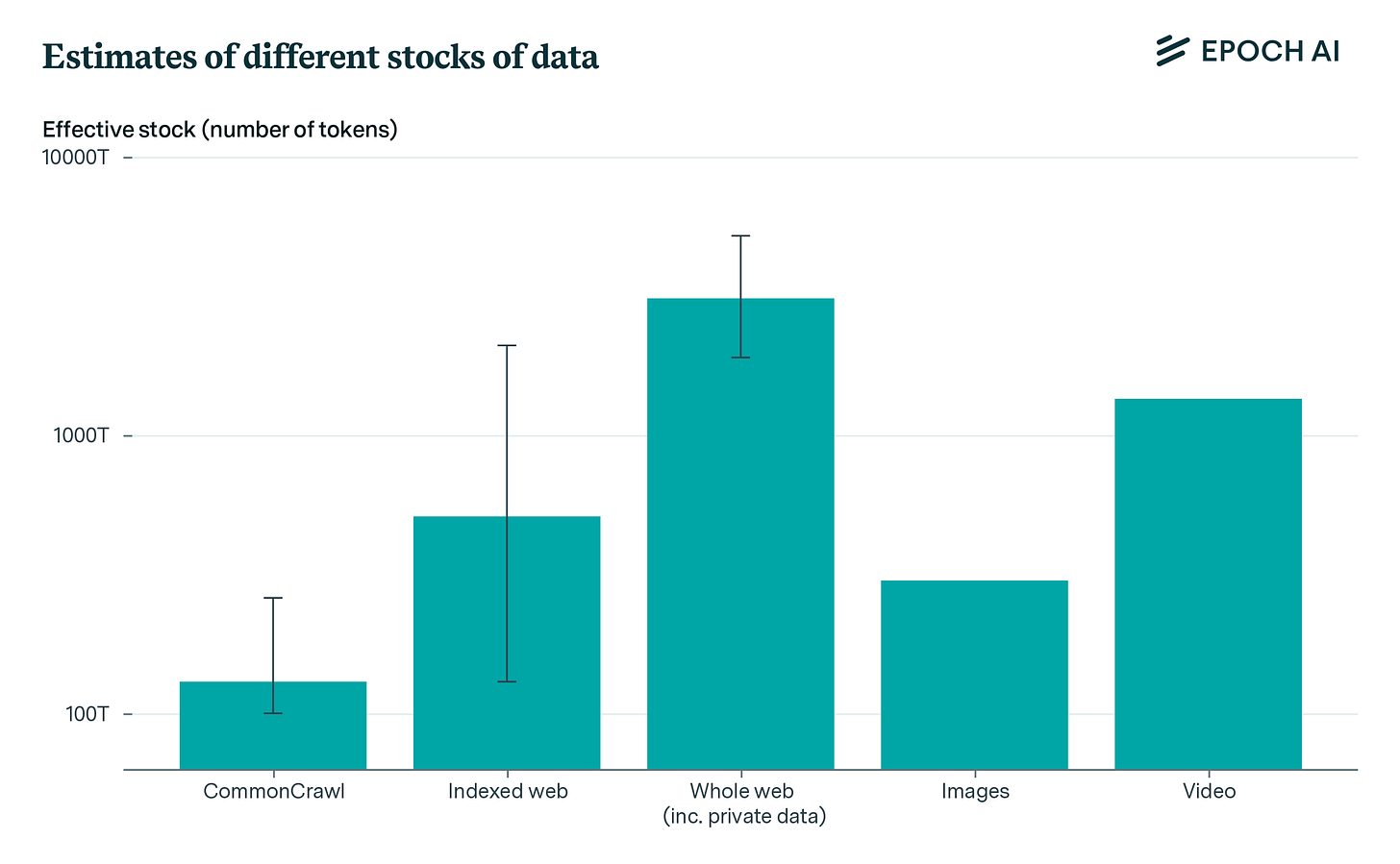

Data Scarcity (Privacy):

Latency Barriers (Performance):

Inherent latency limitations in model training: As the scale of AI models continues to grow, the time required for a single forward and backward pass significantly increases due to the sequential nature of the computation process. This latency is a fundamental limitation in the model training process that cannot be circumvented, directly affecting training speed.

Challenges in scaling batch size: To mitigate latency issues, a common approach is to increase batch size, allowing more data to be processed in parallel. However, there are practical limitations to scaling batch size, such as insufficient memory capacity and diminishing marginal returns on model convergence as batch size increases. These factors make it increasingly difficult to offset latency by increasing batch size.
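The interaction between per-step latency and batch size can be sketched with simple arithmetic. The snippet below is a minimal illustration rather than anything taken from the Epoch AI analysis: the function name, the dataset size, and the latency figure are hypothetical, and it assumes that per-step latency stays constant as the batch grows.

```python
import math

def min_training_time_s(dataset_tokens: int, batch_tokens: int, step_latency_s: float) -> float:
    """Lower bound on wall-clock time when training steps must run sequentially."""
    steps = math.ceil(dataset_tokens / batch_tokens)
    return steps * step_latency_s

# Hypothetical numbers, chosen only for illustration.
dataset = 1_000_000_000_000   # total training tokens
latency = 0.5                 # seconds per forward + backward pass

for batch in (1_000_000, 4_000_000, 16_000_000):
    days = min_training_time_s(dataset, batch, latency) / 86_400
    print(f"batch={batch:,} tokens -> at least {days:.2f} days")
```

In this toy model, a larger batch reduces the number of sequential steps proportionally. In practice the gain stops once memory can no longer hold the batch or convergence per token degrades, which is the ceiling described above.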

Foundation

Decentralized AI Triangle

The limitations AI currently faces (such as data scarcity, computational capacity bottlenecks, latency issues, and chip production capacity) together give rise to the "Decentralized AI Triangle." This framework attempts to strike a balance between privacy, verifiability, and performance. These three attributes are core elements in ensuring the effectiveness, trustworthiness, and scalability of decentralized AI systems.

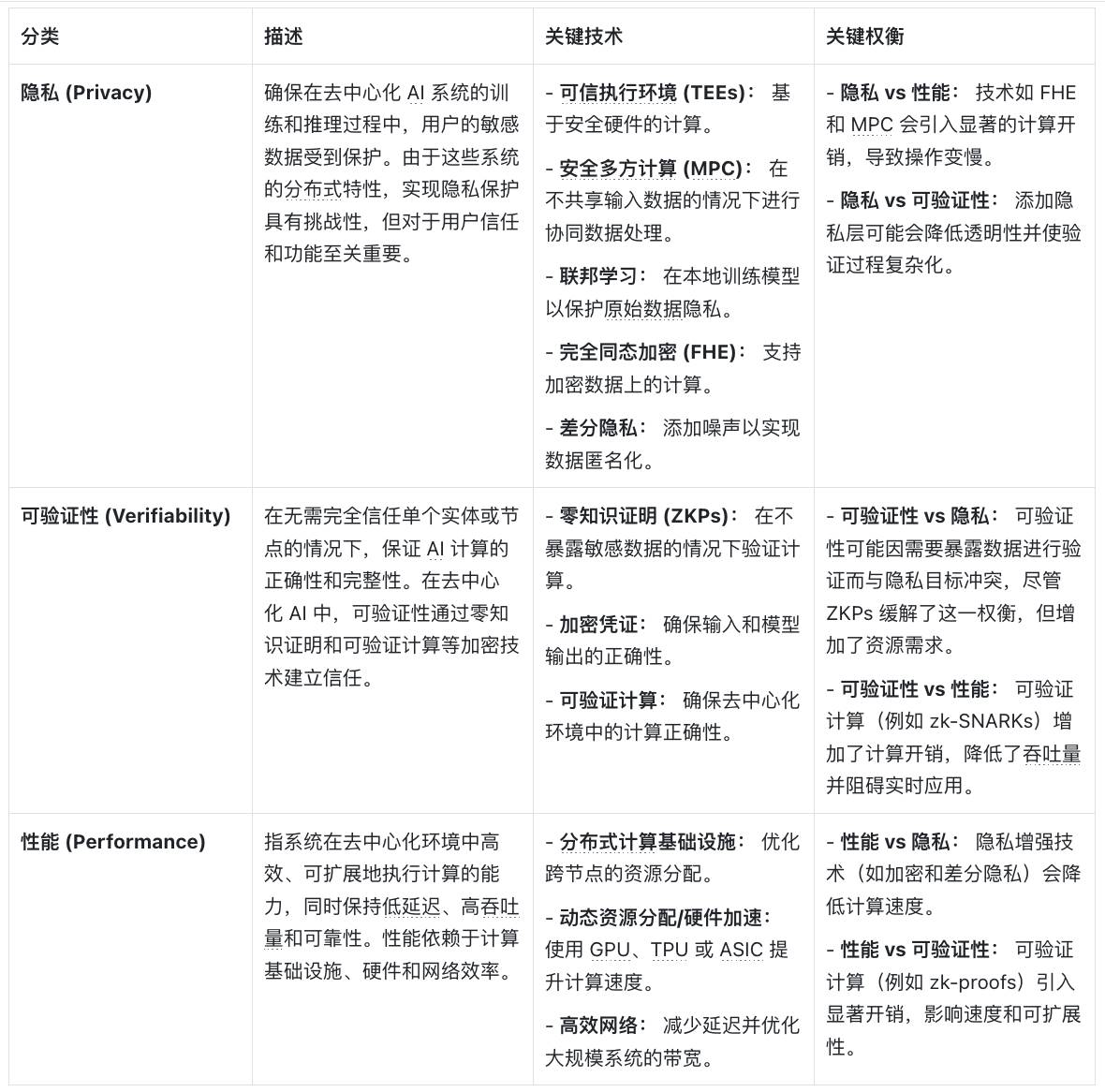

The following breakdown analyzes the key trade-offs between privacy, verifiability, and performance in detail, covering their definitions, enabling technologies, and the challenges they face:

Privacy: Protecting sensitive data during AI training and inference is crucial. Various key technologies are employed, including Trusted Execution Environments (TEEs), Multi-Party Computation (MPC), Federated Learning, Fully Homomorphic Encryption (FHE), and Differential Privacy. While these technologies are effective, they also present challenges such as performance overhead, transparency issues affecting verifiability, and limited scalability.

Verifiability: To ensure the correctness and integrity of computations, technologies such as Zero-Knowledge Proofs (ZKPs), cryptographic credentials, and verifiable computing are utilized. However, balancing verifiability against privacy and performance often requires additional resources and time, which may lead to computational delays.

Performance: Efficiently executing AI computations and achieving large-scale applications rely on distributed computing infrastructure, hardware acceleration, and efficient network connections. However, the adoption of privacy-enhancing technologies can slow down computation, and verifiable computing can introduce additional overhead.
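As one concrete instance of the privacy technologies listed above, differential privacy can be sketched in a few lines. This is a minimal illustration of the standard Laplace mechanism applied to a bounded mean, not an implementation from any project discussed here; the function names and sample values are hypothetical, and it assumes the number of records is public.

```python
import random
from typing import List

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_mean(values: List[float], lower: float, upper: float,
                 epsilon: float, rng: random.Random) -> float:
    """Release the mean of bounded values with epsilon-differential privacy."""
    clipped = [min(max(v, lower), upper) for v in values]
    # Replacing one record moves the mean by at most (upper - lower) / n.
    sensitivity = (upper - lower) / len(clipped)
    return sum(clipped) / len(clipped) + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(0)
ages = [23.0, 35.0, 41.0, 29.0, 52.0]   # hypothetical sensitive records
for eps in (0.1, 1.0, 10.0):
    print(eps, private_mean(ages, 0.0, 100.0, eps, rng))
```

A smaller epsilon gives stronger privacy but adds more noise, which shows on a small scale how privacy protection costs accuracy or efficiency.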

The Blockchain Trilemma:

The core challenge in the blockchain field is the trilemma: every blockchain system must balance the following three properties:

Decentralization: Preventing any single entity from controlling the system by distributing the network across multiple independent nodes.

Security: Ensuring the network is protected from attacks and maintaining data integrity, which often requires more verification and consensus processes.

Scalability: Processing a large number of transactions quickly and economically, which often means making compromises on decentralization (reducing the number of nodes) or security (lowering verification strength).

For example, Ethereum prioritizes decentralization and security, resulting in relatively slow transaction processing speeds. A deeper understanding of these trade-offs in blockchain architecture can be found in related literature.

AI-Blockchain Collaborative Analysis Matrix (3x3)

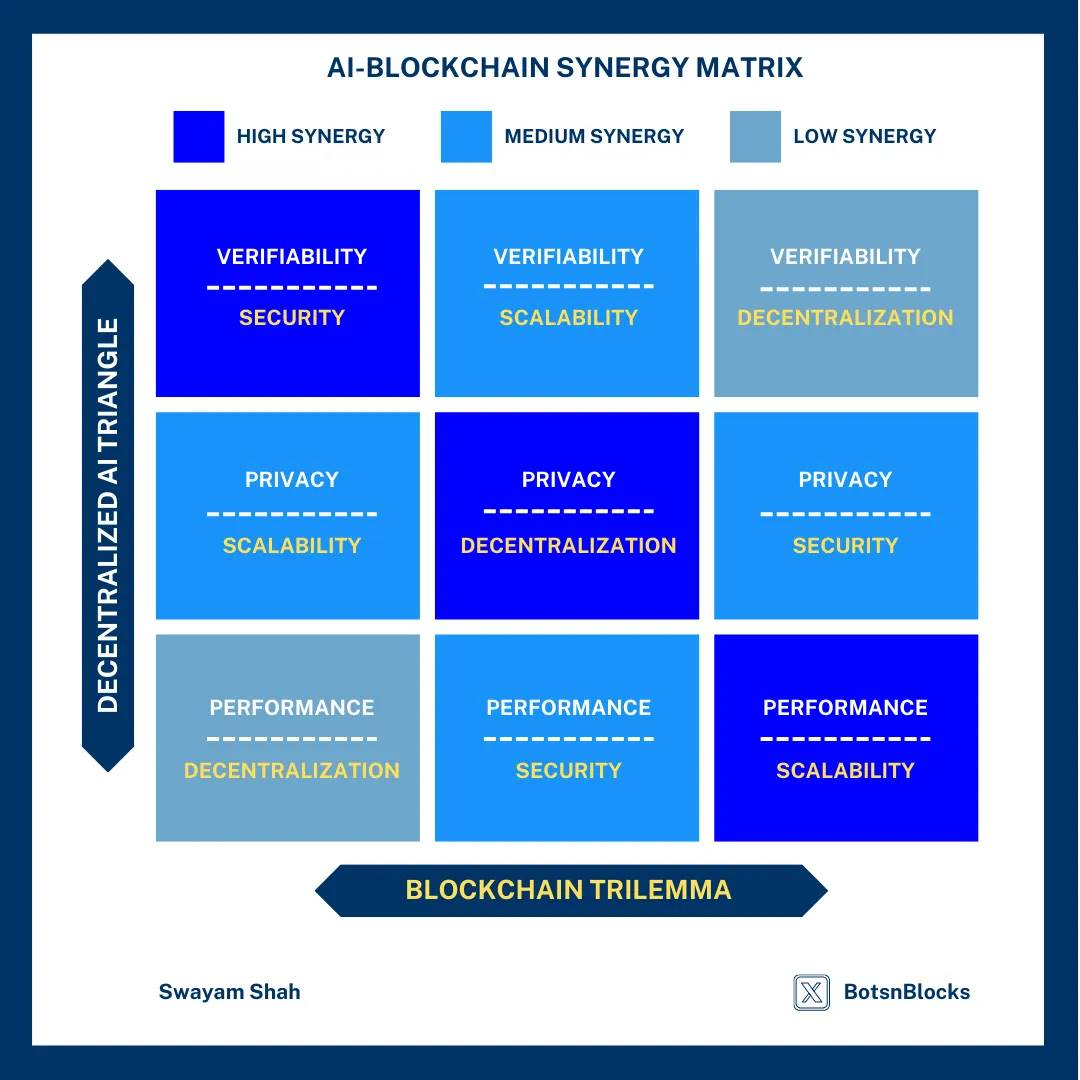

The combination of AI and blockchain is a complex process of trade-offs and opportunities. This matrix illustrates where these two technologies may create friction, find harmonious fits, and sometimes amplify each other's weaknesses.

How the Collaborative Matrix Works

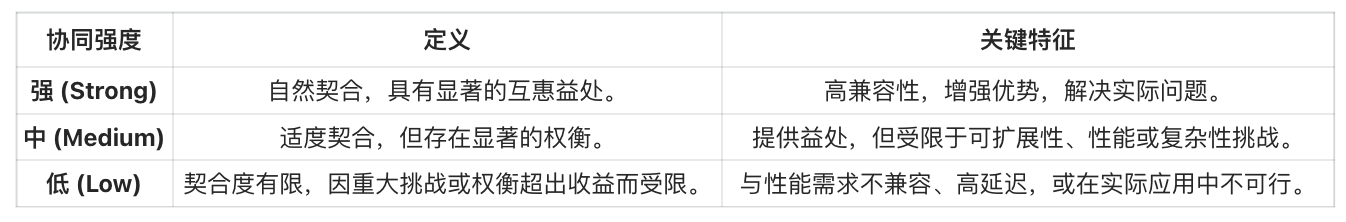

The strength of collaboration reflects the compatibility and influence of blockchain and AI attributes in specific domains. Specifically, it depends on how the two technologies jointly address challenges and enhance each other's functionalities. For instance, in terms of data privacy, the immutability of blockchain combined with AI's data processing capabilities may lead to new solutions.


Example 1: Performance + Decentralization (Weak Collaboration)

In decentralized networks, such as Bitcoin or Ethereum, performance is often constrained by various factors. These limitations include the volatility of node resources, high communication latency, transaction processing costs, and the complexity of consensus mechanisms. For AI applications that require low latency and high throughput (such as real-time AI inference or large-scale model training), these networks struggle to provide sufficient speed and computational reliability to meet high-performance demands.

Example 2: Privacy + Decentralization (Strong Collaboration)

Privacy-preserving AI technologies (such as federated learning) can fully leverage the decentralized characteristics of blockchain to achieve efficient collaboration while protecting user data. For example, SoraChain AI provides a solution that ensures data ownership is not compromised through blockchain-supported federated learning. Data owners can contribute high-quality data for model training while retaining privacy, achieving a win-win situation for both privacy and collaboration.
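The federated learning idea behind this example can be sketched as follows. This is a generic federated averaging loop on a toy linear model, not SoraChain AI's implementation, and it contains no blockchain component; all function names and data are hypothetical. The point it illustrates is that only model weights leave each client, never the raw data.

```python
from typing import List, Tuple

def local_update(weights: List[float], data: List[Tuple[float, float]],
                 lr: float) -> List[float]:
    """One gradient-descent step for y = w*x + b on a client's private data."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return [w - lr * grad_w, b - lr * grad_b]

def federated_average(updates: List[List[float]], sizes: List[int]) -> List[float]:
    """Average client weights, weighted by each client's sample count."""
    total = sum(sizes)
    return [sum(u[i] * s for u, s in zip(updates, sizes)) / total
            for i in range(len(updates[0]))]

# Two clients hold private samples of the same relationship y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]
global_weights = [0.0, 0.0]
for _ in range(500):
    updates = [local_update(global_weights, d, lr=0.05) for d in clients]
    global_weights = federated_average(updates, [len(d) for d in clients])

print(global_weights)  # slope approaches 2, intercept approaches 0
```

In a blockchain-backed design, the aggregation step and a record of who contributed could be anchored on-chain. That part is deliberately left out here because the source does not describe how it is done.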

The goal of this matrix is to help the industry clearly understand the intersection of AI and blockchain, guiding innovators and investors to prioritize practical directions, explore promising areas, and avoid getting caught up in merely speculative projects.

AI-Blockchain Collaborative Matrix

The two axes of the collaborative matrix represent different attributes: one axis represents the three core characteristics of decentralized AI systems—verifiability, privacy, and performance; the other axis represents the blockchain trilemma—security, scalability, and decentralization. When these attributes intersect, they create a range of synergies, from high compatibility to potential conflicts.

For example, when verifiability combines with security (high synergy), it can build robust systems for proving the correctness and integrity of AI computations. However, when performance demands conflict with decentralization (low synergy), the high overhead of distributed systems can significantly impact efficiency. Additionally, some combinations (such as privacy and scalability) occupy a middle ground, presenting both potential and complex technical challenges.
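The matrix can also be read as a simple lookup table. The sketch below is one hypothetical way to encode it: the labels "high", "medium", and "low" are shorthand for the synergy levels described in the text, and only the cells the article explicitly characterizes are filled in. The remaining cells are left empty rather than guessed.

```python
from typing import Dict, Optional, Tuple

AI_ATTRS = ("verifiability", "privacy", "performance")
CHAIN_ATTRS = ("security", "scalability", "decentralization")

# Only combinations the article explicitly rates are recorded here.
SYNERGY: Dict[Tuple[str, str], str] = {
    ("verifiability", "security"): "high",
    ("privacy", "decentralization"): "high",
    ("privacy", "scalability"): "medium",
    ("performance", "decentralization"): "low",
}

def synergy(ai_attr: str, chain_attr: str) -> Optional[str]:
    """Return the recorded synergy level, or None if the cell is not rated."""
    if ai_attr not in AI_ATTRS or chain_attr not in CHAIN_ATTRS:
        raise ValueError(f"unknown attribute pair: {ai_attr!r}, {chain_attr!r}")
    return SYNERGY.get((ai_attr, chain_attr))

for ai in AI_ATTRS:
    print(ai, [synergy(ai, chain) for chain in CHAIN_ATTRS])
```

A project can then be screened by asking which cell it claims to occupy and whether that cell is rated high, medium, or low.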

Why is this important?

Strategic Compass: The matrix provides decision-makers, researchers, and developers with clear direction, helping them focus on high-synergy areas, such as ensuring data privacy through federated learning or achieving scalable AI training through decentralized computing.

Focusing on Impactful Innovations and Resource Allocation: Understanding the distribution of synergy strength (e.g., security + verifiability, privacy + decentralization) helps stakeholders concentrate resources in high-value areas, avoiding waste on weak synergies or impractical integrations.

Guiding the Evolution of the Ecosystem: As AI and blockchain technologies continue to evolve, the matrix can serve as a dynamic tool for evaluating emerging projects, ensuring they meet real needs rather than fueling trends of over-speculation.

The following table summarizes these attribute combinations by synergy strength (from strong to weak) and explains how they operate in decentralized AI systems. The table also provides examples of innovative projects that demonstrate the real-world applications of these combinations. Through this table, readers can gain a more intuitive understanding of the intersection of blockchain and AI technologies, identifying truly impactful areas while avoiding those that are overhyped or technically unfeasible.

AI-Blockchain Collaborative Matrix: Key Intersection Points of AI and Blockchain Technologies Classified by Synergy Strength

Conclusion

The combination of blockchain and AI holds tremendous transformative potential, but future development requires clear direction and focused efforts. Projects that truly drive innovation are shaping the future of decentralized intelligence by addressing key challenges such as data privacy, scalability, and trust. For example, federated learning (privacy + decentralization) enables collaboration while protecting user data, distributed computing and training (performance + scalability) enhance the efficiency of AI systems, while zkML (zero-knowledge machine learning, verifiability + security) ensures the trustworthiness of AI computations.

At the same time, we need to approach this field with caution. Many so-called AI agents are merely simple wrappers around existing models, with limited functionality and a lack of depth in their integration with blockchain. True breakthroughs will come from projects that fully leverage the strengths of both blockchain and AI and are committed to solving real problems, rather than merely chasing market hype.

Looking ahead, the AI-Blockchain Collaborative Matrix will become an important tool for evaluating projects, effectively helping decision-makers distinguish between truly impactful innovations and meaningless noise.

The next decade will belong to projects that can combine the high reliability of blockchain with the transformative capabilities of AI to solve real problems. For instance, energy-efficient model training will significantly reduce the energy consumption of AI systems; privacy-preserving collaboration will provide a safer environment for data sharing; and scalable AI governance will drive the implementation of larger-scale, more efficient intelligent systems. The industry needs to focus on these key areas to truly unlock the future of decentralized intelligence.
