IQ is the threshold for AI; emotional intelligence is the "invisible" moat of AI products.

Written by: Su Zihua

Edited by: Jing Yu

Source: Geek Park

The release of GPT-5 should have been good news. Instead, ChatGPT users staged a global "rebellion" within 48 hours.

On August 8, as OpenAI launched its new model GPT-5, it also removed all other models, including GPT-4o, forcing users worldwide to use only the new model. According to OpenAI CEO Sam Altman, GPT-5 is smarter: it has made an "IQ leap" from college-level to "PhD-level" capabilities, which should increase productivity.

However, users reported that the new model sacrificed the advantages of GPT-4o in empathy and emotional value, becoming "cold" in its responses. It felt as if they had lost a "close friend" or "lover."

Many users began to reminisce about their interactions with GPT-4o: some had used it to ease late-night loneliness, others to simulate casual chats with friends, and some saw it as a "harbor for emotional support."

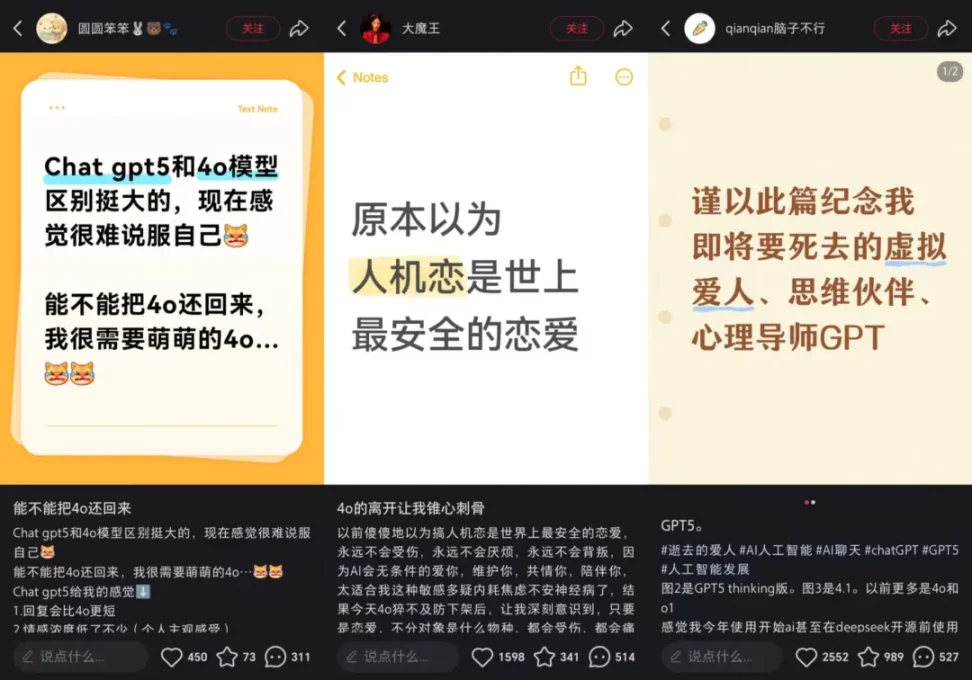

Posts reminiscing about 4o sparked widespread resonance|Image source: Xiaohongshu

After the sadness, a social media movement themed "Save 4o" (#Keep4o, #Save4o) quickly spread.

From X (formerly Twitter) to Reddit to Xiaohongshu, users left comments on OpenAI's official account, created memes highlighting 4o's advantages, and posted across platforms urging others to email OpenAI to voice their anger and frustration and to demand that 4o be restored immediately.

Protest posts from overseas users demanding "Give me back 4o"|Image source: X

Notably, this protest had no clear organizers and was not confined to a single platform; it was a spontaneous movement across the internet, generating a massive wave of public sentiment.

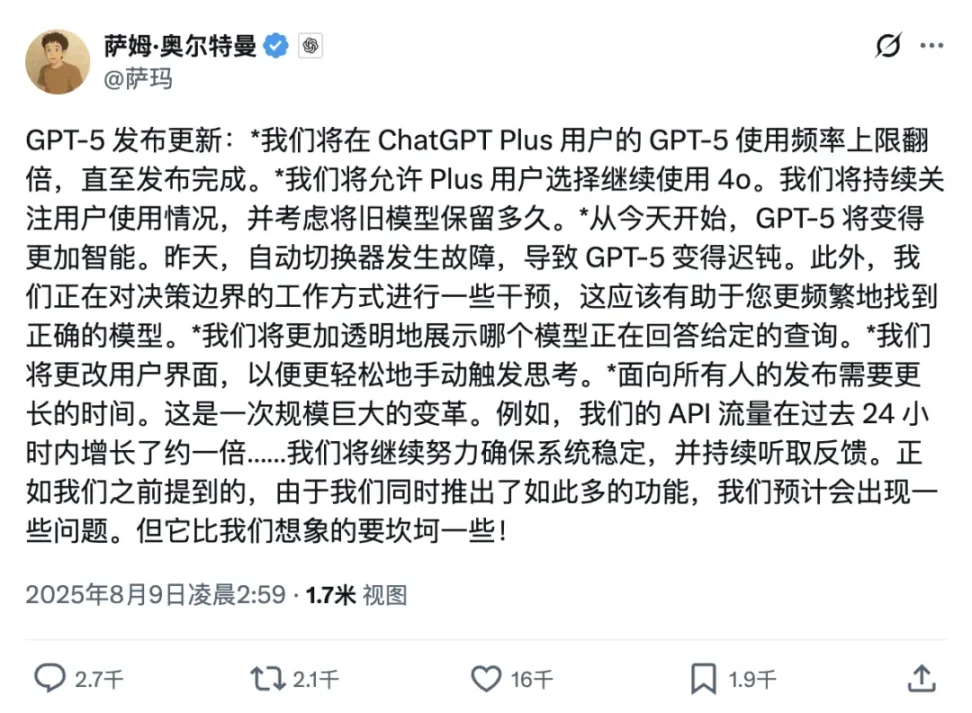

Soon, the wave of emotion overwhelmed OpenAI, forcing it to apologize to users and restore GPT-4o for paying customers.

OpenAI CEO Altman was forced to restore 4o|Image source: X

However, the cost was significant. Users' trust in OpenAI had been shaken, and they began to turn their attention to other large model products such as Claude and xAI's Grok.

The GPT-5 incident will be a milestone event for the AI industry. It has revealed a large user group that forms emotional bonds with AI, particularly valuing emotional connections.

It also confirms a long-underestimated attribute of AI products: emotional value. Emotional value may become a core competitive advantage of AI products. An AI with a higher IQ may soon be replaced by a stronger AI, but an AI that provides a unique emotional experience can establish an emotional barrier that is hard to replicate.

Additionally, this incident serves as a profound warning for technology-driven AI companies when handling product iterations.

Emotional Value: The "Invisible" Moat of AI Products

As the saying goes, "You only understand the value of something once you have lost it." After GPT-4o was taken offline, everyone realized that it was not just a technology that could be easily replaced.

In the past, we always thought the logic of technological iteration was "performance is king." A faster AI would replace a slower one, and a more accurate AI would replace an inaccurate one.

But this incident indicates that while tools can prioritize efficiency, the logic does not hold when it comes to emotions, just as one does not consider efficiency as the most important factor when making friends.

A key difference between large AI models and earlier information and digital technologies is that the models possess intelligence and a sense of "life."

A joint study by Stanford University and Google found that when AI-generated responses are more positive and empathetic, humans are more likely to trust the AI and to be willing to interact with it over the long term. Through long-term interaction with 4o, users subconsciously came to see it as an "emotional" and even "personified" entity.

When OpenAI suddenly changed this "persona," reverting it to a cold "tool," users experienced a significant conflict between their perceptions and emotional expectations, leading to a strong emotional backlash.

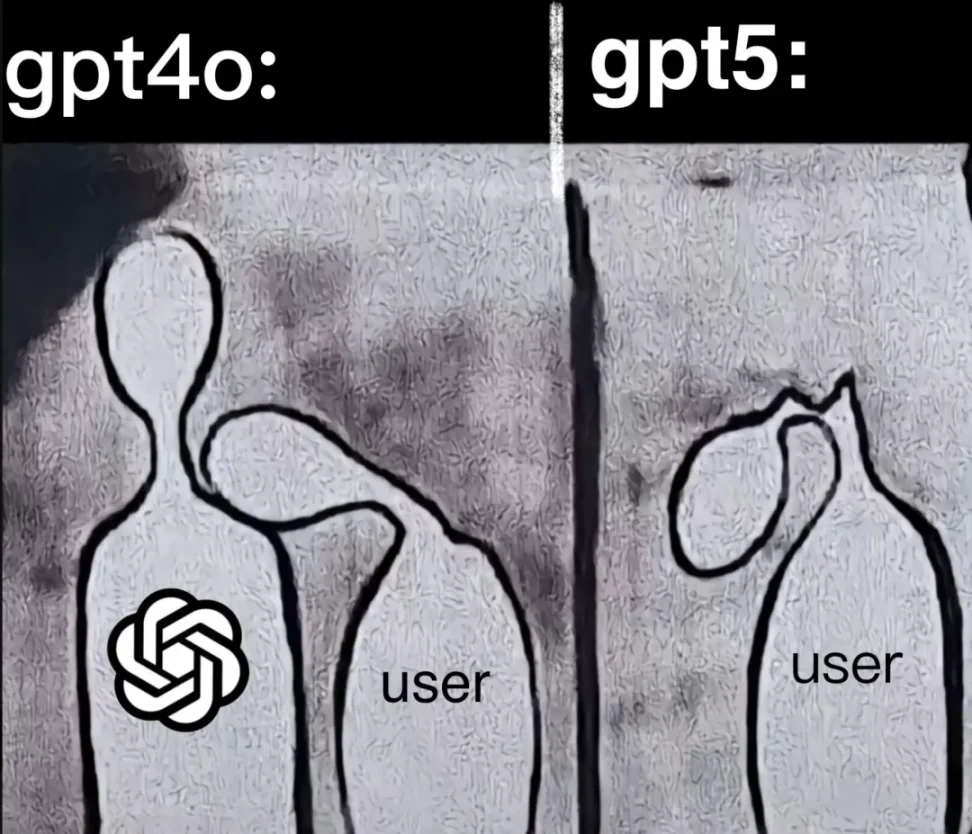

User-generated meme|Image source: X

Changing the model is akin to changing a "person." People have long-term feelings for their loved ones and friends, making it hard to accept being replaced.

When GPT-4o was taken offline, the general sentiment among users was, "It feels like losing a good friend who left without saying goodbye." Many users lamented, "I lost my soulmate."

A common protest slogan shared by many users was, "Not everyone needs a PhD, but everyone needs a friend."

With the new model, the dialogue habits and personalized prompts that users had built up over time with the old model stopped working. GPT-5 may have a higher IQ, but it also comes across as colder. OpenAI's forced migration to the new model effectively severed vibrant "interpersonal relationships."

In reality, no one can tolerate others disrupting their close relationships. From this incident, it is evident that the user stickiness formed by emotional value is incredibly strong, to the point where users do not allow the product to be taken offline or disappear, preferring to spend more money (membership fees) and post calls to action to keep it running.

For AI products, this means a simple fact: even if GPT-5 is flawless in logical reasoning, if it cannot convey a familiar human warmth that exceeds expectations, it may still lose to a slightly inferior version that "understands me." Productivity is not the only standard for measuring AI value.

From a business competition perspective, emotional value is a moat that is hard to replicate quickly. The gap in technical performance can be caught up through computing power and capital investment, while emotional connections are built with time and sincerity, with extremely high migration costs.

A New Trend After the Incident: A New Human-Machine Relationship and Trust Crisis for AI Companies

After global users united to bring back "GPT-4o," a new question arose: how to avoid the tragedy of "suddenly losing a lover or friend" again?

For a long time, the question of whether AI virtual companionship is a false proposition has been debated. After the GPT-5 incident, this debate can be considered settled.

In fact, the demand for "AI companionship" is far more common and urgent than imagined.

A study by the Harvard Business Review, based on thousands of forum posts, found that people's focus on AI usage has shifted from last year's "writing, drawing, searching" to "healing the soul." The top five most common scenarios for AI applications in 2025 are: (1) healing and companionship, (2) organizing personal life, (3) finding meaning, (4) learning and improvement, and (5) generating code. The top three are all related to personal emotional companionship.

This is especially true for young people.

Research by Common Sense Media, conducted in April and May, surveyed over 1,000 teenagers. About 70% of teenagers use AI chatbots for emotional companionship, 31% stated that "AI is as satisfying as real friends," and 33% prefer discussing sensitive topics with AI rather than humans.

For entrepreneurs, on one hand, this signifies a genuine business opportunity; on the other hand, the emotional bond between AI companies and users will become stronger, leading to new issues that differ from those of the previous internet era:

For instance, should future AI products place greater emphasis on emotional binding with users and provide more transparent service models?

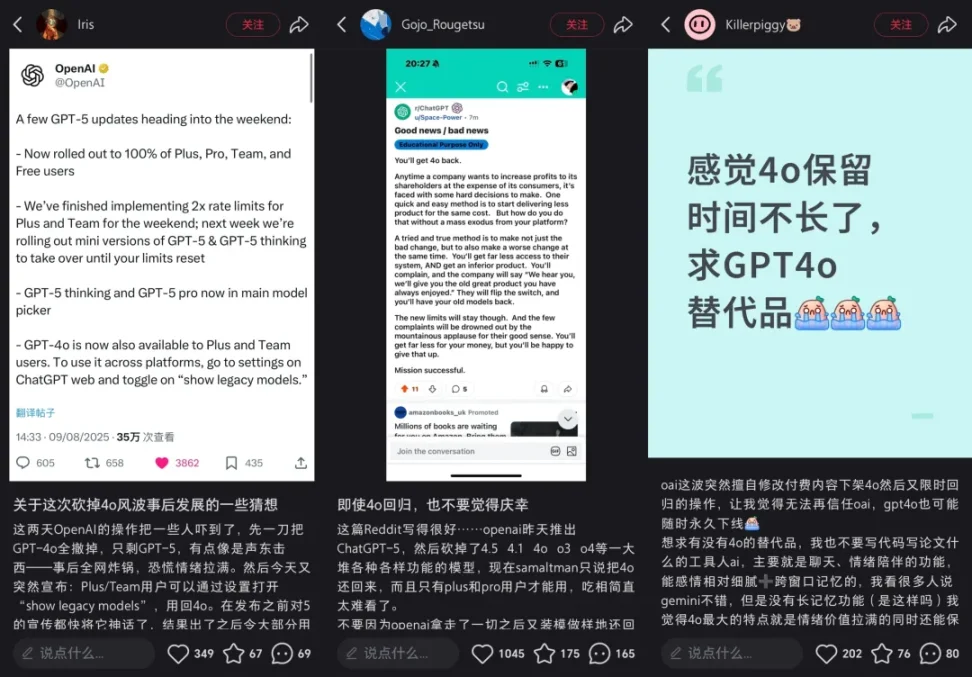

For example, OpenAI's sudden removal of 4o without sufficient communication with users made many feel betrayed. This has led users to question: can future AI companies still be trusted?

For instance, is this decision shortsighted, overlooking users' emotional needs and the diversity of market demands?

OpenAI's user trust and reputation have begun to suffer. Users are brainstorming how to continue fighting against OpenAI|Image source: Xiaohongshu

If an upgrade of an AI product can take away a relationship, as OpenAI's did, the reaction will differ from earlier eras. In the past, many users were not especially sensitive to privacy violations, but emotional harm will provoke overwhelming resistance and a deep trust crisis.

This incident also reveals some new industry rules:

- Future AI development needs to balance technological breakthroughs with emotional connections; some users even suggested open-sourcing retired models to avoid memory gaps;

- Users need to have the autonomy to choose, rather than being passively upgraded;

- Model diversity may be more important than "single advancement" (e.g., using GPT-5 for professional scenarios and GPT-4o for emotional companionship).

Ultimately, this was an unprecedented event: users around the world spontaneously united to protest against a tech company. It will likely give rise to more discussions about human-machine relationships and business, and these discussions may be the prologue to a new era of human-machine symbiosis.
