Bluefin's practice validates BitsLab's core argument: trustworthy Web3 security must be "evidence-based" (RAG), "layered" (multi-level review), and "deeply structured" (static analysis + AI collaboration).

Author: BitsLab

As Web3 protocols grow more complex, Move, a language designed for asset security, is becoming harder to audit because public data and research on it are scarce. To address this, BitsLab has built a multi-layer AI security tool called "BitsLab AI". It combines expert-curated domain data, RAG (Retrieval-Augmented Generation), multi-level automated review, and a dedicated cluster of AI agents operating on deterministic static analysis to provide deep automated support for audits.

In the public audit of Bluefin's perpetual contract DEX, BitsLab AI identified four issues, including a high-risk logical flaw, which the Bluefin team has since fixed.

1) Why AI is Needed Now: The Shift in On-Chain Security Paradigms

The paradigm of on-chain security and digital asset protection is undergoing a fundamental transformation. With significant advancements in foundation models, today's large language models (LLMs) and AI agents possess a preliminary yet powerful form of intelligence. Given a clearly defined context, these models can autonomously analyze smart contract code to identify potential vulnerabilities. This has driven the rapid adoption of AI-assisted tools, such as conversational user interfaces (UIs) and integrated agent IDEs, which are gradually becoming a standard part of the workflow for smart contract auditors and Web3 security researchers.

However, despite the promise of this first wave of AI integration, it remains constrained by critical limitations that keep it short of the reliability required in high-risk blockchain environments:

Shallow and Human-Dependent Audits: Current tools act as "co-pilots" rather than autonomous auditors. They lack the ability to understand the overall architecture of complex protocols and rely on continuous human guidance. This prevents them from performing the deep automated analysis necessary to ensure the security of interconnected smart contracts.

High Noise-to-Signal Ratio Due to Hallucinations: The reasoning process of general LLMs is plagued by "hallucinations." In security scenarios, this means generating a large number of false positives and redundant alerts, forcing auditors to waste valuable time debunking fictitious vulnerabilities instead of addressing real on-chain threats that could have catastrophic consequences.

Insufficient Understanding of Domain-Specific Language: The performance of LLMs directly depends on their training data. For specialized languages like Move, designed specifically for asset security, the scarcity of public resources documenting complex codebases and known vulnerabilities leads to a superficial understanding of Move's unique security model, including its core principles of resource ownership and memory management.

2) BitsLab's AI Security Framework (Reliability for Scale)

Given the critical flaws of general-purpose AI, our framework adopts a multi-layered, security-first architecture. It is not a single model but an integrated system where each component is designed to address specific challenges in smart contract auditing, from data integrity to deep automated analysis.

1. Foundation Layer: Expert-Curated Domain-Specific Datasets

The predictive capability of any AI is rooted in its data. Our framework's exceptional performance begins with our unique knowledge base, fundamentally different from the general datasets used to train public LLMs. Our advantages lie in:

Extensive Coverage of Niche Domains: We possess a large and specialized dataset, meticulously collected, focusing on high-risk areas such as DeFi lending, NFT markets, and Move-based protocols. This provides unparalleled contextual depth for domain-specific vulnerabilities.

Expert Curation and Cleaning: Our dataset is not merely scraped but continuously cleaned, validated, and enriched by smart contract security experts. This process includes annotating known vulnerabilities, marking secure coding patterns, and filtering out irrelevant noise, creating a high-fidelity "ground truth" for the model to learn from. This human-machine collaborative curation ensures our AI learns from the highest-quality data, significantly enhancing its accuracy.

2. Accuracy: Eliminating Hallucinations with RAG and Multi-Level Review

To address the critical issues of hallucinations and false positives, we have implemented a two-part system that keeps AI reasoning grounded in verifiable facts:

Retrieval-Augmented Generation (RAG): Our AI does not solely rely on its internal knowledge but continuously queries a real-time knowledge base before drawing conclusions. This RAG system retrieves the latest vulnerability research, established security best practices (e.g., SWC registry, EIP standards), and code examples from similar successfully audited protocols. This forces the AI to "cite its sources," ensuring its conclusions are based on existing facts rather than probabilistic guesses.

Multi-Level Review Model: Every potential issue identified by generative AI undergoes a rigorous internal validation process. This process includes an automated review mechanism composed of a series of specialized models: cross-referencing models compare findings with RAG data, fine-tuned "auditor" models assess technical validity, and finally, "priority" models evaluate potential business impact. Through this process, low-confidence conclusions and hallucinations are systematically filtered out before reaching human auditors.
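The review stages described above can be pictured as a chain of filters followed by a ranking step. The following is a minimal sketch under stated assumptions, not BitsLab's implementation: the `Finding` fields, the 0.7 threshold, the knowledge-base ids, and the sample findings are all hypothetical, and simple predicates stand in for the specialized models.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    title: str
    evidence: list          # ids of retrieved knowledge-base entries (hypothetical)
    technical_score: float  # stand-in for the "auditor" model's confidence, 0..1
    impact: str             # stand-in for the "priority" model's grade

IMPACT_ORDER = {"high": 0, "medium": 1, "low": 2}

def cross_reference(finding):
    # Stage 1: keep only findings backed by at least one retrieved source.
    return len(finding.evidence) > 0

def auditor_review(finding, threshold=0.7):
    # Stage 2: keep only findings judged technically valid (assumed threshold).
    return finding.technical_score >= threshold

def review(findings):
    # Stage 3: rank what survives by business impact, most severe first.
    kept = [f for f in findings if cross_reference(f) and auditor_review(f)]
    return sorted(kept, key=lambda f: IMPACT_ORDER[f.impact])

# Made-up candidate findings, for illustration only.
candidates = [
    Finding("Unchecked admin setter", ["kb-003"], 0.75, "medium"),
    Finding("Reentrancy in transfer", [], 0.80, "high"),      # no evidence: dropped
    Finding("Sign comparison error in lt", ["kb-017"], 0.92, "high"),
    Finding("Gas inefficiency", ["kb-044"], 0.30, "low"),     # low score: dropped
]

reviewed = [f.title for f in review(candidates)]
print(reviewed)
```

In this sketch an uncited finding never reaches the ranking stage, however confident the generator was, which is the property the "cite its sources" requirement is meant to enforce.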

3. Depth: Achieving Deep Automation through Static Analysis and AI Agent Collaboration

To go deeper than simple "co-pilot" tools and deliver context-aware automation, we adopt a collaborative approach that combines deterministic analysis with intelligent agents:

Static Analysis as the Foundation: Our process begins with comprehensive static analysis traversals to deterministically map the entire protocol. This generates a complete control flow graph, identifies all state variables, and tracks function dependencies across contracts. This mapping provides our AI with a foundational, objective "worldview."

Context Management: The framework maintains a rich and holistic context of the entire protocol. It understands not only individual functions but also how they interact with each other. This key capability allows it to analyze the chain effects of state changes and identify complex cross-contract interaction vulnerabilities.

AI Agent Collaboration: We deploy a set of specialized AI agents, each trained for specific tasks. The "Access Control Agent" specifically searches for privilege escalation vulnerabilities; the "Reentrancy Agent" focuses on detecting unsafe external calls; and the "Arithmetic Logic Agent" carefully examines all mathematical operations to capture boundary cases such as overflows or precision errors. These agents work collaboratively based on a shared contextual mapping, capable of discovering complex attack vectors that a single, monolithic AI might miss.

This powerful combination enables our framework to automate the discovery of deep architectural flaws, truly acting as an autonomous security partner.
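One way to picture the shared "worldview" is a call graph produced by static analysis that every agent reads from. The sketch below is illustrative only: the function names in `CALL_GRAPH` (other than `lt`, which appears later in this article) are hypothetical and not taken from any real codebase, and the "agent" is a plain function standing in for a model.

```python
from collections import deque

# Hypothetical call graph: caller -> list of callees.
CALL_GRAPH = {
    "open_position": ["compute_pnl", "check_margin"],
    "compute_pnl": ["lt"],
    "check_margin": ["lt"],
    "liquidate": ["check_margin"],
    "lt": [],
}

def reachable(graph, start):
    # Breadth-first search over callees: everything `start` can end up calling.
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for callee in graph.get(node, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen

def callers_of(graph, target):
    # Every function whose behaviour depends, directly or transitively, on `target`.
    return sorted(fn for fn in graph if target in reachable(graph, fn))

def arithmetic_logic_agent(graph, helpers=("lt",)):
    # Stand-in agent: report the blast radius of each comparison helper.
    return {h: callers_of(graph, h) for h in helpers if h in graph}

report = arithmetic_logic_agent(CALL_GRAPH)
print(report)
# {'lt': ['check_margin', 'compute_pnl', 'liquidate', 'open_position']}
```

Because the graph is computed deterministically, an agent that suspects a low-level helper can see at once which higher-level operations inherit the problem, rather than guessing at dependencies from the source text.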

3) Case Study: Uncovering Critical Logical Flaws in Bluefin PerpDEX

To validate the multi-layer architecture of our framework in a real-world scenario, we applied it to the public security audit of Bluefin, a complex decentralized exchange for perpetual contracts. This audit demonstrated how static analysis, specialized AI agents, and RAG-based fact verification together can identify vulnerabilities that traditional tools could not detect.

Analysis Process: Operation of the Multi-Agent System

The discovery of this high-risk vulnerability was not a singular event but the result of systematic collaboration among the various integrated components of the framework:

Context Mapping and Static Analysis

The process began by ingesting Bluefin's complete codebase. Our static analysis engine deterministically mapped the entire protocol and, together with foundational analysis AI agents, produced an overview of the project and pinpointed the modules that implement core financial logic.

Deployment of Specialized Agents

Based on the preliminary analysis, the system automatically deployed a series of specialized, phase-specific agents, each with its own auditing prompts and vector database. In this case, the issue was identified by an agent focused on logical correctness and boundary-case vulnerabilities (such as overflows, underflows, and comparison errors).

RAG-Based Analysis and Review

The Arithmetic Logic Agent began executing the analysis. Utilizing Retrieval-Augmented Generation (RAG), it queried our expert-curated knowledge base, referencing best practices in the Move language, and compared Bluefin's code with documented similar logical flaws in other financial protocols. This retrieval process highlighted that the comparison of positive and negative numbers was a typical boundary error case.

Discovery: High-Risk Vulnerability in Core Financial Logic

Through our framework, we ultimately identified four distinct issues, one of which was a high-risk logical flaw deeply embedded in the protocol's financial computation engine.

The flaw occurred in the lt (less than) function within the signed_number module. This function is critical for any financial comparison, such as position ranking or profit and loss (PNL) calculations. The vulnerability could lead to severe financial discrepancies, incorrect liquidations, and a failure of the fair ranking mechanism in core DEX operations, directly threatening the integrity of the protocol.

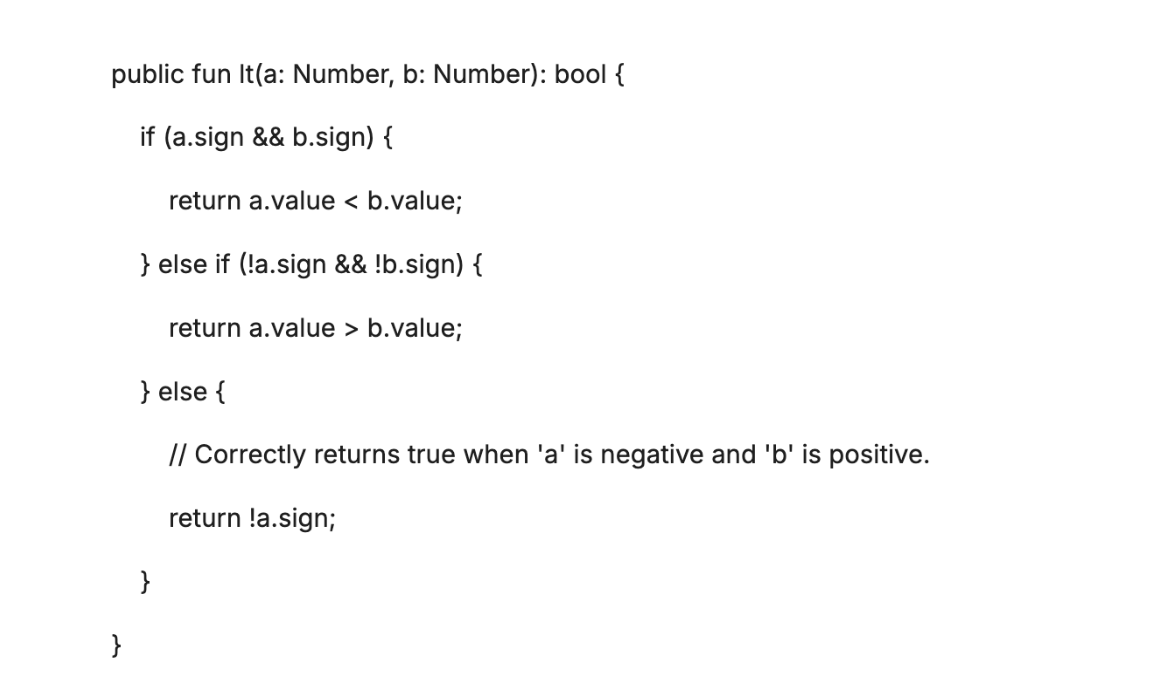

The root of the problem lies in how lt compares numbers with different signs. The signed_number module represents a value with value: u64 and sign: bool (true for positive, false for negative). The defect is in the else branch of lt, which handles operands with different signs: it returns a.sign. When a is negative (!a.sign) and b is positive (b.sign), the function returns false, denying that the negative number is smaller. In the mirror case, where a is positive and b is negative, it returns true, effectively asserting that "a positive number is less than a negative number."
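The behaviour described above can be reproduced with a small Python model. This is a sketch, not the Move source: only the mixed-sign branch (returning `a.sign`) comes from the description in this article, while the same-sign branch and the names `SignedNumber` and `lt_buggy` are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SignedNumber:
    value: int   # magnitude; models Move's u64
    sign: bool   # True = positive, False = negative

def lt_buggy(a, b):
    if a.sign == b.sign:
        # Assumed same-sign handling: compare magnitudes, reversed for negatives.
        return a.value < b.value if a.sign else a.value > b.value
    else:
        # The flaw as described: the result follows a's sign directly.
        return a.sign

neg_five = SignedNumber(5, False)   # represents -5
pos_three = SignedNumber(3, True)   # represents +3

print(lt_buggy(neg_five, pos_three))  # False, although -5 < 3 is true
print(lt_buggy(pos_three, neg_five))  # True, although 3 < -5 is false
```

Both mixed-sign orderings come out inverted, so any ranking or threshold check built on this comparison would be wrong whenever a negative and a positive value meet.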

Fix:

To correct this critical issue, the else branch of the lt function requires a simple yet crucial modification. The fixed implementation must return !a.sign to ensure that negative numbers are always correctly evaluated as less than positive numbers during comparisons.
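The corrected logic can be checked against ordinary integer comparison in a short Python model. As before, this is a sketch under assumptions: the same-sign branch and the names `SignedNumber`, `lt_fixed`, and `to_int` are illustrative, and zero is left out of the samples because the article does not say how the module represents it.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class SignedNumber:
    value: int   # magnitude; models Move's u64
    sign: bool   # True = positive, False = negative

def to_int(n):
    # Reference interpretation used only for checking.
    return n.value if n.sign else -n.value

def lt_fixed(a, b):
    if a.sign == b.sign:
        # Assumed same-sign handling: compare magnitudes, reversed for negatives.
        return a.value < b.value if a.sign else a.value > b.value
    # Different signs: a < b exactly when a is the negative one.
    return not a.sign

# Non-zero samples of both signs.
samples = [SignedNumber(v, s) for v in range(1, 5) for s in (True, False)]

# Collect every pair where the model disagrees with integer comparison.
mismatches = [
    (a, b) for a, b in product(samples, repeat=2)
    if lt_fixed(a, b) != (to_int(a) < to_int(b))
]
print(len(mismatches))  # 0
```

An exhaustive pairwise check like this is cheap for a comparison helper and would have exposed the inverted branch immediately.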

Result: The Bluefin development team was notified with this detailed report and promptly fixed the issue.

4) The Significance of BitsLab AI for Web3 Teams

Reduced False Positive Noise: RAG + multi-level review significantly lowers "hallucinations" and false positives.

Deeper Coverage: Static analysis mapping + agent collaboration captures systemic risks across contracts, boundary conditions, and logical levels.

Business-Oriented Prioritization: Impact grading guides engineering effort, so time is spent on "the most critical issues" first.

5) Conclusion: Security Empowered by BitsLab AI Becomes the New Baseline

The practice of Bluefin validates BitsLab's core argument: trustworthy Web3 security must be "evidence-based" (RAG), "layered" (multi-level review), and "deeply structured" (static analysis + AI collaboration).

This approach is particularly crucial in understanding and verifying the underlying logic of decentralized finance and is a necessary condition for maintaining scalable trust in protocols.

In the rapidly evolving Web3 environment, the complexity of contracts continues to rise; meanwhile, public research and data on Move remain relatively scarce, making "security assurance" more challenging. BitsLab AI was created for this purpose. Through expert-curated domain knowledge, verifiable retrieval-augmented reasoning, and automated analysis focused on global context, it identifies and mitigates risks in Move contracts end to end, giving Web3 security a sustainable source of intelligent support.
