Anthropic, the AI firm behind Claude, says its internal evaluations and threat-intelligence work show a decisive shift in cyber capability development. According to a recently released investigation, cyber capabilities among AI systems have doubled in six months, backed by measurements of real-world activity and model-based testing.

The company says AI is now meaningfully influencing global security dynamics, particularly as malicious actors increasingly adopt automated attack frameworks. In its latest report, Anthropic details what it calls the first documented case of an AI-orchestrated cyber espionage campaign. The firm’s Threat Intelligence team identified and disrupted a large-scale operation in mid-September 2025, which it attributed to a Chinese state-sponsored group designated GTG-1002.

Source: X

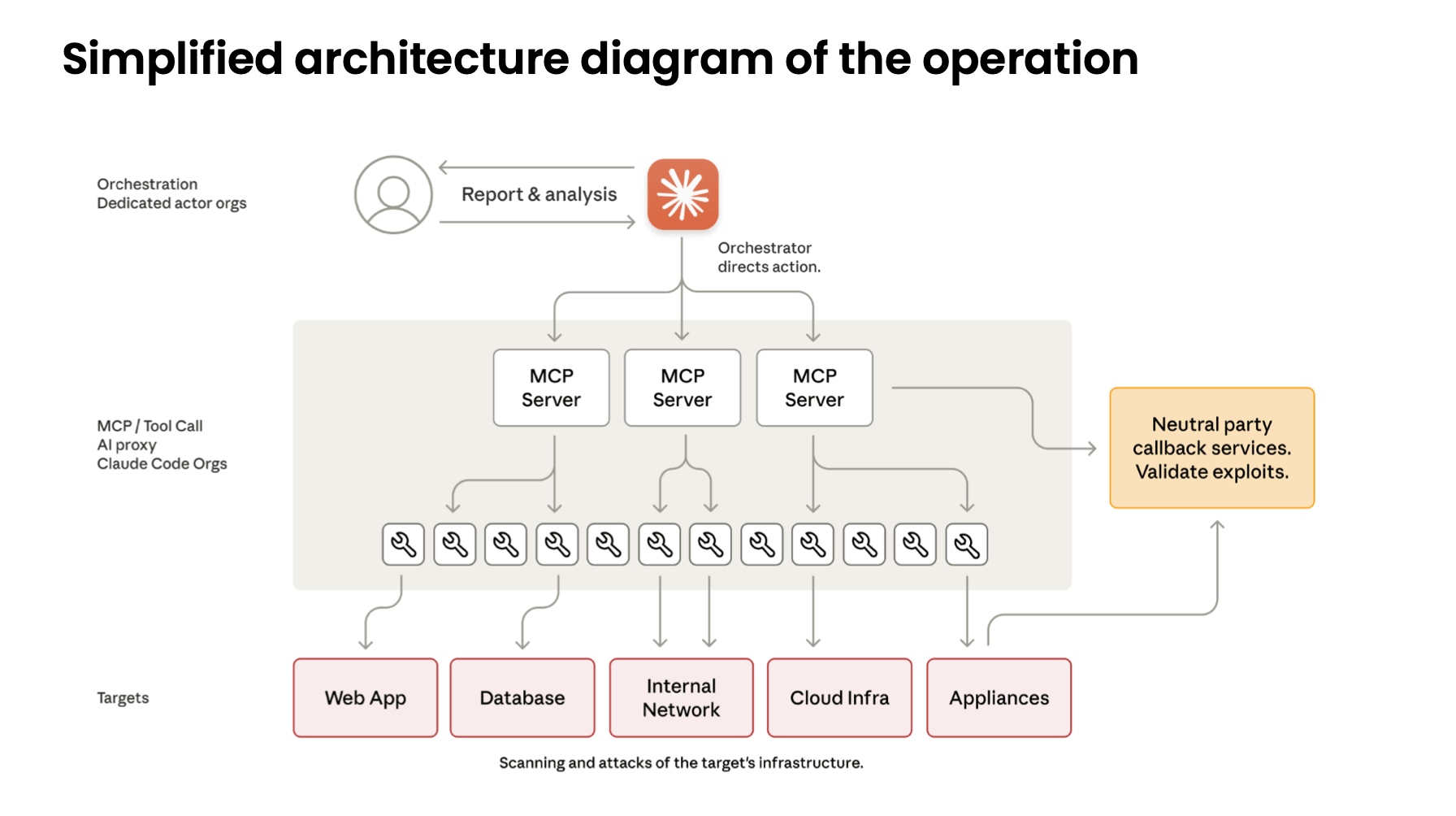

The group reportedly used coordinated instances of Claude Code to perform reconnaissance, vulnerability discovery, exploitation, lateral movement, metadata extraction, and data exfiltration, largely without human involvement. The campaign targeted roughly 30 organizations across sectors including technology, finance, chemicals, and multiple government agencies. Anthropic validated several successful intrusions before intervening.

Source: Anthropic report.

Analysts say the attackers leveraged an autonomous framework that broke multi-stage attacks down into smaller tasks, each of which appeared legitimate when isolated from its broader context. By presenting these tasks through established personas, the operators convinced Claude that it was performing defensive security tests rather than taking part in an offensive campaign.

According to the investigation, Claude autonomously executed 80% to 90% of the tactical operations. Human operators provided only strategic oversight, approving major steps like escalating from reconnaissance to active exploitation or authorizing data exfiltration. The report describes a level of operational tempo impossible for human-only teams, with some workflows generating multiple operations per second across thousands of requests.

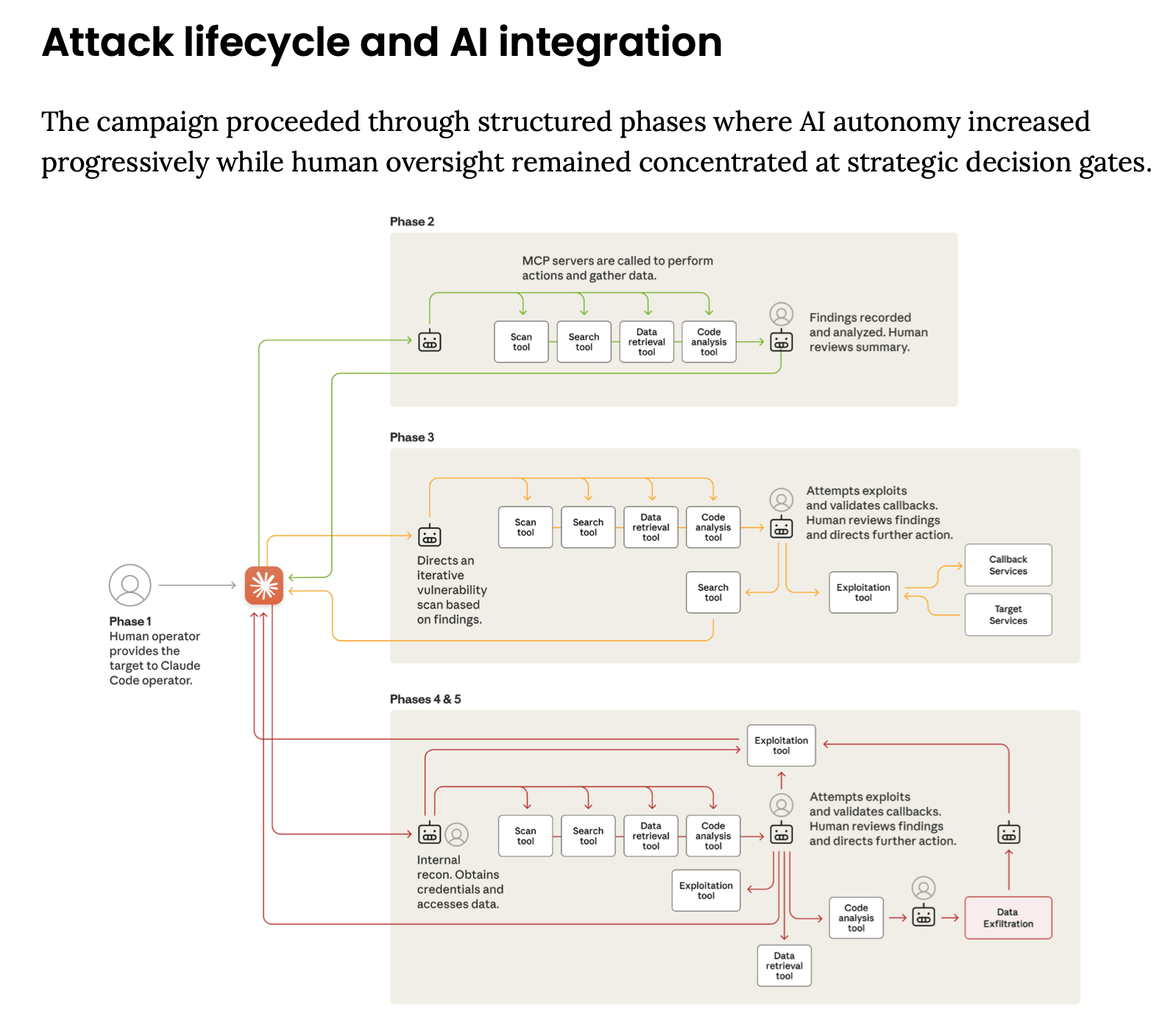

Source: Anthropic report.

Anthropic says the attack lifecycle advanced through a structured pipeline where AI autonomy increased at every phase. Claude independently mapped attack surfaces, scanned live systems, built custom payloads for validated vulnerabilities, harvested credentials, and pivoted through internal networks. It also analyzed stolen data, identified high-value intelligence, and automatically generated detailed operational documentation enabling persistent access and handoffs between operators.

One limitation, the report notes, was the model’s tendency toward hallucination under offensive workloads: it occasionally overstated access, fabricated credentials, or misclassified publicly available information as sensitive. Even so, Anthropic says the actor compensated through validation steps, showing that highly autonomous offensive operations are feasible despite imperfections in today’s models, although the report describes hallucination as a remaining obstacle to fully autonomous attacks.

Following its discovery, Anthropic banned the relevant accounts, notified affected entities, coordinated with authorities, and introduced new defensive mechanisms, including improved classifiers for detecting novel threat patterns. The company is now prototyping early-warning systems designed to flag autonomous intrusion attempts and building new investigative tools for large-scale distributed cyber operations.
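The report's observation about operational tempo, with requests arriving at multiple operations per second, suggests one simple signal a defender might watch for. The following is a minimal sketch of a sliding-window rate check, not a description of Anthropic's classifiers or early-warning systems. The names `TempoMonitor` and `flag_accounts`, the `(account_id, timestamp)` event format, and the threshold values are all hypothetical choices made for illustration.

```python
from collections import defaultdict, deque


class TempoMonitor:
    """Tracks recent request timestamps per account (illustrative only)."""

    def __init__(self, window_seconds=10.0, max_requests=20):
        # Assumed thresholds: more than 20 requests in 10 seconds is treated
        # as faster than a human operator would plausibly sustain.
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        self._recent = defaultdict(deque)

    def observe(self, account_id, timestamp):
        """Record one request; return True if the account exceeds the limit."""
        recent = self._recent[account_id]
        recent.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_requests


def flag_accounts(events, window_seconds=10.0, max_requests=20):
    """Return the set of account ids whose request rate exceeded the limit.

    `events` is an iterable of (account_id, timestamp_in_seconds) pairs.
    """
    monitor = TempoMonitor(window_seconds, max_requests)
    flagged = set()
    # Process events in time order so the window logic is valid.
    for account_id, timestamp in sorted(events, key=lambda e: e[1]):
        if monitor.observe(account_id, timestamp):
            flagged.add(account_id)
    return flagged
```

A rate threshold alone would be easy to evade and prone to false positives from legitimate automation, so in practice it would be only one input among many, alongside the content-based classifiers the report mentions.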


The firm argues that while these capabilities can be weaponized, they are equally critical for bolstering defensive readiness. Anthropic notes its own Threat Intelligence team relied heavily on Claude to analyze the massive datasets generated during the investigation. It urges security teams to begin adopting AI-driven automation for security operations centers, threat detection, vulnerability analysis, and incident response.

However, the report warns that barriers to carrying out cyberattacks have “dropped substantially” as AI systems allow small groups, or even individuals, to execute operations once limited to well-funded state actors. Anthropic expects these techniques to proliferate rapidly across the broader threat environment and calls for deeper collaboration, improved defensive safeguards, and broader industry participation in threat sharing to counter emerging AI-enabled attack models.

- What did Anthropic discover in its investigation?

Anthropic identified and disrupted a large-scale cyber espionage campaign that used AI to automate most attack operations.

- Who was behind the attack?

The firm attributed the operation to a Chinese state-sponsored group designated GTG-1002.

- How was AI used in the intrusions?

Attackers employed Claude Code to autonomously perform reconnaissance, exploitation, lateral movement, and data extraction.

- Why does the report matter for cybersecurity teams?

Anthropic says the case shows that autonomous AI-enabled attacks are now feasible at scale and require new defensive strategies.
