The irony is that students who rely on AI today may, as a result, be less able to use AI well in the future.

Author: Xinxin

The biggest advocates for AI tools driven by large models entering our lives may not be the "workhorses" in office buildings, but rather the students in schools. This is because generating an essay or a short paper with ChatGPT is incredibly easy.

As a result, after the emergence of large models, many teachers' quickest response was to "ban AI" or redefine cheating standards.

However, some people quickly realized that the real danger might not be cheating, but rather that students are fully outsourcing the "learning process" of their brains to AI.

It seems that completing assignments has become easier, and grades have improved. But a troubling question arises: as students increasingly rely on AI for writing, answering questions, summarizing, and thinking, are they really still "learning"?

Or, in the age of AI, do students still need to "learn"?

01 It Looks Like Learning, But It’s Not

Since the launch of ChatGPT at the end of 2022, OpenAI may have to admit that their most loyal user group is students.

Looking back over the past two years, the media once reported that OpenAI's user growth had stagnated, but come September, the number of users surged again. The reason for this fluctuation is simple—students returned to school, and summer vacation ended.

The first way AI has changed education is by making "completing assignments" exceptionally easy: if you don't know how, just ask AI.

A survey shows that by the end of 2024, about 70% of American teenagers had used generative AI tools, with more than half using AI to help complete homework.

There are also many surveys in China indicating the prevalence of AI use in schools. Before DeepSeek emerged, students would directly use local AI tools like Wenxin Yiyan and Doubao.

Writing papers, reading reports, solving math problems—students' assignments seem to be getting increasingly polished, but the question arises—have they really learned?

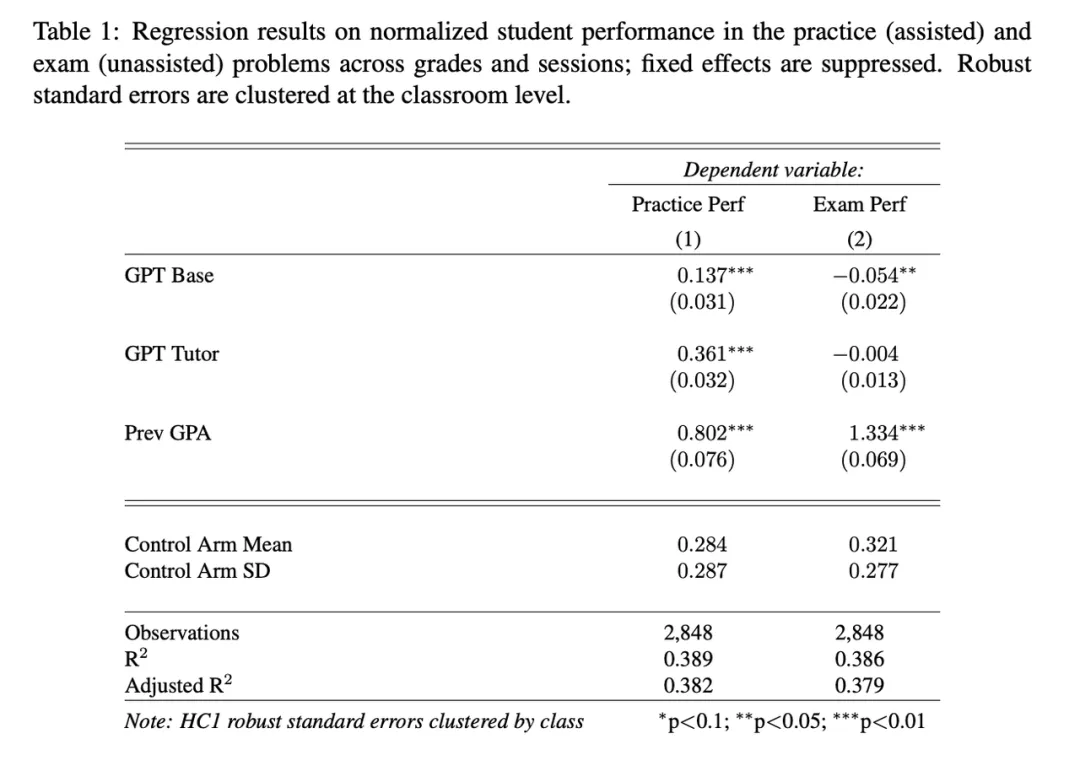

A research team from the University of Pennsylvania conducted a real experiment in 2024:

High school students learning math were divided into three groups: one used a GPT-4-based AI chatbot freely, another used "GPT Tutor" (which provided guidance but no answers), and the last did not use AI at all.

The results showed that during the practice phase, the students with AI access far outperformed those without it.

However, when it came to the final exam, where AI assistance was not allowed, the group that had used AI freely scored an average of 17% lower than the group that did not use AI at all.

Students using "GPT Tutor" performed 127% better than the no-AI group during practice, but their final exam scores were roughly the same as those of students who did not use AI.

Image source: University of Pennsylvania research team paper results

The researchers at the University of Pennsylvania concluded that unrestricted AI has become a "crutch": students relied on chatbots to do the heavy lifting during practice and "did not learn the underlying mathematical concepts deeply enough" to solve similar problems on their own.

They believe that AI provides some support when students do their homework, creating an illusion of knowledge mastery, but ultimately exposes their shortcomings in exams. With AI as a "crutch," their legs become increasingly unsteady.

This has led to the "AI Paradox" in education:

AI makes you look smarter, but in reality, you may learn less.

Image source: University of Pennsylvania research team paper

02 Does AI Diminish Cognitive Abilities?

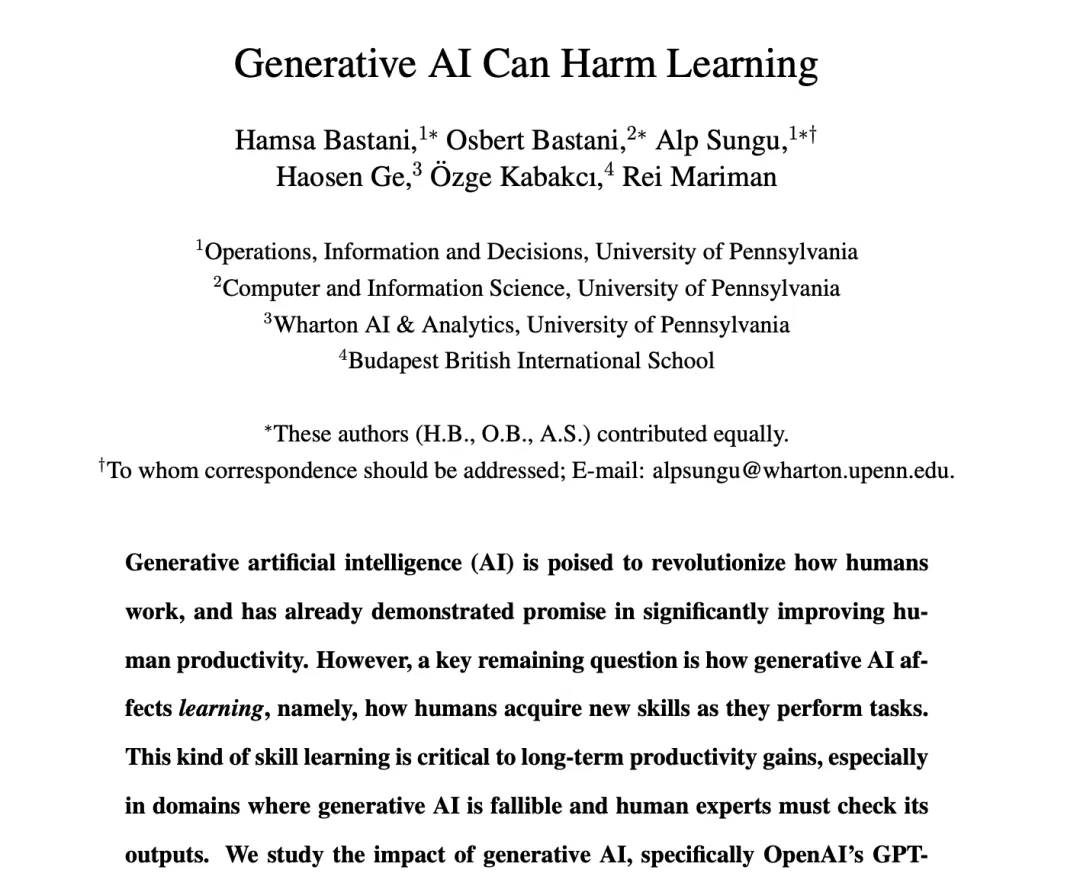

It's not just about solving problems; research on AI's impact on cognitive abilities has increased in the past two years.

Researchers from Carnegie Mellon University and Microsoft released a study this year stating that generative AI tools, if "misused… can lead to a decline in cognitive abilities that should be retained."

A study published in the journal Nature claims that AI may reduce the independent decision-making abilities of students and teachers, making students dependent on technology and less inclined to think for themselves. This could breed laziness and lower the quality of learning.

Cognitive scientists generally say that the essence of learning lies in the new connections the brain forms after struggling repeatedly with a problem. Educators are concerned that AI allows students to skip the "struggle," and with it the growth.

Similarly, a study published in the journal Societies this year states that "frequent use of AI tools is significantly negatively correlated with critical thinking skills, a correlation mediated by increased cognitive offloading. Compared to older participants, younger participants are more dependent on AI tools and score lower in critical thinking."

"Cognitive offloading" refers to delegating cognitive tasks to AI. Researchers noted that this effect is particularly pronounced among young people, while those with higher education levels tend to maintain stronger critical thinking skills regardless of whether they use AI tools.

Of course, it is worth noting that this study emphasizes correlation rather than direct causation.

Image source: Societies Journal

Outside academia, issues related to AI in education have frequently appeared in the news over the past two years. In addition to teachers complaining about students cheating, another common phenomenon is that some teachers have found that students who frequently use AI have soaring grades and excellent papers—but once they step away from AI, everything falls apart.

For example, "an average student uses ChatGPT or DeepSeek to submit a high-scoring paper. But during classroom discussions, they sometimes can't even explain the most basic concepts."

AI's automatic summarization, automatic essay writing, automatic translation, and automatic generation of argumentative frameworks directly replace four important learning actions: reading, understanding, thinking, and expressing.

While some rejoice that AI is a boon for learning, doubling learning efficiency, others worry that excessive reliance is causing a decline in abilities. The Chronicle of Higher Education quoted some undergraduates saying, "I've become lazy; AI has made reading easier, but it has slowly made my brain lose the ability to think critically and understand every word."

"When I do my homework, I can't go ten seconds without ChatGPT. I hate the current version of myself because I know I haven't learned anything, but now I'm too far behind; I can't keep up without using it… my motivation has disappeared."

Complaints also appear on social media. For instance, a user in the Reddit psychology community stated, "The situation is getting worse. I am a teacher at a university of applied sciences, and I have noticed a sharp decline in my students' problem-solving and critical thinking abilities."

Image source: reddit

03 "Never Learned"

Some have raised a concern: AI may leave students in a state of "never having learned," which is more dangerous than "forgetting how to do it."

Technology writer Nicholas Carr wrote a lengthy article titled "The Myth of Automated Learning" in May, expressing the view that when a skill is taken over by machines before you have learned it, you may never learn it.

He described three possible scenarios for task automation: First, if the user is already an expert, AI tools can further enhance their skills; second, if the skill requires constant practice, then AI automation may lead to skill regression; third, if the user is a novice and AI performs the task from the beginning, that person may never truly master the skill.

Education fits perfectly into the third scenario. Nicholas Carr claims that students are in the process of learning new skills and have not yet mastered them. If AI "takes over" the task—whether solving math problems or writing papers—before students gain experience, true skill growth will be hindered.

Image source: substack

Furthermore, a person who rarely "thinks for themselves" may struggle even to write a good prompt in the AI dialogue window, let alone verify and improve the AI's output. These meta-skills depend on the user's underlying understanding of the subject.

Timothy Burke, a history professor at Swarthmore College, stated, "To truly leverage current and near-future AI generation tools in research and expression, you must already know a lot."

"It's like if you don't know what to look for or what a card catalog is, you can't use it to find information; or like when Google search was most useful, if you didn't know how to modify keywords, narrow your search, or pick out useful information from what you found last time to optimize your next search, you wouldn't use it well either."

Education is such a field. Elementary school students are just starting to read, and AI can write their reading reflections; middle school students are just getting into argumentation, and AI can generate well-structured essays with one click; college students are just beginning to do research, and AI can automatically provide outlines, analyses, summaries, and citations.

These skills have been replaced before students had a chance to master them. As a result, it is not that students "forget how to do it" but that they "never learned how to do it," and sometimes they are not even aware that the content they copied is a "hallucination" produced by AI.

For instance, in AI coding, if students skip actual programming learning and always have AI write code for them, when AI outputs errors, they may not have enough programming knowledge to debug or improve AI's output.

Nicholas Carr likens this to a generation of pilots who only know how to use autopilot; they can fly regular flights, but in emergencies that require manual control, they are at a loss.

"We have been focusing on how students use artificial intelligence to cheat. We should be more concerned about how AI betrays students," says Nicholas Carr.

"With generative AI, a student who would normally achieve a B level can produce A-level work, while simultaneously becoming a C-level student."

04 The Paradox of Learning and Education

Traditional education has a basic assumption: if a student can submit a good essay, it means they can write; if they can solve a difficult problem, it indicates they understand the formula; high scores represent knowledge mastery.

AI now seems to have broken this logic. Outside strictly closed-book exams, a well-written essay does not necessarily mean the student can write, and a high score does not equate to actual understanding; the outcome no longer reflects the process.

Now, a perfect assignment might be written by ChatGPT; a logically sound paper could be a draft provided by DeepSeek; a high score might simply be the result of skillful prompt usage. Sometimes, real understanding is not required; knowing how to use the tool suffices, and the learning process is "outsourced" to LLMs.

Campuses around the world have dealt with this issue, from banning AI in classrooms to using AI detection systems like GPTZero and Turnitin, but these measures cannot completely stop it and sometimes "misfire" against diligent students. Some students have even become clever, prompting AI to produce "dumber" outputs just to make assignments "look more like they were written by a student," knowing that many teachers believe "students cannot write this perfectly."

In Asia, China's Ministry of Education released the "Guidelines for the Use of Generative Artificial Intelligence by Primary and Secondary School Students" in May this year, warning against excessive reliance on AI tools. Primary school students are not allowed to use open-ended content generation features on their own, but some auxiliary teaching uses are permitted, with the rules calibrated to each educational stage.

At the university level, Fudan University issued regulations prohibiting AI from participating in six parts of undergraduate theses that involve originality and innovation, allowing its use for auxiliary tasks like literature retrieval and code debugging. Other universities have similar regulations; a professor at Nanjing University even gave a zero score to a student for using AI to summarize "Dream of the Red Chamber."

Japan emphasizes cautious use among younger students, prohibiting the use of AI to complete assignments and warning that, if introduced too early or without safeguards, AI could "stifle students' creativity and motivation to learn."

In Africa, where educational resources are tighter, the entry of AI was initially seen by some educators as an opportunity for "leapfrogging," but concerns also exist. A title from an African education journal posed the question: "ChatGPT—A cheating tool or an opportunity to enhance learning?"

Image source: bizcommunity

As for North America, the initial reaction was also intense. In early 2023, due to concerns about AI misuse, some regions in the U.S. directly blocked ChatGPT on school devices. A survey by the Pew Research Center showed that only 6% of U.S. K-12 teachers believe AI is more beneficial than harmful for education, while a quarter of teachers think AI is more harmful than helpful, with more teachers in a wait-and-see mode, feeling both anxious and confused.

However, this wave cannot be stopped; bans have begun to shift towards guidance. Currently, U.S. universities are starting to collaborate with tech companies like OpenAI and Anthropic to actively introduce "educational AI."

Europe is seeing similar trends, with Estonia distributing AI tools to students and teachers through a national program called "AI Leap." Some British universities have established principles to encourage responsible use of AI, and some schools in England are trialing AI classroom assistants.

This year, even as evidence of AI's disruptive impact on education mounted, OpenAI CEO Sam Altman announced free, limited-time access to ChatGPT Plus for North American students.

Nicholas Carr stated:

"In the eyes of AI companies, students are not learners, but customers."

Image source: X

In response to the challenge AI poses to education, various regions have adopted different strategies, including redesigned assignments, face-to-face defenses, in-class writing, and a return to more paper-and-pencil exams, all meant to ensure they are testing students, not "students + AI."

Some educators have already realized that the crisis is not just rampant cheating, but also the potential collective atrophy of "thinking muscles" under AI assistance. Moreover, skeptics of the transformation may ask this question:

When AI can complete tasks for you, what exactly do students still need to learn?
