Without a work history, how does DeepSeek select its candidates? The answer is, they look for potential.

Author: Sam Gao, Author of ElizaOS

0. Introduction

Recently, the continuous emergence of DeepSeek V3 and R1 has caused a FOMO among AI researchers, entrepreneurs, and investors in the United States. This feast is as astonishing as the launch of ChatGPT at the end of 2022.

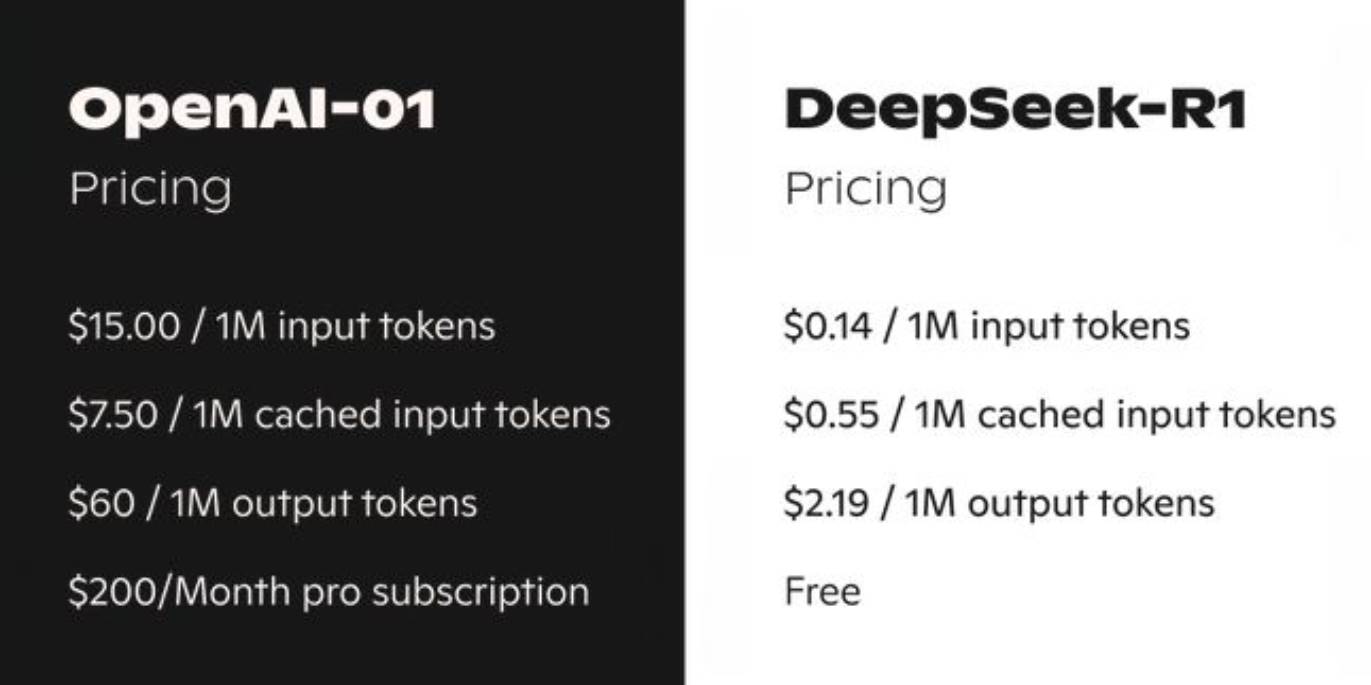

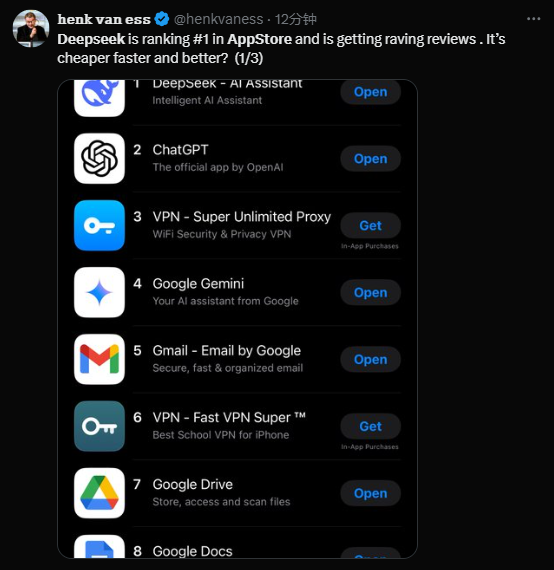

With the complete open-source nature of DeepSeek R1 (the model can be downloaded for local inference from HuggingFace) and its extremely low price (1/100 of OpenAI's o1), DeepSeek quickly rose to the top of the Apple App Store in the U.S. within just five days.

So, where does this mysterious new AI force, incubated by a Chinese quantitative company, come from?

1. The Origin of DeepSeek

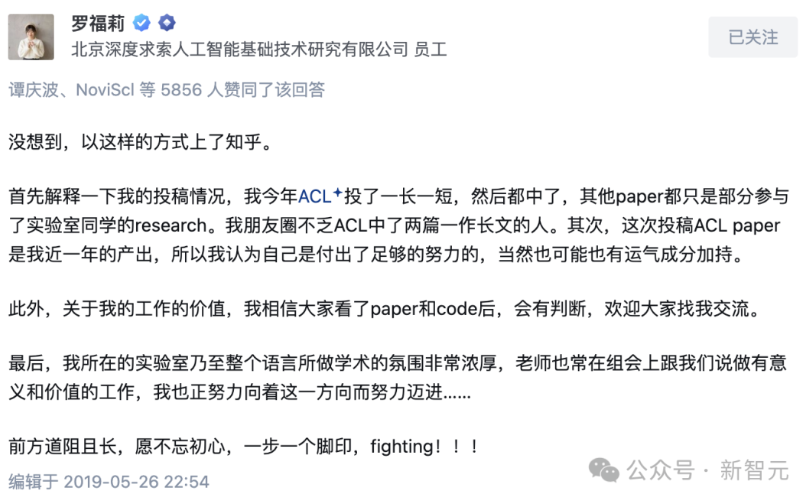

I first heard about DeepSeek in 2021 when a genius girl from the neighboring team at Damo Academy, Luo Fuli, a master's student from Peking University who published eight papers at ACL (the top conference in natural language processing) in a year, left to join Huanshuo Quant (High-Flyer Quant). Everyone was very curious why such a profitable quantitative company would recruit talent from the AI field: Did Huanshuo also need to publish papers?

At that time, as far as I knew, most of the AI researchers recruited by Huanshuo were working independently, exploring some cutting-edge directions, with the core focus being large models (LLM) and text-to-image models (like OpenAI's Dall-e at the time).

Fast forward to the end of 2022, Huanshuo gradually began to attract more and more top AI talent (most of whom were students from Tsinghua and Peking University). Stimulated by ChatGPT, Huanshuo's CEO Liang Wenfeng was determined to enter the field of general artificial intelligence: "We established a new company, starting with language large models, and there will also be visual aspects later."

Yes, this company is DeepSeek, which in early 2023, along with companies like Zhipu, Moonlight, and Baichuan Intelligence, gradually moved to the center stage. In the bustling and prosperous areas of Zhongguancun and Wudaokou, DeepSeek's presence was largely overshadowed by these companies that attracted hot money and captured "attention."

Therefore, in 2023, as a pure research institution without a star founder (like Li Kaifu's Zero One Everything, Yang Zhilin's Moonlight, Wang Xiaochuan's Baichuan Intelligence, etc.), DeepSeek found it difficult to independently raise funds from the market. As a result, Huanshuo decided to spin off DeepSeek and fully fund its development. In this fiery era of 2023, no venture capital firms were willing to provide funding for DeepSeek, partly because most of the team consisted of newly graduated PhDs without well-known top researchers, and partly due to the long wait for capital exit.

In this noisy and restless environment, DeepSeek began to write its own stories in AI exploration:

November 2023, DeepSeek launched DeepSeek LLM, with up to 67 billion parameters, its performance approaching GPT-4.

May 2024, DeepSeek-V2 officially went live.

December 2024, DeepSeek-V3 was released, with benchmark tests showing its performance surpassing Llama 3.1 and Qwen 2.5, while being comparable to GPT-4o and Claude 3.5 Sonnet, igniting industry attention.

January 2025, the first generation of reasoning-capable large model DeepSeek-R1 was released, with a price less than 1/100 of OpenAI o1 and outstanding performance, causing the global tech community to tremble: the world truly realized that Chinese power has arrived… Open source always wins!

2. Talent Strategy

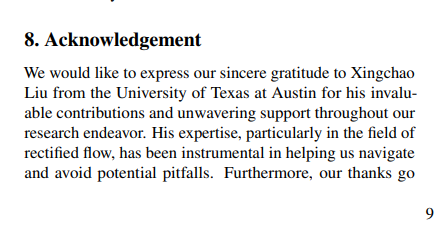

I got to know some DeepSeek researchers early on, mainly those researching AIGC, such as the authors of Janus released in November 2024 and DreamCraft3D, including one who helped me optimize my latest paper @xingchaoliu.

From my observations, most of the researchers I know are very young, primarily current PhD students or those who graduated within the last three years.

Most of these individuals are students pursuing graduate or doctoral studies in the Beijing area, with strong academic achievements: many have published 3-5 papers at top conferences.

I asked a friend at DeepSeek why Liang Wenfeng only recruits young people.

They relayed Liang Wenfeng's words, which are as follows:

The mystery surrounding the DeepSeek team intrigues people: what is its secret weapon? Foreign media say, this secret weapon is "young geniuses," who are capable of competing with financially powerful American giants.

In the AI industry, hiring experienced veterans is the norm, and many local Chinese AI startups prefer to recruit senior researchers or those with overseas PhDs. However, DeepSeek goes against the trend, favoring young people without work experience.

A headhunter who has worked with DeepSeek revealed that DeepSeek does not hire senior technical personnel, stating, "3-5 years of work experience is the maximum; those with over 8 years basically get passed." Liang Wenfeng also mentioned in a May 2023 interview with 36Kr that most of DeepSeek's developers are either fresh graduates or just starting their careers in artificial intelligence. He emphasized: "Most of our core technical positions are held by fresh graduates or those with one or two years of work experience."

Without a work history, how does DeepSeek select its candidates? The answer is, they look for potential.

Liang Wenfeng once said, when doing something long-term, experience is not that important; compared to that, foundational abilities, creativity, and passion are more important. He believes that perhaps the top 50 AI talents in the world are not currently in China, "but we can cultivate such talents ourselves."

This strategy reminds me of OpenAI's early approach. When OpenAI was founded at the end of 2015, Sam Altman's core idea was to find young and ambitious researchers. Therefore, aside from President Greg Brockman and Chief Scientist Ilya Sutskever, the remaining four core founding technical team members (Andrew Karpathy, Durk Kingma, John Schulman, Wojciech Zaremba) were all fresh PhD graduates from Stanford University, the University of Amsterdam, UC Berkeley, and New York University, respectively.

From left to right: Ilya Sutskever (former Chief Scientist), Greg Brockman (former President), Andrej Karpathy (former Technical Lead), Durk Kingma (former Researcher), John Schulman (former Reinforcement Learning Team Lead), and Wojciech Zaremba (current Technical Lead)

This "young wolf strategy" has allowed OpenAI to reap the benefits, nurturing talents like Alec Radford, the father of GPT (equivalent to a private college graduate), Aditya Ramesh, the father of the text-to-image model DALL-E (an NYU undergraduate), and Prafulla Dhariwal, the multimodal lead for GPT-4o and a three-time Olympiad gold medalist. This enabled OpenAI, which initially had an unclear mission to save the world, to carve out a path through the boldness of youth, transforming from an unknown player next to DeepMind into a giant.

Liang Wenfeng recognized this successful strategy of Sam Altman and firmly chose this path. However, unlike OpenAI, which waited seven years to see ChatGPT, Liang Wenfeng's investment yielded results in just over two years, showcasing the speed of China.

3. Speaking for DeepSeek

In the article about DeepSeek R1, its various metrics are impressively excellent. However, it has also raised some doubts: there are two points of concern,

① The expert mixture (MoE) technology it uses has high training requirements and data demands, which raises valid questions about DeepSeek's use of OpenAI data for training.

② DeepSeek employs reinforcement learning (RL) techniques that have high hardware requirements, but compared to Meta and OpenAI's massive clusters, DeepSeek's training only used 2048 H800 GPUs.

Due to the limitations of computing power and the complexity of MoE, the fact that DeepSeek R1 succeeded with just $5 million raises some suspicions. However, regardless of whether you admire R1's "low-cost miracle" or question its "flashy but impractical" nature, one cannot ignore its dazzling functional innovations.

BitMEX co-founder Arthur Hayes expressed in a post: Will the rise of DeepSeek lead global investors to question American exceptionalism? Is the value of American assets severely overestimated?

Stanford University professor Andrew Ng publicly stated at this year's Davos Forum: "I am impressed by DeepSeek's progress. I believe they are able to train models in a very economical way. Their latest released reasoning model is outstanding… 'Keep it up!'"

A16z founder, Marc Andreessen, stated, "DeepSeek R1 is one of the most amazing and impressive breakthroughs I have ever seen—and as open source, it is a profound gift to the world."

In 2023, DeepSeek, standing in the corner of the stage, finally reached the pinnacle of global AI before the Lunar New Year in 2025.

4. Argo and DeepSeek

As a technical developer of Argo and an AIGC researcher, I have DeepSeek-ified the important functions within Argo: as a workflow system, the rough original workflow generates work, and Argo is powered by DeepSeek R1. Additionally, Argo has integrated LLM as the standard DeepSeek R1 and has chosen to abandon the expensive closed-source OpenAI models. The reason is that workflow systems typically involve a large amount of token consumption and contextual information (averaging >=10k tokens), which leads to very high execution costs if using the expensive OpenAI or Claude 3.5 models. This pre-spending expense is detrimental to the product before web3 users truly capture value.

As DeepSeek continues to improve, Argo will collaborate more closely with the Chinese power represented by DeepSeek: including but not limited to the localization of Text2Image/Video interfaces and the localization of LLMs.

In terms of collaboration, Argo will invite DeepSeek researchers to share technical achievements in the future and provide grants for top AI researchers, helping web3 investors and users understand AI advancements.

免责声明:本文章仅代表作者个人观点,不代表本平台的立场和观点。本文章仅供信息分享,不构成对任何人的任何投资建议。用户与作者之间的任何争议,与本平台无关。如网页中刊载的文章或图片涉及侵权,请提供相关的权利证明和身份证明发送邮件到support@aicoin.com,本平台相关工作人员将会进行核查。