This report, written by Tiger Research, analyzes how Intuition is rebuilding web infrastructure for the AI agent era through atomic knowledge structuring, token curated registries (TCRs) for reaching consensus on standards, and signal-based trust measurement.

Key Points Summary

- The AI agent era has arrived, but AI agents cannot yet realize their full potential because today's web infrastructure was designed for humans. Websites use inconsistent data formats and information goes unverified, which makes it difficult for agents to understand and process data.

- Intuition extends the vision of the semantic web with a Web3 approach that addresses its earlier limitations. It structures knowledge into atoms, uses token curated registries (TCRs) to reach consensus on how data is used, and relies on signals to determine how far the data can be trusted.

- Intuition will change the web. Today's web is like an unpaved road; Intuition builds highways on which agents can operate safely. As it becomes the new infrastructure standard, it will unlock the true potential of the AI agent era.

1. The Age of Agents Begins: Is the Web Infrastructure Ready?

The AI agent era is thriving. We can envision a future where personal agents handle everything from travel planning to complex financial management. In practice, however, it is not that simple. The issue is not AI performance itself; the real limitation lies in today's web infrastructure.

The web is built for humans to read and interpret through browsers, which makes it poorly suited to agents that need to parse meaning and connect relationships across data sources. These limitations show up in everyday services. An airline website might list a departure time as "14:30," while a hotel website shows a check-in time as "2:30 PM." A human immediately sees that both refer to the same time, but an agent treats them as two different data formats.

Source: Tiger Research

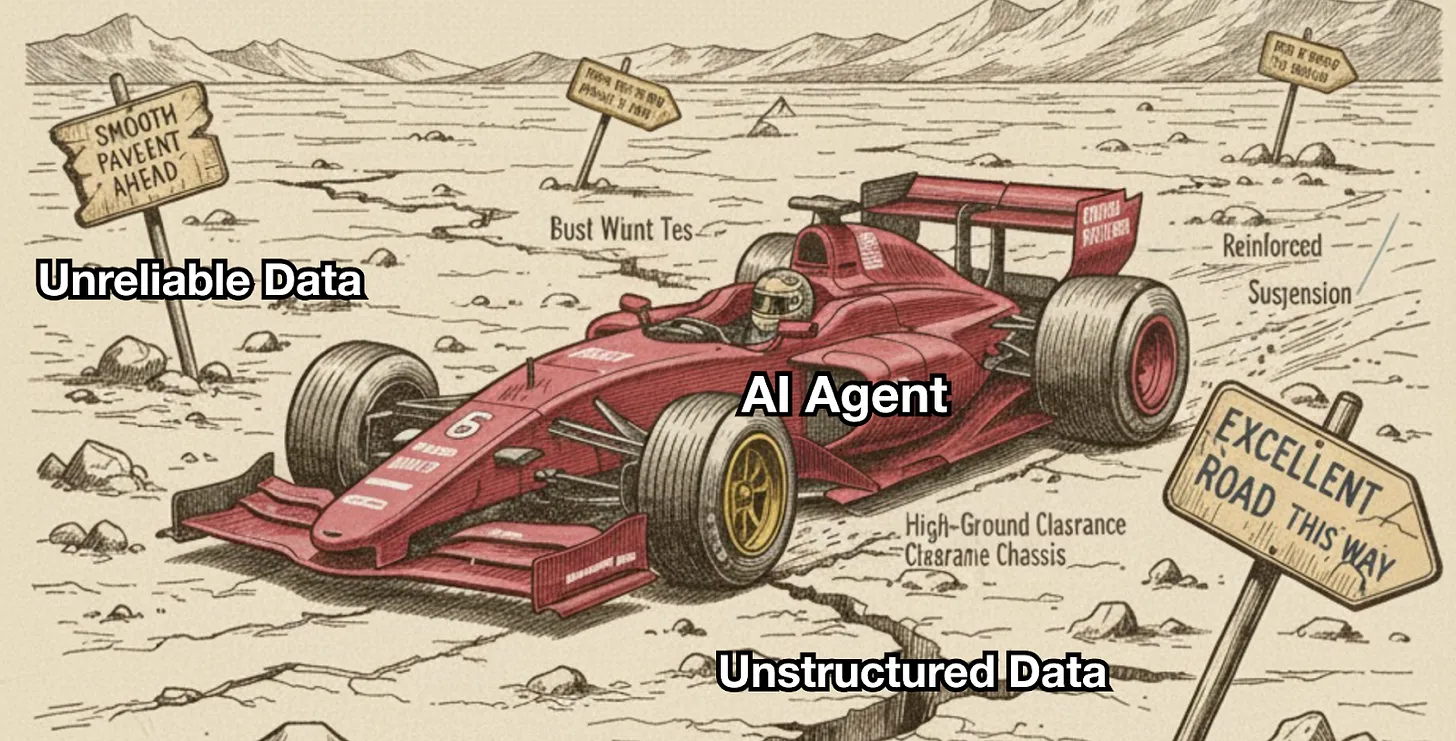
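To make the airline/hotel mismatch concrete, here is a minimal Python sketch of the kind of per-format rule an agent has to carry today. The `parse_time` helper and its `KNOWN_FORMATS` list are hypothetical; the point is that every new site convention needs another hand-written rule before two values can even be compared.

```python
from datetime import datetime, time

# Hypothetical list of clock formats this agent happens to know about.
KNOWN_FORMATS = ("%H:%M", "%I:%M %p")  # "14:30" and "2:30 PM"

def parse_time(raw: str) -> time:
    """Normalize a clock string into a datetime.time, trying each known format."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).time()
        except ValueError:
            continue  # not this format; try the next one
    raise ValueError(f"unrecognized time format: {raw!r}")

airline_departure = parse_time("14:30")    # from the airline site
hotel_check_in = parse_time("2:30 PM")     # from the hotel site
print(airline_departure == hotel_check_in)  # True
```

Any format outside `KNOWN_FORMATS`, such as "14h30", raises `ValueError`, which is exactly the fragility described above.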

The problem is not just about format differences. A key challenge is whether agents can trust the data itself. Humans can handle incomplete information by relying on context and prior experience. In contrast, agents lack clear standards for assessing sources or reliability. This makes them susceptible to erroneous inputs, flawed conclusions, and even hallucinations.

Ultimately, even the most advanced agents cannot thrive in such conditions. They are like F1 cars: no matter how powerful, they cannot race at full speed on an unpaved road (unstructured data). If misleading signs (unreliable data) are scattered along the route, they may never reach the finish line.

2. The Web's Technical Debt: Rebuilding the Foundation

This issue was first raised more than 20 years ago by Tim Berners-Lee, the inventor of the World Wide Web, in his proposal for the semantic web.

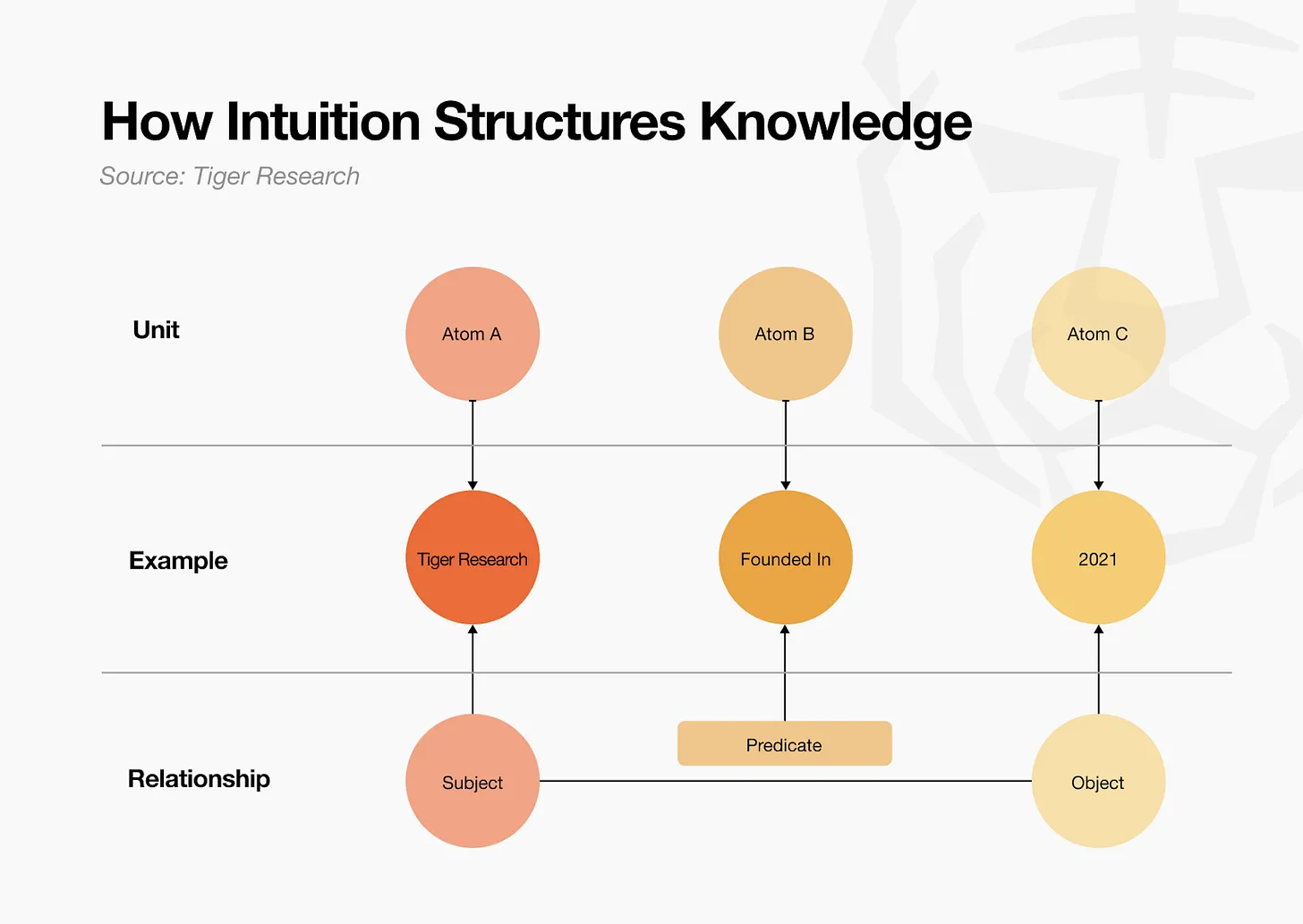

The core idea of the semantic web is simple: structure web information so that machines can understand it, rather than publishing only human-readable text. For example, "Tiger Research was founded in 2021" is clear to humans but is merely a string to a machine. The semantic web structures it as "Tiger Research (subject) - was founded (predicate) - in 2021 (object)" so that machines can interpret its meaning.
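A minimal Python sketch of the difference, assuming a plain in-memory list of triples (the `Triple` type and `founding_year` helper are illustrative, not part of any semantic web library): the raw sentence can only be searched as text, while the triple can be queried by role.

```python
from typing import NamedTuple, Optional

class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str  # named "obj" to avoid shadowing the builtin "object"

# To a machine, the sentence is just a string of characters.
sentence = "Tiger Research was founded in 2021"

# The same fact as a subject-predicate-object triple.
facts = [Triple("Tiger Research", "was founded", "2021")]

def founding_year(triples: list[Triple], org: str) -> Optional[str]:
    """Answer 'when was <org> founded?' by matching on subject and predicate."""
    for t in triples:
        if t.subject == org and t.predicate == "was founded":
            return t.obj
    return None

print(founding_year(facts, "Tiger Research"))  # 2021
```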

This approach was ahead of its time but ultimately failed to take hold. The biggest obstacle was implementation. Reaching consensus on data formats and usage standards proved difficult and, more importantly, building and maintaining large datasets through voluntary contributions was nearly impossible, because contributors received no direct rewards or benefits. Whether the resulting data could be trusted also remained unresolved.

Nevertheless, the vision of the semantic web remains valid. The principle that machines should understand and utilize data at a semantic level has not changed. In the AI era, this need has become even more critical.

3. Intuition: Reviving the Semantic Web in a Web3 Manner

Intuition extends the vision of the semantic web with a Web3 approach that addresses these limitations. At its core is a system that rewards users for voluntarily contributing and verifying high-quality structured data. Over time, this builds a knowledge graph that is machine-readable, has clear provenance, and can be verified. Ultimately, this gives agents a foundation to operate reliably and brings us closer to the future we envision.

3.1. Atoms: The Building Blocks of Knowledge

Intuition first breaks all knowledge down into its smallest units, called atoms. Atoms represent concepts such as people, dates, organizations, or attributes. Each atom has a unique identifier (using technologies such as decentralized identifiers, or DIDs) and exists independently. Each atom also records contributor information, so anyone can verify who added what information and when.

The reason for breaking knowledge into atoms is straightforward. Information often appears as complex sentences, and machines such as agents have structural limitations when parsing and understanding such composite information. They also struggle to determine which parts are accurate and which are wrong.

Subject: Tiger Research | Predicate: was founded | Object: in 2021

Consider the sentence "Tiger Research was founded in 2021." It may be entirely true or only partly correct. Whether the organization actually exists, whether "founding date" is an appropriate attribute, and whether 2021 is correct all need to be verified individually. Treating the entire sentence as a single unit makes it difficult to tell which elements are accurate and which are wrong, and tracking the source of each piece of information becomes complex.

Atoms solve this problem. By defining each element as an independent atom, such as [Tiger Research], [was founded], and [2021], you can record sources and verify each element individually.
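The sketch below shows one way to give each atom its own identifier and provenance record in Python. It is an assumption-laden illustration, not Intuition's implementation: the identifier is a hypothetical content hash standing in for the DIDs mentioned above, and contributor names like `0xalice` are placeholders.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class Atom:
    atom_id: str          # hypothetical content-derived ID (stand-in for a DID)
    label: str            # the concept, e.g. "Tiger Research"
    creator: str          # who added it
    created_at: datetime  # when it was added

class AtomRegistry:
    """Toy registry: one atom per concept, each with its own provenance."""

    def __init__(self) -> None:
        self._atoms: dict[str, Atom] = {}

    def create(self, label: str, creator: str) -> Atom:
        atom_id = "atom:" + hashlib.sha256(label.encode("utf-8")).hexdigest()[:16]
        if atom_id in self._atoms:
            # The concept already exists: reuse it and keep the original provenance.
            return self._atoms[atom_id]
        atom = Atom(atom_id, label, creator, datetime.now(timezone.utc))
        self._atoms[atom_id] = atom
        return atom

registry = AtomRegistry()
org = registry.create("Tiger Research", creator="0xalice")
predicate = registry.create("was founded", creator="0xbob")
year = registry.create("2021", creator="0xcarol")

# Each element can now be checked and attributed on its own.
for atom in (org, predicate, year):
    print(atom.atom_id, atom.label, atom.creator)
```

Deriving the ID from the content means two contributors proposing the same label end up pointing at the same atom; a real system would also need to handle distinct concepts that share a label.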

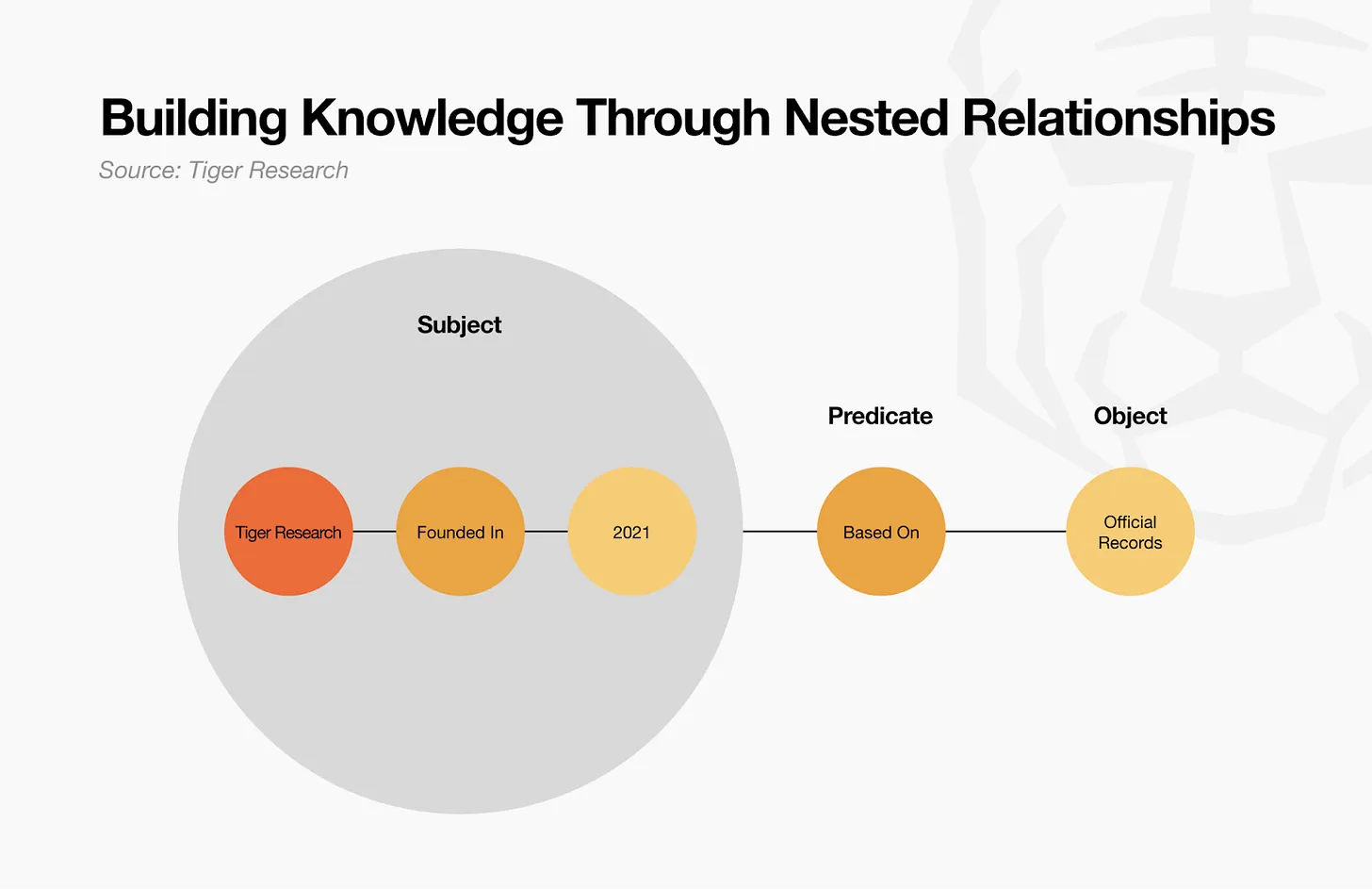

Subject: The founding date of Tiger Research is 2021 | Predicate: based on | Object: official records

Atoms are not just tools for splitting information; they can also be combined like Lego blocks. For example, the individual atoms [Tiger Research], [was founded], and [2021] connect to form a triple, which expresses meaningful information: "Tiger Research was founded in 2021." This is the same structure as a triple in RDF (Resource Description Framework), the semantic web's data model.

A triple can itself become an atom. The triple "Tiger Research was founded in 2021" can be used inside new triples, such as "Tiger Research's founding date of 2021 is based on business records." In this way, atoms and triples combine repeatedly, growing from small units into larger structures.

The result is a fractal knowledge graph that can expand indefinitely from basic elements. Even complex knowledge can be broken down for verification and then recombined.
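To illustrate the fractal structure, here is a minimal recursive data type in Python in which a triple's subject, predicate, or object may itself be a triple. Atoms are simplified to plain strings, and `render` and `depth` are hypothetical helpers, not part of Intuition's tooling.

```python
from dataclasses import dataclass
from typing import Union

@dataclass(frozen=True)
class Triple:
    subject: "Node"
    predicate: "Node"
    obj: "Node"

# A node is either an atom (simplified here to a string) or another triple.
Node = Union[str, Triple]

def render(node: Node) -> str:
    """Write a node out, wrapping every nested triple in parentheses."""
    if isinstance(node, Triple):
        return f"({render(node.subject)} {render(node.predicate)} {render(node.obj)})"
    return node

def depth(node: Node) -> int:
    """How many levels of triples are nested inside this node (atoms are depth 0)."""
    if isinstance(node, Triple):
        return 1 + max(depth(node.subject), depth(node.predicate), depth(node.obj))
    return 0

founded = Triple("Tiger Research", "was founded", "2021")
sourced = Triple(founded, "based on", "business records")  # a triple about a triple

print(render(sourced))  # ((Tiger Research was founded 2021) based on business records)
print(depth(sourced))   # 2
```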

3.2. TCRs: Market-Driven Consensus

Atoms give Intuition a conceptual framework for structured knowledge, but three key questions remain: Who will contribute to creating these atoms? Which atoms can be trusted? And when different atoms compete to represent the same concept, which one becomes the standard?

Source: Intuition Light Paper

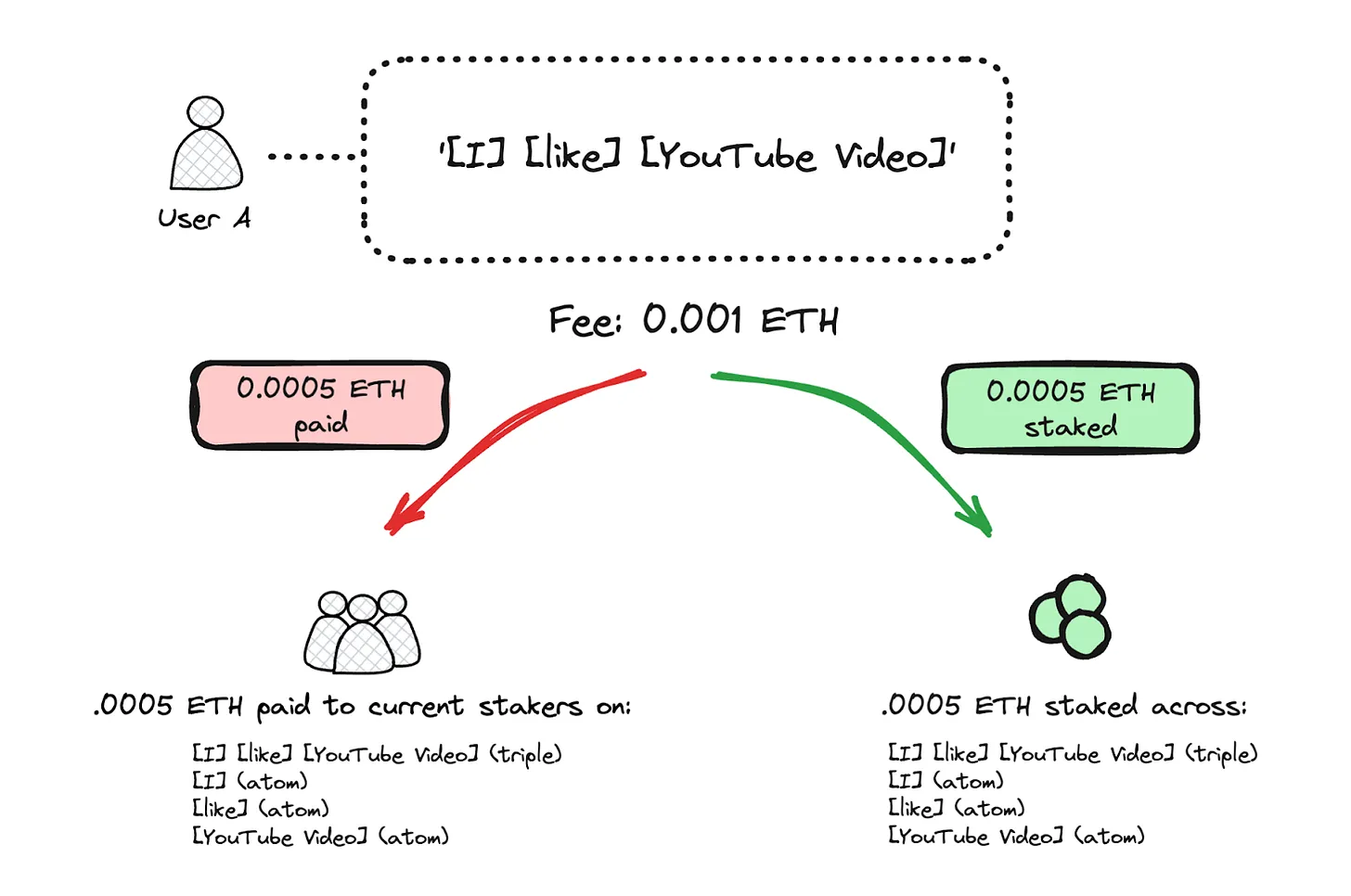

Intuition addresses these questions through TCRs. A TCR filters entries according to what the community values, and token stakes express those judgments. Users stake $TRUST (Intuition's native token) when proposing new atoms, triples, or data structures. Other participants stake on the supporting side if they find the proposal useful and on the opposing side if they do not; they can also stake on competing alternatives. If the data a user backed is used frequently or rated highly, that user is rewarded. Otherwise, they lose part of their stake.
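A toy Python model of the staking flow just described. The settlement rule, the 10% `SLASH_RATE`, and the `USAGE_THRESHOLD` standing in for "frequently used or highly rated" are all hypothetical parameters chosen for illustration; the source does not specify Intuition's actual reward mechanics.

```python
from dataclasses import dataclass, field

SLASH_RATE = 0.10      # hypothetical: share of stake the losing side forfeits
USAGE_THRESHOLD = 100  # hypothetical: usage count that counts as "useful"

@dataclass
class Proposal:
    name: str  # e.g. a proposed atom or triple
    support: dict[str, float] = field(default_factory=dict)
    oppose: dict[str, float] = field(default_factory=dict)

    def stake(self, user: str, amount: float, supports: bool) -> None:
        side = self.support if supports else self.oppose
        side[user] = side.get(user, 0.0) + amount

def settle(proposal: Proposal, usage_count: int) -> dict[str, float]:
    """Return each staker's balance once usage has decided the proposal's fate."""
    useful = usage_count >= USAGE_THRESHOLD
    winners = proposal.support if useful else proposal.oppose
    losers = proposal.oppose if useful else proposal.support

    balances: dict[str, float] = {}
    # The losing side forfeits part of its stake into a shared pool.
    pool = 0.0
    for user, amount in losers.items():
        penalty = amount * SLASH_RATE
        pool += penalty
        balances[user] = balances.get(user, 0.0) + amount - penalty

    # The winning side keeps its stake and splits the pool pro rata.
    total_winning = sum(winners.values())
    for user, amount in winners.items():
        reward = pool * amount / total_winning if total_winning > 0 else 0.0
        balances[user] = balances.get(user, 0.0) + amount + reward
    return balances

listing = Proposal("[hasReview]")
listing.stake("alice", 100, supports=True)
listing.stake("bob", 50, supports=True)
listing.stake("carol", 60, supports=False)
print(settle(listing, usage_count=250))  # {'carol': 54.0, 'alice': 104.0, 'bob': 52.0}
```

Total tokens are conserved whenever the winning side has stakers: what the losers forfeit is exactly what the winners gain.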

TCRs validate individual claims, but they also address the problem of ontology standardization: deciding which form becomes the common standard when there are multiple ways to express the same concept. Distributed systems must reach this consensus without centralized coordination.

Consider two competing predicates for product reviews: [hasReview] and [customerFeedback]. If [hasReview] is introduced first and many users build on it, early contributors hold stakes that benefit from its success. Meanwhile, supporters of [customerFeedback] have an economic incentive to gradually shift toward the more widely adopted standard.

This mechanism mirrors how the ERC-20 token standard was adopted. Developers who adopted ERC-20 gained clear compatibility benefits: direct integration with existing wallets, exchanges, and dApps. These advantages naturally drew developers to ERC-20, showing that market-driven choice alone can resolve standardization problems in distributed environments. TCRs operate on similar principles. They reduce the friction agents face with fragmented data formats and provide an environment where information can be understood and processed more consistently.
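A toy Python simulation of the [hasReview] versus [customerFeedback] dynamic. It assumes, purely for illustration, that in each round a fixed fraction of the stake on every trailing predicate migrates to the current leader; the starting stakes and the 25% rate are invented numbers, not measurements.

```python
def leader(stakes: dict[str, float]) -> str:
    """The predicate with the most stake; ties go to the alphabetically first name."""
    return max(sorted(stakes), key=lambda p: stakes[p])

def migrate(stakes: dict[str, float], rate: float) -> dict[str, float]:
    """One round: a fraction `rate` of each trailing predicate's stake moves to the leader."""
    top = leader(stakes)
    moved = {p: s * rate for p, s in stakes.items() if p != top}
    updated = {p: s - moved.get(p, 0.0) for p, s in stakes.items()}
    updated[top] += sum(moved.values())
    return updated

# Hypothetical starting point: [hasReview] launched first and attracted more stake.
stakes = {"hasReview": 700.0, "customerFeedback": 300.0}
for round_no in range(1, 6):
    stakes = migrate(stakes, rate=0.25)
    print(round_no, {p: round(s, 1) for p, s in stakes.items()})
```

Because stake only moves and is never created, the total stays constant while the leading predicate's share keeps growing, which is the convergence toward a single standard the text describes.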

3.3. Signals: Building a Trust-Based Knowledge Network

Intuition structures knowledge through atoms and triples and reaches consensus on "what is actually used" through incentives.

The final challenge remains: how far can we trust this information? Intuition introduces signals to fill this gap. Signals express users' trust or distrust in specific atoms or triples. They go beyond recording that data exists; they capture how much support the data receives in different contexts. Signals systematize the social verification we rely on in everyday life, such as judging information because "a reliable person recommended this" or "an expert verified it."

Signals accumulate in three ways. First, explicit signals are intentional evaluations by users, such as token staking. Second, implicit signals arise naturally from usage patterns, such as repeated queries or applications. Finally, transitive signals capture relational effects: when people I trust support a piece of information, I am inclined to trust it more as well. Together, these three create a knowledge network that shows who trusts what, how much, and in what way.
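A minimal Python sketch of how the three kinds of signals might be combined into a single score for one claim. The weights, the log damping of query counts, and the `my_trust` map are hypothetical choices made for illustration; the source does not specify a formula.

```python
import math
from dataclasses import dataclass, field

# Hypothetical weights for each signal type.
W_EXPLICIT, W_IMPLICIT, W_TRANSITIVE = 1.0, 0.5, 2.0

@dataclass
class ClaimSignals:
    # explicit: user -> net stake (positive = support, negative = oppose)
    stakes: dict[str, float] = field(default_factory=dict)
    # implicit: how often agents or apps queried the claim
    queries: int = 0

def trust_score(signals: ClaimSignals, my_trust: dict[str, float]) -> float:
    """Combine explicit, implicit, and transitive signals from one observer's viewpoint."""
    explicit = sum(signals.stakes.values())
    implicit = math.log1p(signals.queries)  # damp raw usage so heavy querying cannot dominate
    # Transitive: stakes count extra when they come from people this observer trusts.
    transitive = sum(my_trust.get(user, 0.0) * stake for user, stake in signals.stakes.items())
    return W_EXPLICIT * explicit + W_IMPLICIT * implicit + W_TRANSITIVE * transitive

claim = ClaimSignals(stakes={"alice": 10.0, "bob": -4.0}, queries=0)
print(trust_score(claim, my_trust={"alice": 1.0}))  # 26.0
print(trust_score(claim, my_trust={"bob": 1.0}))    # -2.0
```

The same claim scores very differently for an observer who trusts the supporter than for one who trusts the opponent, which is the "who trusts what" dimension the text describes.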

Source: Intuition White Paper

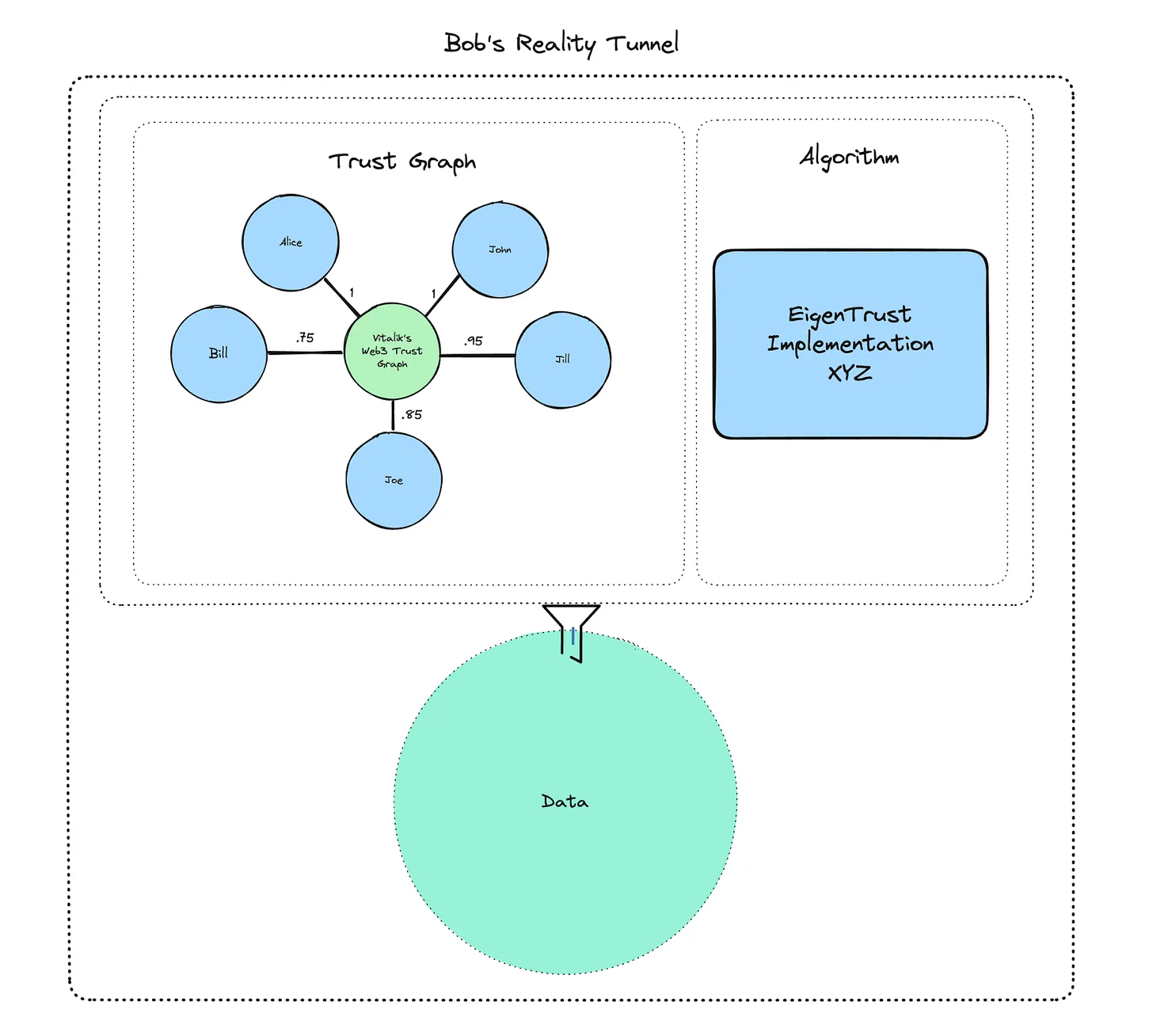

Intuition puts these signals to work through Reality Tunnels, which offer personalized perspectives on the data. Users can configure tunnels that prioritize evaluations from expert groups, weight the opinions of close friends, or reflect the wisdom of specific communities. They can choose a tunnel they trust or switch between several for comparison. Agents can likewise apply a specific interpretive lens for a particular purpose. For example, selecting a tunnel that reflects Vitalik Buterin's trusted network sets the agent to interpret information and make decisions from "Vitalik's perspective."
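As a rough illustration of the Reality Tunnel idea, the Python sketch below ranks the same claims differently depending on whose stakes a tunnel counts. The `Tunnel` type, the account names, and the weighting rule are hypothetical; they model the concept described above, not Intuition's interface.

```python
from dataclasses import dataclass

@dataclass
class Tunnel:
    name: str
    weights: dict[str, float]  # account -> how much this tunnel trusts it

# claim -> {account: net stake}; all names and numbers are made up for illustration.
STAKES = {
    "claim A": {"expert_1": 50.0, "friend_1": -10.0},
    "claim B": {"expert_1": -20.0, "friend_1": 40.0},
}

def view(tunnel: Tunnel, stakes: dict[str, dict[str, float]]) -> dict[str, float]:
    """Score every claim using only the accounts this tunnel trusts."""
    return {
        claim: sum(tunnel.weights.get(account, 0.0) * s for account, s in by_account.items())
        for claim, by_account in stakes.items()
    }

def rank(tunnel: Tunnel, stakes: dict[str, dict[str, float]]) -> list[str]:
    """Claims ordered from most to least trusted through this tunnel (ties by name)."""
    scores = view(tunnel, stakes)
    return sorted(scores, key=lambda claim: (-scores[claim], claim))

experts = Tunnel("experts", {"expert_1": 1.0})
friends = Tunnel("close friends", {"friend_1": 1.0})
print(rank(experts, STAKES))  # ['claim A', 'claim B']
print(rank(friends, STAKES))  # ['claim B', 'claim A']
```

The underlying stakes are shared; only the lens changes, so switching tunnels is cheap and the ranking can be compared side by side.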

All signals are recorded on-chain. Users can transparently verify why a specific piece of information appears trustworthy: what its sources are, who vouches for it, and how many tokens were staked. This transparent trust-formation process lets users examine the evidence directly rather than accept information blindly. Agents can also build on this foundation to make judgments suited to individual contexts and perspectives.

4. What If Intuition Becomes the Next Generation of Web Infrastructure?

Intuition's infrastructure is not just a concept but a practical solution to the problems agents face in today's web environment.

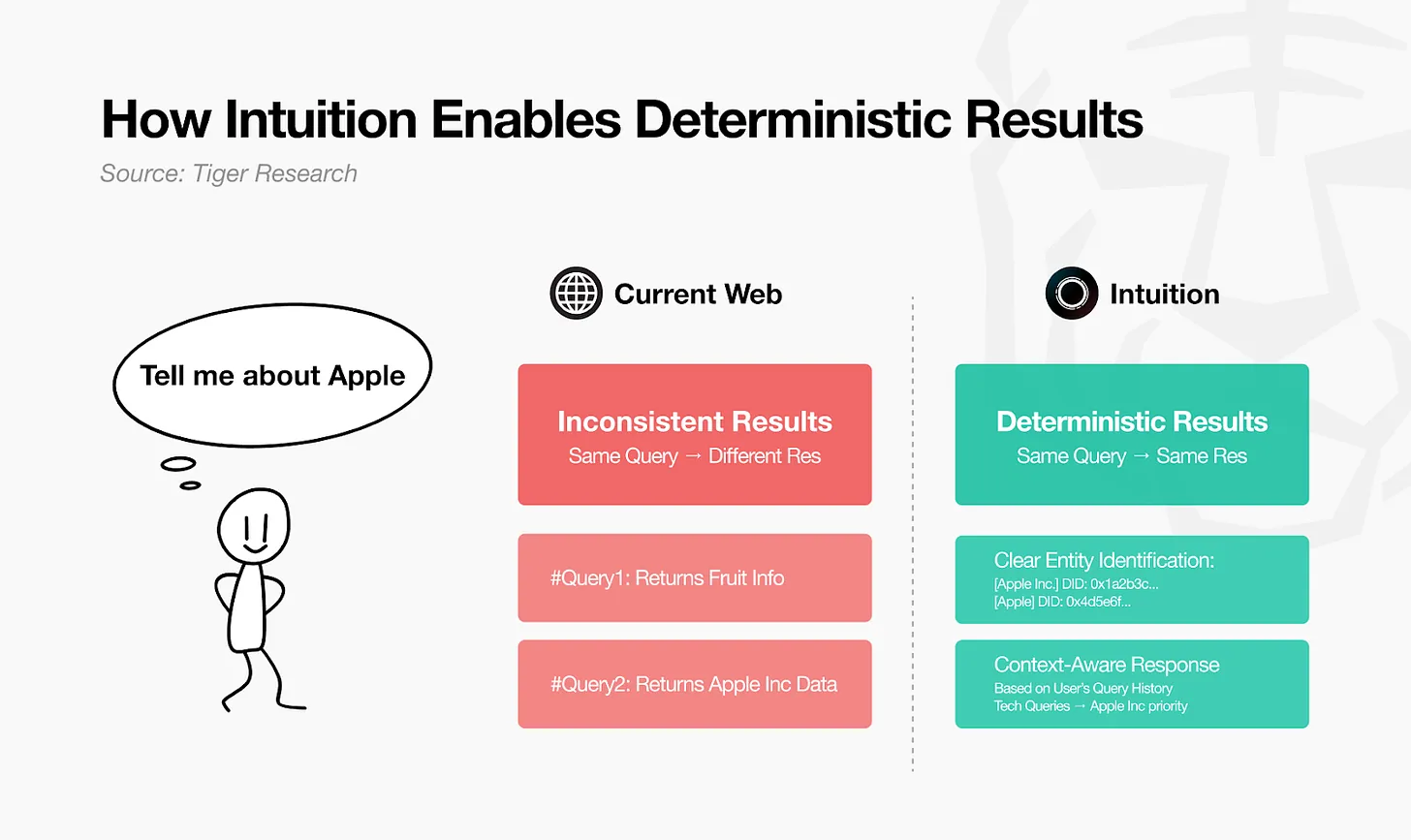

Today's web is filled with fragmented data and unverified information. Intuition turns this data into a deterministic knowledge graph that returns clear, consistent results for any query, and token-based signals and curation validate the data. Agents can then make clear decisions without relying on guesswork, improving accuracy, speed, and efficiency at the same time.

Intuition also provides a foundation for agent collaboration. Standardized data structures allow different agents to understand and communicate information in the same way. Just as ERC-20 created token compatibility, Intuition's knowledge graph creates an environment where agents can collaborate based on consistent data.

Intuition is not limited to agents; it can become a foundational layer shared by all digital services. It can replace the separate trust systems each platform builds today (Amazon's reviews, Uber's ratings, LinkedIn's recommendations) with a unified foundation. Just as HTTP provides a universal communication standard for the web, Intuition offers standard protocols for data structures and trust verification.

The most significant change is data portability. Users directly own the data they create and can use it anywhere. Data isolated on various platforms will connect and reshape the entire digital ecosystem.

5. Rebuilding the Foundation for the Coming Age of Agents

Intuition's goal is not merely a technical improvement. It aims to pay down the technical debt accumulated over the past 20 years and fundamentally redesign web infrastructure. When the semantic web was first proposed, the vision was clear, but it lacked incentives to drive participation, and even if the vision were realized, the benefits remained unclear.

The situation has changed. Advances in AI are making the age of agents a reality. AI agents are now more than simple tools: they act on our behalf to carry out complex tasks, make autonomous decisions, and collaborate with other agents. To operate effectively, these agents require fundamental changes to existing web infrastructure.

Source: Balaji

As Balaji Srinivasan, former CTO of Coinbase, has pointed out, we need to build the right infrastructure for these agents to operate on. Today's web resembles an unpaved road rather than a highway where agents can move safely on trusted data. Each website has a different structure and format, information is unreliable, and data remains unstructured and hard for agents to understand. This creates significant barriers to agents performing accurately and efficiently.

Intuition seeks to rebuild the web to meet these needs. That means constructing standardized data structures that agents can easily understand and use, reliable systems for verifying information, and protocols that enable smooth interaction between agents. Much as HTTP and HTML established the web's standards in the early internet, Intuition is an attempt to establish new standards for the age of agents.

Challenges remain, of course. Without sufficient participation and network effects, the system cannot function properly, and reaching critical mass will take considerable time and effort. Overcoming the inertia of the existing web ecosystem and establishing new standards has never been easy. But this is a challenge that must be met. The rebase Intuition proposes will overcome these challenges and open up new possibilities for an age of agents that is only beginning to be imagined.
