When technology deeply intervenes in life and freedom, how can we avoid a "digital dystopia"?

Written by: Vitalik Buterin

Translated by: Saoirse, Foresight News

Perhaps the most significant trend of this century so far can be summed up in the phrase "the internet has become real life." It began with email and instant messaging: private conversations that for thousands of years took place by word of mouth or on paper moved onto digital infrastructure. Then came digital finance, including both crypto finance and the digitization of traditional finance itself. Then digital technology reached our health: thanks to smartphones, personal health-tracking watches, and data inferred from consumer behavior, all kinds of information about our bodies is now processed by computers and computer networks. Over the next twenty years, I expect this trend to sweep through many more domains, including a wide range of government processes (eventually perhaps even elections), the monitoring of physical and biological indicators and threats in public environments, and ultimately, through brain-computer interfaces, perhaps even our own thoughts.

I believe these trends are inevitable: the benefits are too great, and in a fiercely competitive global environment, civilizations that reject these technologies will first lose competitiveness and then cede sovereignty to those that embrace them. But beyond their powerful benefits, these technologies also profoundly reshape the balance of power both within and between countries.

The civilizations that gain the most from new waves of technology are not the ones that consume it, but the ones that produce it. Centrally planned programs that grant "equal access" to closed platforms and APIs can at best deliver a small fraction of that value, and they often break down outside a predetermined set of "normal" use cases. Moreover, this future involves placing a great deal of trust in technology. If that trust is broken (for example, through backdoors or security failures), the consequences can be serious. Even the mere possibility that it could be broken forces people back onto fundamentally exclusionary models of social trust: "Was this thing built by someone I trust?" This creates incentives that propagate up and down the technology stack: the sovereign is whoever decides on the state of exception.

To avoid these problems, the technologies across the stack (software, hardware, and biotech) need two interrelated core properties: genuine openness (that is, open source, including free licensing) and verifiability (ideally, verifiable directly by end users).

The internet is real life. We hope it becomes a utopia, not a dystopia.

The Importance of Openness and Verifiability in the Health Sector

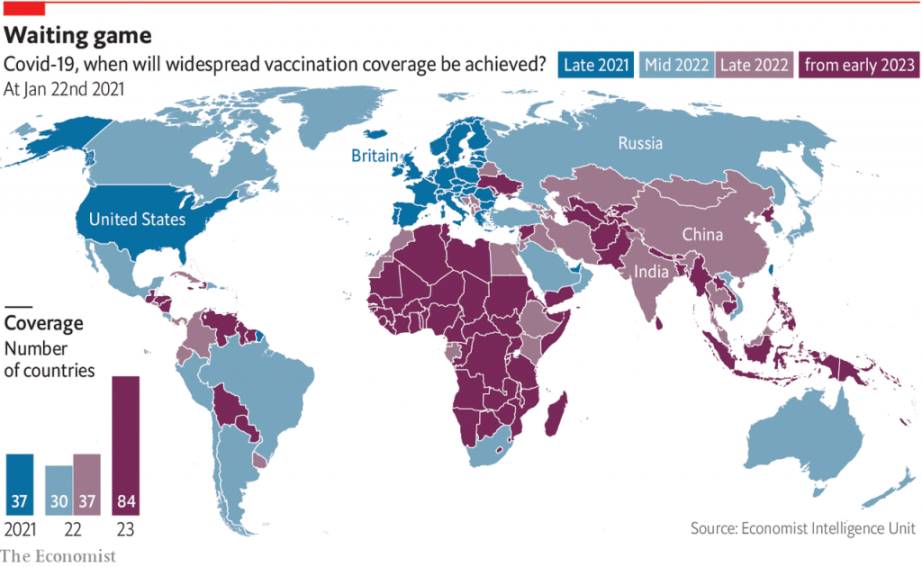

The consequences of unequal access to the means of technological production were starkly exposed during the COVID-19 pandemic. Vaccines were produced in only a few countries, which led to large gaps in when different countries could get them: wealthy countries received high-quality vaccines in 2021, while others received lower-quality vaccines only in 2022 or 2023. Several initiatives tried to ensure equal access, but because vaccine production depended on capital-intensive proprietary manufacturing processes that could only run in a few places, their effectiveness was severely limited.

COVID-19 vaccine coverage data from 2021-2023

The second major problem with the vaccines was a lack of transparency in both the science and the communication strategy: stakeholders tried to convince the public that the vaccines carried no risks or side effects at all. That claim was untrue, and it ultimately did much to fuel public distrust of vaccines. Today, that distrust has grown into skepticism toward much of the science of the past half-century.

Both problems are in fact solvable. For example, vaccines such as PopVax, funded by Balvi, are cheaper to develop and are developed through a much more open process. This both reduces inequality in access and makes it far easier to analyze and verify their safety and efficacy. Going further, we could make verifiability a core goal of vaccine design from the very start.

Similar issues arise on the more digital side of biotechnology. Almost every longevity researcher you talk to says that the future of anti-aging medicine is personalized and data-driven. To recommend the right medications and nutrition for a person today, you need to know the current state of their body, and that requires collecting and processing large amounts of digital data in real time.

A smartwatch collects 1,000 times more personal data than Worldcoin, which has both upsides and downsides.

The same logic applies to defensive biotechnology aimed at preventing harm, such as fighting pandemics. The earlier a pandemic is detected, the more likely it can be stopped at the source; and even if it cannot, each extra week of warning buys time to prepare and to develop countermeasures. While a pandemic is underway, knowing in real time where people are getting sick is essential for deploying responses promptly. If the average infected person could learn of their infection and self-isolate within one hour, instead of spending three days circulating and infecting others, transmission would be about 72 times lower. And if we knew that 20% of locations were responsible for 80% of the spread, targeted improvements to air quality in those places could cut transmission further. Achieving all this requires two things: (1) a large number of sensors, and (2) sensors that can communicate in real time to feed information into other systems.
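The 72x figure is simple arithmetic: three days is 72 hours of exposure, versus one hour. A minimal sketch, assuming (as the estimate implicitly does) that the number of people an infected person infects grows linearly with the hours they spend active while infectious. The function name is hypothetical.

```python
def transmission_reduction(hours_active_before: float, hours_active_after: float) -> float:
    """Ratio of expected onward infections under two isolation delays.

    Simplifying assumption (illustrative only): onward infections scale
    linearly with the hours an infected person spends active while infectious.
    """
    if hours_active_after <= 0:
        raise ValueError("hours_active_after must be positive")
    return hours_active_before / hours_active_after


# Three days (72 hours) of normal activity vs. isolating within one hour.
print(transmission_reduction(3 * 24, 1))  # 72.0
```

Real transmission is not uniform over the infectious period, so treat this as an order-of-magnitude intuition rather than an epidemiological model.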

Going further into sci-fi territory, there are brain-computer interfaces. These could greatly boost human productivity, help people understand each other better through something like telepathic communication, and open safer paths toward highly intelligent AI.

If the infrastructure for biological and health tracking (both personal and spatial) is proprietary, the data flows by default to large corporations. Those corporations can build applications on top of that infrastructure while everyone else is locked out. They may offer limited access through APIs (application programming interfaces), but such access is typically restricted, can be used for monopolistic rent-seeking, and can be revoked at any time. The result is that a small number of people and companies control the core resources of one of the most important technology sectors of the 21st century, which limits who else can benefit economically from it.

On the other hand, if personal health data is poorly secured, attackers who breach it can blackmail you over health problems, or use it to price insurance and medical products so as to extract more money from you. If the data includes location, they could even use it to track you down and kidnap you. In the other direction, your location data (a frequent target of hackers) can be used to infer things about your health. And if a brain-computer interface is hacked, an attacker could directly "read" (or, worse, "alter") your thoughts. This is not science fiction: research has shown that attacks on brain-computer interfaces can cause users to lose motor control (see here for related attacks).

In summary, while these technologies can bring enormous benefits, they also come with significant risks—and strongly emphasizing "openness" and "verifiability" is an effective way to mitigate these risks.

The Importance of Openness and Verifiability in Personal and Commercial Digital Technology

Earlier this month, I needed to fill out and sign a legally binding document while I was abroad. The country in question has a national electronic signature system, but I had not registered for it in advance. In the end I had to print the document, sign it by hand, go to a nearby DHL location, spend a long time filling out a paper shipping form, and pay to have the document expressed internationally. The whole process took half an hour and cost $119. On the same day, I also needed to sign a transaction on the Ethereum blockchain: that took five seconds and cost $0.10 (and to be fair, even without a blockchain, digital signatures can be completely free).

Stories like this are common in corporate and nonprofit governance, intellectual property management, and similar areas; over the past decade, most blockchain startups have included some version of this efficiency comparison in their pitches. Beyond all of these, the single most important use case for exercising personal agency digitally is payments and finance.

Of course, all of this carries a major risk: what if the software or hardware gets hacked? The crypto space recognized this risk early. Blockchains are permissionless and decentralized, so if you lose access to your funds, there is no one to turn to ("not your keys, not your coins"). That is why the crypto space began exploring multi-signature wallets, social recovery wallets, and hardware wallets early on. In reality, however, the lack of a trusted third party in many situations is not an ideological choice but an inherent property of the situation. Even in traditional finance, trusted third parties fail to protect most people: for example, only 4% of scam victims recover their losses. And where personal data is concerned, a leak cannot, even in principle, be undone. So we need true verifiability and security, in software and ultimately in hardware.
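Multi-signature and social recovery wallets spread the power to restore access across several parties, so that no single device or person is a point of failure. One classic way to get the same k-of-n property for a raw secret is Shamir's secret sharing. The sketch below illustrates that swapped-in technique; it is not how any particular wallet works. Ethereum social recovery wallets typically have guardians approve a key rotation rather than reassembling a key. The prime, function names and parameters are illustrative assumptions.

```python
import secrets

# Field modulus: the Mersenne prime 2**127 - 1 (assumption: secrets are smaller than this).
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> list[tuple[int, int]]:
    """Hypothetical helper: split `secret` so any `threshold` of `num_shares` points recover it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    if not 1 <= threshold <= num_shares:
        raise ValueError("need 1 <= threshold <= num_shares")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, reduced mod PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: list[tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Example: a key split among 5 guardians (say, two of your own devices,
# two friends and one institution); any 3 of them can restore it.
key = secrets.randbelow(PRIME)
guardians = split_secret(key, threshold=3, num_shares=5)
assert recover_secret(guardians[:3]) == key
assert recover_secret([guardians[0], guardians[2], guardians[4]]) == key
```

Fewer than `threshold` shares reveal nothing about the secret, which is what lets you hand shares to parties you only partly trust.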

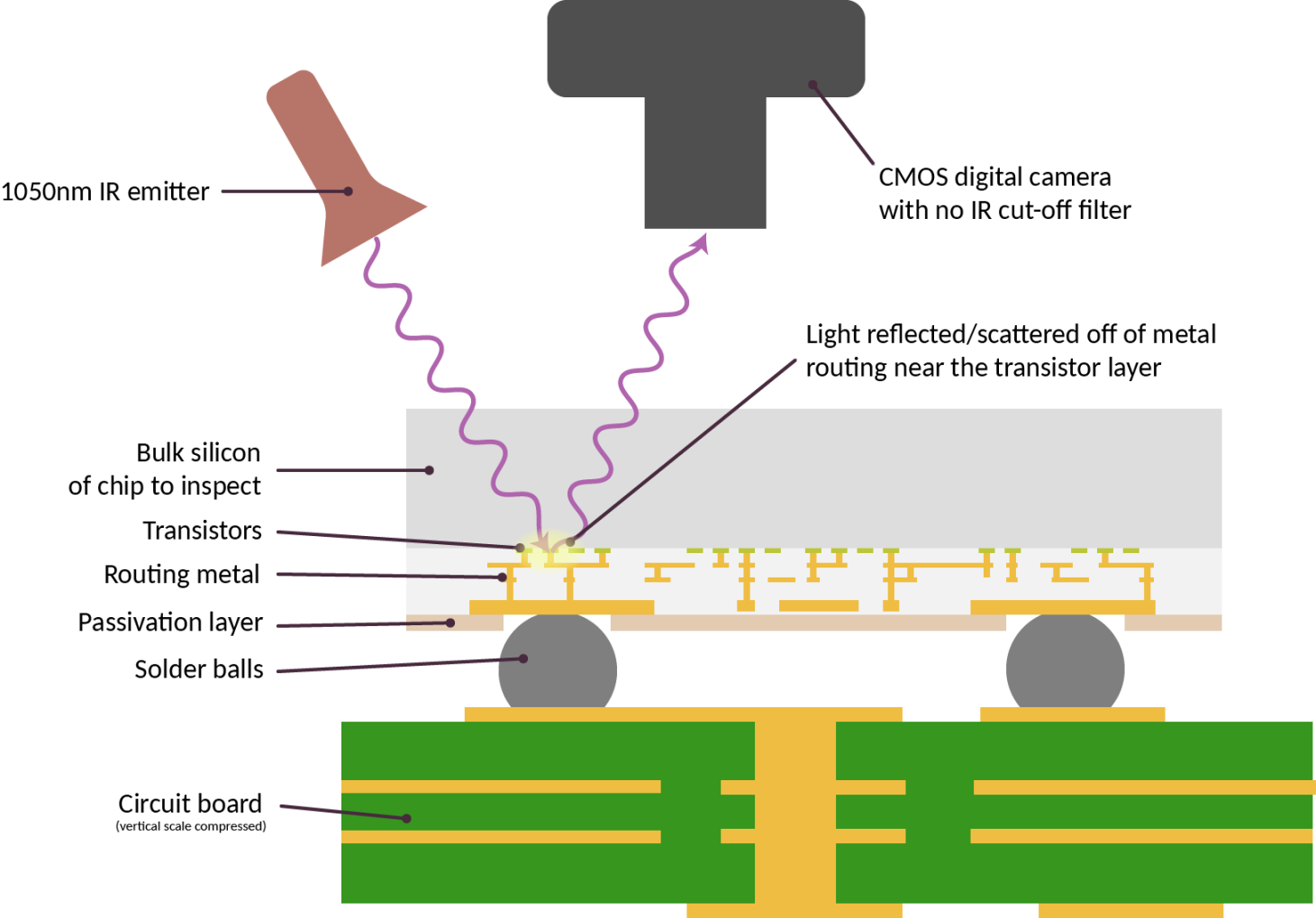

A technical solution for detecting compliance in computer chip manufacturing

Importantly, in hardware, the risks we are trying to guard against go well beyond whether the manufacturer is malicious. The bigger issue is that hardware depends on a large number of external components, most of them closed-source, and a flaw in any one of them can have unacceptable security consequences. Research (see here) has shown that code proven secure in an abstract model can still lose its side-channel resistance because of microarchitecture choices. Side-channel attacks such as EUCLEAK are harder to find precisely because so many of the components they rely on are proprietary. And if AI models are trained on compromised hardware, backdoors could be inserted during training.

Another problem is that even a secure closed, centralized system has other downsides. Centralization creates persistent leverage between individuals, companies, and countries: if your core infrastructure is built and maintained by a potentially untrustworthy company or a potentially untrustworthy country, you are vulnerable to outside pressure (see, for example, Henry Farrell's work on "weaponized interdependence"). This is exactly the kind of problem crypto aims to solve, but it exists far beyond finance.

The Importance of Openness and Verifiability in Digital Citizenship Technology

I regularly talk with people from many fields who are exploring forms of governance better suited to the realities of the 21st century. Some, like Audrey Tang, are working to improve political systems that already function, by empowering local open-source communities and using mechanisms such as citizens' assemblies, sortition, and quadratic voting. Others start from the ground up: some Russian political scientists have drafted a new constitution for Russia (see here) that explicitly guarantees individual freedom and local autonomy, builds in a strong bias toward peace and against aggression, and gives direct democracy an unprecedented role. Still others, such as economists working on land value taxes or congestion pricing, are trying to improve their countries' economies.

People may be more or less enthusiastic about each of these ideas, but they have one thing in common: they all require high-bandwidth participation, so any realistic implementation has to be digital. Pen and paper may be fine for a basic property registry or for elections every four years, but not for anything that needs more frequent input or more information from participants.

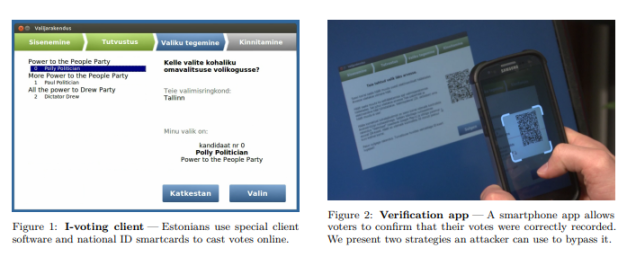

Historically, however, security researchers have ranged from skeptical to hostile toward digital civic technologies such as electronic voting. This summary (see here) captures the core case against electronic voting well:

"First, electronic voting technology relies on 'black box software'—the public cannot access the source code that controls the voting machines. Although companies claim that 'protecting the software is to prevent fraud and combat competition,' this also means the public has no way to understand how the voting software operates. If a company wants to manipulate the software and create false election results, it is not difficult. Furthermore, there is competition among voting machine suppliers, which does not guarantee that they will produce equipment from the perspective of 'voter interests' and 'ballot accuracy.'"

Numerous real-world cases (see here) demonstrate that this skepticism is not unfounded.

A critical analysis of Estonia's internet voting system in 2014

These objections apply to other, similar areas as well. But I predict that as technology advances, refusing to digitize at all will become unrealistic in more and more domains. Technology is pushing the world toward greater efficiency, for better or for worse, and any system that refuses to adapt will gradually be bypassed and lose relevance in both personal and collective affairs. So we need an alternative: tackle the hard problem directly and work out how to make complex technological solutions secure and verifiable.

In theory, "secure and verifiable" and "open source" are different things. Proprietary technology can absolutely be secure: aviation technology is highly proprietary, yet commercial flight is a very safe way to travel. What a proprietary model cannot deliver is security consensus, meaning the ability of parties who do not trust each other to agree that a system is secure.

Civic systems such as elections are a classic case where security consensus is needed. Evidence collection in courts is another. Recently, a Massachusetts court ruled a large volume of breathalyzer evidence invalid because the state crime lab was found to have concealed information about widespread faults in the devices. One report on the ruling explained:

"Are all test results problematic? Not at all. In fact, most breathalyzers in the majority of cases did not have calibration issues. However, investigators later discovered that the state crime lab concealed evidence that 'the scope of malfunctions was broader than claimed,' leading Judge Frank Gaziano to conclude that the due process rights of all relevant defendants had been violated."

The "due process" in court essentially requires not only "fairness" and "accuracy" but also "consensus on fairness and accuracy"—if the public cannot confirm that the court is "acting according to the law," society is likely to fall into a chaotic situation of "private remedies."

Openness also has value in its own right. It lets local communities design governance, identity, and other systems suited to their own goals. If voting systems are proprietary, a country (or province, or city) that wants to try a new voting model faces serious obstacles: it must either persuade a company to implement its preferred rules as a new feature, or build the whole system from scratch and carry out the security verification itself. Either way, the cost of political innovation rises sharply.

In all these areas, adopting an open-source hacker ethic (one that encourages sharing, collaboration, and tinkering) gives more agency to local implementers, whether they act as individuals or as part of a government or company. Two things are needed for this: open-source tools for building such systems must be widely available, and the infrastructure and codebases must be freely licensed so that others can build on them. If the goal is to narrow power gaps, copyleft licensing is especially important (see here).

Another important area of civic technology in the coming years will be physical security. Surveillance cameras have proliferated over the past two decades, raising many civil-liberties concerns. Unfortunately, the rise of drone warfare means that forgoing high-tech security is no longer a viable option. Even if a country's own laws respect civil liberties, that freedom means little if the country cannot protect its citizens from unlawful interference by other countries (or by malicious companies or individuals), and drones make such attacks much easier. So we need defenses, which may include large numbers of counter-drone systems, sensors, and cameras.

If these tools are proprietary, data collection will be opaque and highly centralized. If they are open and verifiable, a better approach becomes possible: security devices output only limited data, only in limited circumstances, and automatically delete everything else. The future of digital physical security could then look more like a "digital watchdog" than a "digital panopticon." We can imagine a world where public surveillance devices are required to be open source and verifiable, and every citizen has the legal right to pick a public surveillance device at random, take it apart, and verify that it complies; university computer clubs could even do this as a teaching exercise.
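The "limited output, automatic deletion" rule can be made concrete. The sketch below assumes a hypothetical CO2 sensor. Raw readings live only in a small rolling buffer that overwrites itself, and the only thing that ever leaves the device is a coarse alert label. The class name, threshold and window size are illustrative assumptions. On real hardware, the guarantee would also depend on the firmware and chip being verifiable, as described above.

```python
from collections import deque
from typing import Optional


class WatchdogSensor:
    """Hypothetical sensor wrapper: raw readings stay in a bounded buffer; only alerts go out."""

    def __init__(self, threshold_ppm: float, window: int = 10) -> None:
        self.threshold_ppm = threshold_ppm
        # deque(maxlen=...) silently discards the oldest reading once full.
        self._recent: deque = deque(maxlen=window)

    def ingest(self, reading_ppm: float) -> Optional[str]:
        """Store one reading; return an alert label or None. Raw values are never returned."""
        self._recent.append(reading_ppm)
        average = sum(self._recent) / len(self._recent)
        return "VENTILATION_NEEDED" if average > self.threshold_ppm else None

    def buffered(self) -> int:
        """How many raw readings are currently held, so an auditor can check the bound."""
        return len(self._recent)


sensor = WatchdogSensor(threshold_ppm=1000, window=3)
print([sensor.ingest(r) for r in [600, 700, 1500, 1600, 1700]])
# [None, None, None, 'VENTILATION_NEEDED', 'VENTILATION_NEEDED']
print(sensor.buffered())  # 3
```

Because the interface only exposes alerts and a buffer size, a random inspection can check two simple properties: what the device emits, and how much it retains.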

Paths to Achieving Open Source and Verifiability

We cannot avoid digital computing becoming deeply embedded in every part of our personal and collective lives. Left to the default path, the digital technologies of the future will most likely be built and run by centralized companies, serve the profits of a few, and be backdoored by their home governments, while most of the world's people can neither take part in creating them nor assess their security. But we can work toward a better path.

Let’s imagine such a world:

You own a secure personal electronic device that combines the power of a smartphone with the security of a hardware wallet. While it is not quite as easy to inspect as a mechanical watch, it comes close.

All your messaging apps are encrypted, message routing is hidden using mixnets, and all of the code has been formally verified. You can be fully confident that your private conversations really are private.

Your financial assets are standardized ERC-20 tokens on-chain (or held on servers that publish hashes and proofs to guarantee correctness), managed by a wallet controlled by your personal device. If you lose the device, you can recover access through a method of your choosing, such as some combination of your other devices, family, friends, or institutions. These need not be government institutions: if recovery is easy enough to offer, organizations like churches might provide it too.
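For the option of servers that publish hashes and proofs, one standard construction is a Merkle tree. The server publishes a single root hash, and each user checks that their own balance is included using a short proof. The sketch below uses SHA-256 and makes several illustrative assumptions, not any specific system's format: the leaf encoding (`account:balance`), the 0x00/0x01 domain-separation prefixes, and duplicating the last node on odd levels. All function names are hypothetical.

```python
import hashlib


def leaf_hash(data: bytes) -> bytes:
    # 0x00 prefix separates leaf hashes from internal-node hashes.
    return hashlib.sha256(b"\x00" + data).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """All tree levels, from hashed leaves up to the single root."""
    level = [leaf_hash(x) for x in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # duplicate the last node on odd levels
        level = [node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; the bool is True if our node is the left child."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        proof.append((level[index ^ 1], index % 2 == 0))
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, proof: list[tuple[bytes, bool]]) -> bool:
    """Recompute the path to the root using only the leaf and the proof."""
    node = leaf_hash(leaf)
    for sibling, node_is_left in proof:
        node = node_hash(node, sibling) if node_is_left else node_hash(sibling, node)
    return node == root


balances = [b"alice:100", b"bob:250", b"carol:75"]
levels = build_levels(balances)
root = levels[-1][0]  # the only value the server needs to publish
proof = prove(levels, 1)
print(verify(root, b"bob:250", proof))  # True
print(verify(root, b"bob:999", proof))  # False
```

The proof grows with the logarithm of the number of accounts, so a phone can check it cheaply, and nobody has to trust the server's word for their own balance.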

Open-source infrastructure on the scale of Starlink has been deployed, providing stable and reliable global communication without depending on a handful of operators.

Your device runs a local open-source large language model (LLM) that watches your activity in real time, offers suggestions, automates tasks, and warns you when you may be receiving false information or are about to make a mistake.

The operating system of the device is also open-source and has undergone formal verification.

You wear a personal health-tracking device around the clock that is also open and verifiable: you can access your health data at any time and be sure that no one can obtain it without your permission.

We have more advanced forms of governance: goals are set through a clever combination of democratic mechanisms such as sortition, citizens' assemblies, and quadratic voting, and the paths to those goals are chosen through some mechanism for filtering expert proposals. As a participant, you can be fully confident that the system is operating according to rules you understand.
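Quadratic voting is one of the mechanisms on this list: casting n votes on a single issue costs n² voice credits. People can express how strongly they care, but concentrating influence gets expensive quickly. The minimal tally sketch below rests on stated assumptions: every voter gets the same budget (100 credits here, an illustrative number), and any ballot that overspends is rejected. Real deployments also need identity and collusion resistance, which this ignores. Function names are hypothetical.

```python
from collections import Counter


def qv_cost(votes: dict[str, int]) -> int:
    """Voice credits spent: the sum of squared votes per option (votes may be negative)."""
    return sum(v * v for v in votes.values())


def tally(ballots: list[dict[str, int]], budget: int = 100) -> Counter:
    """Sum votes per option, skipping any ballot that exceeds the credit budget."""
    totals: Counter = Counter()
    for ballot in ballots:
        if qv_cost(ballot) > budget:
            continue  # invalid ballot: it overspent its credits
        totals.update(ballot)
    return totals


ballots = [
    {"park": 10},               # 100 credits, all on one issue
    {"park": 5, "library": 5},  # 50 credits, spread across two issues
    {"library": 11},            # 121 credits: over budget, rejected
]
print(tally(ballots))  # park: 15, library: 5
```

Note that the first voter spends twice as many credits as the second but gets only as many total votes (10 each), which is the intended dampening of intense minority control.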

Public places are equipped with monitoring devices that track biological variables (such as CO2 levels, air quality indices, the presence of airborne diseases, and wastewater indicators). These devices, along with all surveillance cameras and defensive drones, are open and verifiable, and a legal framework ensures that the public can inspect them at random.

In such a world, we will have greater security, more freedom, and more equal opportunities for global economic participation than we do today. However, to realize this vision, we need to increase investment in various technological fields:

More advanced cryptography: I call zero-knowledge proofs (ZK-SNARKs), fully homomorphic encryption, and obfuscation the "Egyptian god cards" of cryptography. Their power lies in letting arbitrary programs run over data from multiple parties while guaranteeing that the output is correct and keeping both the data and the computation private. This is the foundation for much more powerful privacy-preserving applications. Adjacent tools will also play an important role here, such as blockchains (which guarantee data integrity and ensure users cannot be excluded) and differential privacy (which adds noise to data to further protect privacy).
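Differential privacy's noise-adding step has a standard form, the Laplace mechanism. To release a count that any single person can change by at most 1 (the sensitivity), add noise drawn from a Laplace distribution with scale sensitivity/ε; smaller ε means stronger privacy. The sketch below is minimal and rests on stated assumptions: the function names and ε value are illustrative. It samples Laplace noise as the difference of two exponential draws, and it is not hardened against the floating-point pitfalls that production DP libraries address.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two Exponential(rate=1/scale) draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Hypothetical helper implementing the Laplace mechanism for a counting query."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
# e.g. how many people near a public sensor reported symptoms today (illustrative number)
print(round(private_count(37, epsilon=0.5, rng=rng), 1))
```

Any single release is noisy, but aggregates over many releases or regions stay useful, while no individual's presence in the data can be confidently inferred.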

Application and user-level security: an application is only truly secure if the security guarantees it makes can be understood and verified by its users. That requires software frameworks that make high-security applications easier to build. Even more importantly, browsers, operating systems, and other intermediaries (such as locally running LLMs acting as monitors) must work together to verify applications, assess their risk levels, and present that information clearly to users.

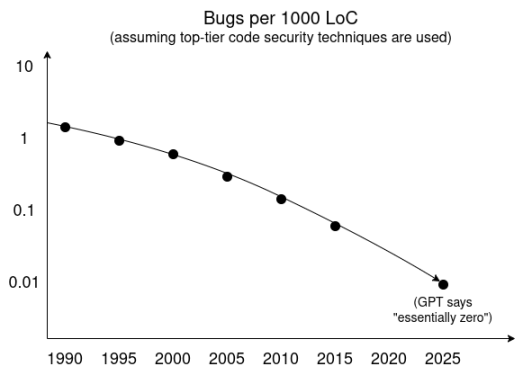

Formal verification: automated proof methods can algorithmically check that programs satisfy key properties (such as not leaking data, or not being modifiable by unauthorized third parties). Lean has recently become a popular language for this. These techniques are already being used to verify zero-knowledge proof systems for the Ethereum Virtual Machine (EVM) and other high-value, high-risk cryptographic use cases, and similar work is happening more broadly. Beyond formal verification, we also need progress in other, more basic security practices.
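To give a flavor of what a machine-checked proof looks like, here is a toy Lean 4 example. It is our own illustration, not taken from any of the projects mentioned. It defines a hypothetical `withdraw` function and a theorem, checked by Lean, that a withdrawal can never leave a balance larger than it started with. Real verification targets such as zkEVM circuits involve far larger specifications, but the workflow is the same: state the property, then have the proof checker confirm it for all inputs rather than for a handful of tests.

```lean
-- Hypothetical toy function: withdraw `amt` only if the balance covers it.
def withdraw (bal amt : Nat) : Nat :=
  if amt ≤ bal then bal - amt else bal

-- Safety property, proven for every possible input:
-- a withdrawal never increases the balance.
theorem withdraw_le (bal amt : Nat) : withdraw bal amt ≤ bal := by
  unfold withdraw
  split
  · exact Nat.sub_le bal amt
  · exact Nat.le_refl bal
```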

The fatalistic view, common in the early 2000s, that cybersecurity can never be fundamentally solved is wrong: bugs (and backdoors) can be beaten. We "only" need to learn to value security more highly than other competing goals.

Open-source, security-focused operating systems: more and more of these are appearing, such as GrapheneOS (a security-focused Android derivative), minimal secure kernels like Asterinas, and Huawei's HarmonyOS, which has an open-source version and is adopting formal verification. Many readers will object: "It's Huawei, so surely it has backdoors?" But this misses the point: if a product is open and anyone can verify it, it should not matter who built it. This example shows how openness and verifiability can counteract global technological fragmentation.

Secure open-source hardware: If we cannot ensure that hardware truly runs specified software and does not leak data in the background, then even the most secure software is meaningless. In this area, I focus on two short-term goals:

Personal secure electronic devices: the blockchain world calls them "hardware wallets," and open-source enthusiasts call them "secure phones," but once you account for the twin needs of security and general-purpose use, the core functions of the two converge.

Physical infrastructure in public places: This includes smart locks, the aforementioned biological monitoring devices, and various IoT technologies. To gain public trust in such facilities, openness and verifiability are essential prerequisites.

Secure open-source toolchains for building open-source hardware: today, hardware design depends heavily on closed-source components. This greatly raises the cost of hardware development, makes it more permissioned, and makes verification hard: if the tools that generate a chip design are closed source, you do not know what you are verifying against. Even existing techniques such as scan chains often cannot be used in practice because essential supporting tools are closed source. This situation, however, can be changed.

Hardware verification (for example, IRIS and X-ray scanning): we need ways to scan a chip and confirm that its actual logic matches its design, with no extra components that could be used for malicious tampering or data exfiltration. Verification can be done in two ways:

Destructive verification: Auditors randomly purchase products containing chips as ordinary end users, dismantle the chips, and verify whether their logic matches the design.

Non-destructive verification: with IRIS or X-ray scanning, every chip can in principle be inspected.
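Destructive verification only checks a sample, so it helps to know how much a random sample buys. Suppose a fraction f of the chips in a batch were tampered with and auditors pick k chips uniformly at random. Treating the draws as independent (a good approximation for large batches), the chance that at least one sampled chip is bad is 1 − (1 − f)^k. A minimal sketch; the function names and numbers are illustrative.

```python
import math


def detection_probability(tampered_fraction: float, samples: int) -> float:
    """P(at least one of `samples` randomly chosen chips is tampered)."""
    return 1 - (1 - tampered_fraction) ** samples


def samples_needed(tampered_fraction: float, target: float) -> int:
    """Smallest sample size whose detection probability reaches `target`."""
    if not (0 < tampered_fraction < 1 and 0 < target < 1):
        raise ValueError("fractions must be strictly between 0 and 1")
    return math.ceil(math.log(1 - target) / math.log(1 - tampered_fraction))


# If 1% of a batch is compromised, about 300 random teardowns catch it ~95% of the time.
print(round(detection_probability(0.01, 300), 3))  # 0.951
print(samples_needed(0.01, 0.95))                  # 299
```

The argument for security consensus is that a modest, independent budget suffices, and a cheating manufacturer cannot know in advance which units will be torn down.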

To achieve security consensus, ideally many people should be able to perform hardware verification. X-ray equipment is not yet widely available, which can be addressed in two ways. First, improve the verification equipment (and design chips to be easier to verify) so that it is more accessible. Second, complement full verification with simpler checks, such as ID tags that can be verified with a smartphone, or signature checks using keys derived from physically unclonable functions (PUFs). Such checks can confirm key facts, for example that a device comes from a known manufacturing batch, and that batch has been thoroughly verified through random sampling by third parties.
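PUF-based checks are usually run as challenge-response protocols. At enrollment, a verifier records the device's responses to random challenges. Later, the device is accepted only if it reproduces a recorded response, and each challenge is used once so recorded answers cannot be replayed. A real PUF's responses come from physical manufacturing variation, which software cannot reproduce. The sketch below therefore simulates it with an HMAC keyed by a hidden per-device secret; that substitution, and all class and function names, are assumptions for illustration only. Real PUF outputs are also noisy and need error correction, which this ignores.

```python
import hashlib
import hmac
import secrets


class SimulatedPUF:
    """Hypothetical stand-in for a physical PUF: a hidden key plays the role of silicon variation."""

    def __init__(self) -> None:
        self._hidden = secrets.token_bytes(32)  # in a real chip, never exported

    def respond(self, challenge: bytes) -> bytes:
        return hmac.new(self._hidden, challenge, hashlib.sha256).digest()


def enroll(device: SimulatedPUF, count: int) -> dict[bytes, bytes]:
    """Auditor records challenge-response pairs while the device is in trusted hands."""
    pairs = {}
    for _ in range(count):
        challenge = secrets.token_bytes(16)
        pairs[challenge] = device.respond(challenge)
    return pairs


def check(device: SimulatedPUF, crps: dict[bytes, bytes]) -> bool:
    """Spend one unused challenge; accept only if the response matches."""
    if not crps:
        raise RuntimeError("no unused challenges left")
    challenge, expected = crps.popitem()  # removed so it is never reused
    return hmac.compare_digest(device.respond(challenge), expected)


genuine = SimulatedPUF()
crps = enroll(genuine, count=4)
print(check(genuine, crps))         # True
print(check(SimulatedPUF(), crps))  # False: a different device cannot reproduce responses
```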

Open-source, low-cost local environmental and biological monitoring devices: communities and individuals should be able to monitor their own environment and health and identify biological risks. These devices take many forms, including personal medical devices like OpenWater, air quality sensors, general-purpose airborne disease sensors like Varro, and larger-scale environmental monitoring equipment.

Every layer of the technology stack requires openness and verifiability.

From Vision to Implementation: Paths and Challenges

Compared with conventional visions of technological progress, the fully open-source and verifiable vision differs in one key respect: it puts much more weight on preserving local sovereignty, empowering individuals, and enabling freedom. On security, it aims not to eliminate every global threat, but to make every layer of the technology stack more robust. On openness, it means more than API access planned by a central authority: every layer of the stack can be improved, optimized, and rebuilt. On verification, it is no longer the exclusive domain of proprietary auditors (who may even have conflicts of interest with vendors and governments), but a basic right of the public and even a socially encouraged practice: anyone can take part in verification instead of passively accepting security promises.

This vision fits the fragmented global landscape of the 21st century better, but the window to realize it is narrow. Centralized security approaches are advancing fast, built around adding more centralized data-collection points, pre-installing backdoors, and reducing verification to a single question: "Does it come from a trusted developer or manufacturer?" For decades, centralized substitutes for truly open access have kept appearing, from Facebook's early Internet.org to today's more complex models of technological monopoly, each harder to see through than the last. So we face two tasks: accelerate the development and deployment of open-source, verifiable technology so it can compete with centralized alternatives, and make clear to the public and to institutions that safer and fairer technology is not a fantasy but a practical reality.

If we succeed, we will get a world that could be called retro-futurist. On one hand, we will enjoy the benefits of cutting-edge technology: better health through more powerful tools, more efficient and robust ways of organizing society, and defenses against both new and old threats (such as pandemics and drone attacks). On the other hand, we will regain core qualities of the technology ecosystem of the 1900s. Infrastructure will no longer be a black box out of ordinary people's reach, but a set of tools that can be taken apart, verified, and modified to fit individual needs. Anyone will be able to go beyond being a consumer or an application developer and innovate at any layer of the stack, whether by improving chip designs or strengthening an operating system's security. Most importantly, people will be able to genuinely trust technology, confident that devices do what they claim and do not secretly steal data or take unauthorized actions.

Full open source and verifiability are not free. Optimizing hardware and software for performance often makes them harder to understand and more fragile, and open source conflicts with the business models of most traditional companies. These costs are often overstated, but shifting public and market attitudes toward open-source verifiability will take time. So we should set a pragmatic short-term goal: prioritize building fully open-source, verifiable technology stacks for applications that need high security but are not performance-critical. This covers consumer and institutional settings, remote and local contexts, and hardware and biological monitoring.

The rationale for this choice is that most scenarios with extremely high demands for "security" (such as health data storage, election voting systems, financial key management) do not have stringent performance requirements; even if some scenarios require certain performance, a balanced strategy can be achieved through a combination of "high-performance untrusted components + low-performance trusted components"— for example, using high-performance chips to process ordinary data while using security chips that have undergone open-source verification to handle sensitive information, ultimately ensuring security while meeting efficiency needs.
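Routing sensitive data to the trusted chip is one way to combine the two. A related pattern lets the fast, untrusted component do the heavy computation while the slow, trusted component only checks the answer, which is often cheaper than recomputing it. A minimal sketch using sorting as the workload. The function names are hypothetical, and the "untrusted" side is just a Python function standing in for a separate processor. For some tasks, such as verifying a zero-knowledge proof instead of redoing the computation, the gap between computing and checking is far larger than it is here.

```python
from collections import Counter


def untrusted_sort(values: list[int]) -> list[int]:
    """Runs on the fast, unverified component; in principle it could return anything."""
    return sorted(values)


def trusted_check(original: list[int], claimed: list[int]) -> bool:
    """Runs on the small, verified component: one ordering pass plus a hash-based count."""
    in_order = all(a <= b for a, b in zip(claimed, claimed[1:]))
    same_items = Counter(original) == Counter(claimed)
    return in_order and same_items


data = [42, 7, 19, 7, 3]
print(trusted_check(data, untrusted_sort(data)))  # True
print(trusted_check(data, [3, 7, 19, 42]))        # False: an item was dropped
```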

We do not need to pursue "extreme security and openness for all fields"— this is neither realistic nor necessary. But we must ensure that in those core areas directly related to personal rights, social equity, and public safety (such as healthcare, democratic participation, and financial security), "open-source verifiability" becomes a standard technical configuration, allowing everyone to enjoy secure and trustworthy digital services.

Special thanks to Ahmed Ghappour, bunnie, Daniel Genkin, Graham Liu, Michael Gao, mlsudo, Tim Ansell, Quintus Kilbourn, Tina Zhen, Balvi volunteers, and the GrapheneOS developers for their feedback and participation in discussions.
