Introduction

In just 30 minutes, we took a Spanish-learning AI assistant live. It generates unlimited practice questions and grades answers in real time. Its backend does not run on an expensive centralized cloud; it relies entirely on Gonka, an emerging decentralized AI computing network. Below are the full walkthrough, the core code, and an online demo.

A New Paradigm for Decentralized AI Computing

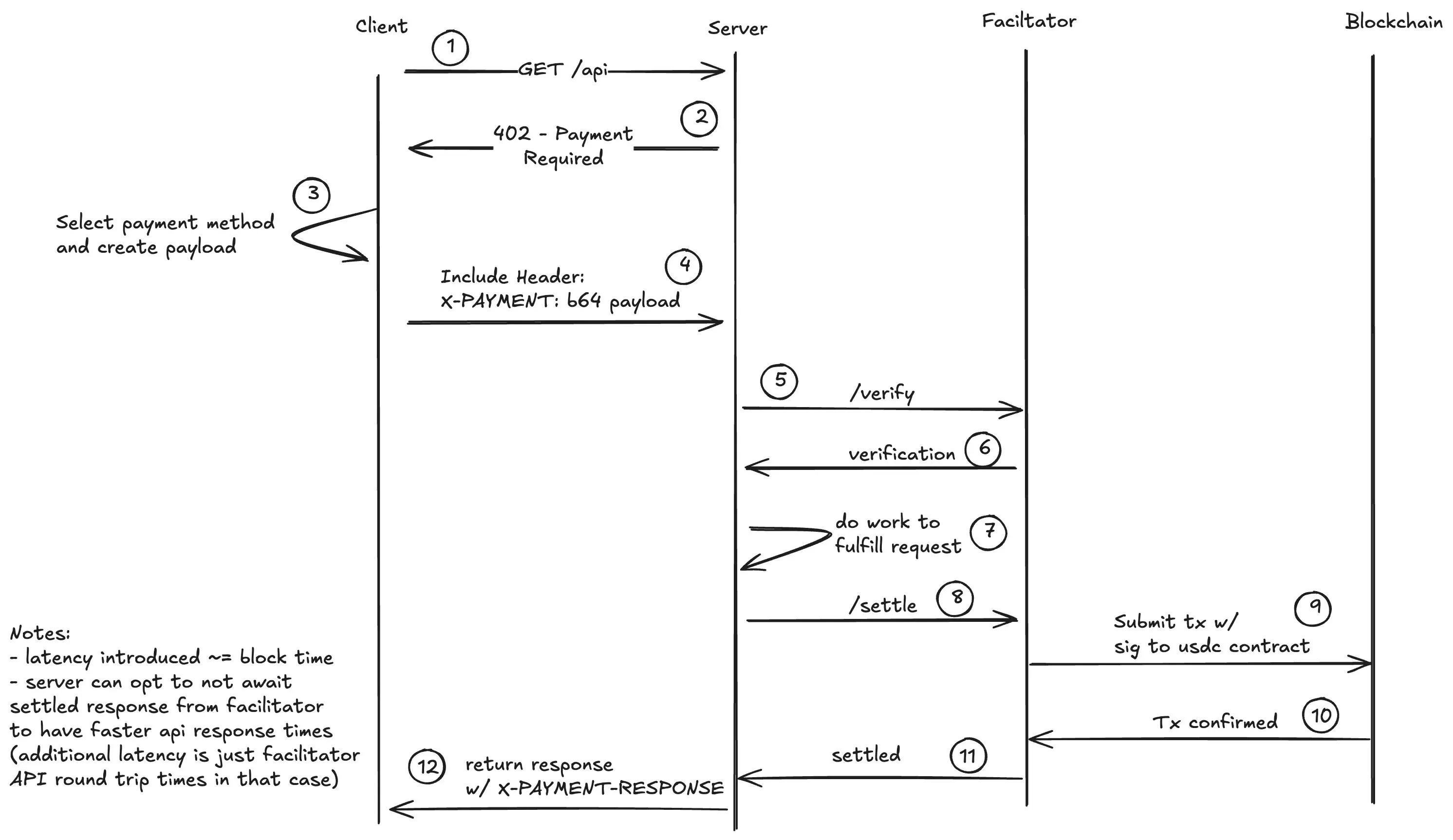

Gonka is a proof-of-work-based AI computing network. It load-balances OpenAI-style inference tasks across verified hardware providers, forming a new decentralized AI service ecosystem. The network uses the GNK token for payments and incentives, and it deters fraud through random spot checks. Developers who already know how to call the OpenAI API can connect to Gonka and deploy applications quickly.

Practical Case: Intelligent Spanish Learning Application

We will build a Spanish learning application that continuously generates personalized exercises. Imagine a scenario like this:

- After the user clicks "Start New Practice," the AI generates a fill-in-the-blank sentence with a single blank.

- The user inputs an answer and clicks "Check," and the AI immediately provides a score and personalized analysis.

- The system automatically moves to the next exercise, creating an immersive learning loop.

Online Demo: carrera.gonka.ai

Technical Architecture Analysis

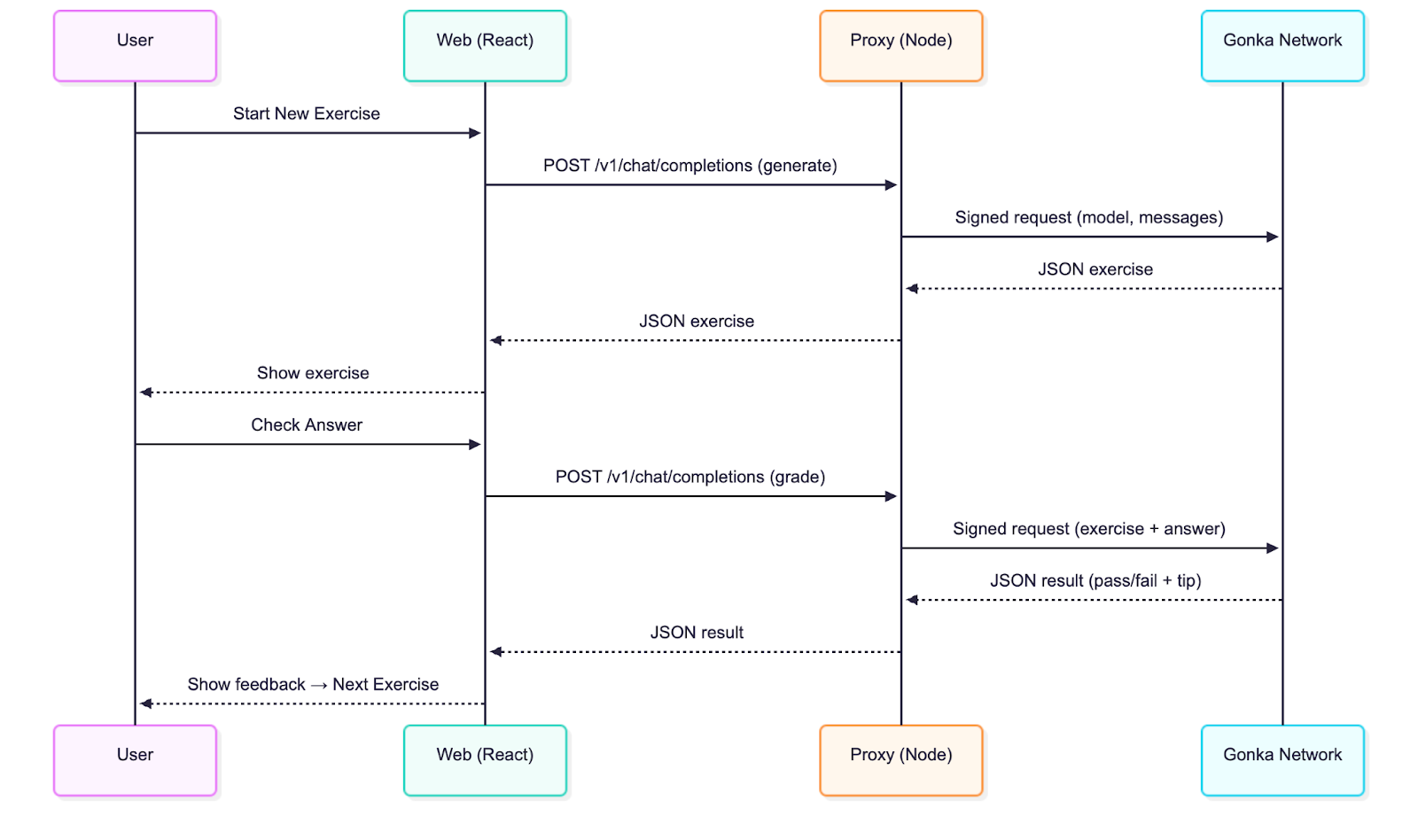

The application adopts a classic front-end and back-end separation architecture:

- React Frontend: Built on Vite, fully compatible with OpenAI interface standards.

- Node Proxy Layer: Only 50 lines of core code, responsible for request signing and forwarding.

The only difference from traditional OpenAI integration is the addition of a server-side signing step, which ensures key security, while all other operations remain consistent with standard OpenAI chat completion calls.

Quick Start Guide

Environment Requirements: Node.js 20+

Clone the Code Repository

git clone git@github.com:product-science/carrera.git

cd carrera

Create a Gonka Account and Set Environment Variables

# Create an account using the inferenced CLI

# Check the quick start documentation for CLI download methods:

# https://gonka.ai/developer/quickstart/#2-create-an-account

# ACCOUNT_NAME can be any locally unique name, serving as a readable identifier for the account key pair.

ACCOUNT_NAME="carrera-quickstart"

NODE_URL=http://node2.gonka.ai:8000

inferenced create-client "$ACCOUNT_NAME" \

--node-address "$NODE_URL"

# Export the private key (for server-side use only)

export GONKA_PRIVATE_KEY=$(inferenced keys export "$ACCOUNT_NAME" --unarmored-hex --unsafe)

Start the Proxy Service

cd gonka-proxy

npm install && npm run build

NODE_URL=http://node2.gonka.ai:8000 ALLOWED_ORIGINS=http://localhost:5173 PORT=8080 npm start

Health Check

curl http://localhost:8080/healthz

# Expected return: {"ok":true,"ready":true}
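For scripted setups (a dev script or CI job), the same check can be automated. The following is a minimal sketch, assuming only the `/healthz` payload shape shown above; the names `isProxyReady` and `waitForProxy` are hypothetical, and the fetch function is injected so the sketch does not depend on a particular runtime.

```typescript
// Hypothetical helpers; only the {"ok":true,"ready":true} payload comes from the guide.
type JsonFetcher = (url: string) => Promise<unknown>;

// True only when the payload has ok === true and ready === true.
function isProxyReady(payload: unknown): boolean {
  if (typeof payload !== "object" || payload === null) return false;
  const p = payload as Record<string, unknown>;
  return p.ok === true && p.ready === true;
}

// Polls the health endpoint until it reports ready or the attempts run out.
async function waitForProxy(
  fetchJson: JsonFetcher,
  baseUrl: string,
  attempts = 10,
  delayMs = 500,
): Promise<boolean> {
  const url = `${baseUrl.replace(/\/+$/, "")}/healthz`;
  for (let i = 0; i < attempts; i++) {
    try {
      if (isProxyReady(await fetchJson(url))) return true;
    } catch {
      // The proxy may not be listening yet; retry after the delay.
    }
    if (i < attempts - 1) {
      await new Promise<void>((resolve) => setTimeout(resolve, delayMs));
    }
  }
  return false;
}
```

A caller could pass `(u) => fetch(u).then((r) => r.json())` as `fetchJson` and `http://localhost:8080` as `baseUrl`.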

Run the Frontend Application

cd web

npm install

VITE_DEFAULT_API_BASE_URL=http://localhost:8080/v1 npm run dev

In the application, open "Settings"; the Base URL is pre-filled. Choose a model (for example, Qwen/Qwen3-235B-A22B-Instruct-2507-FP8; the current list of available models is at https://node1.gonka.ai:8443/api/v1/models), then click "Test Connection."

Core Technology Implementation

The Gonka proxy service is the core of the integration with Gonka. It signs requests with the private key and then forwards OpenAI-style calls to the network. Once developers deploy such a proxy for their application, they can start running inference tasks on Gonka. In production, add an authentication mechanism so that only authorized users can request inference.
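The guide does not show an authentication layer. One simple option is a bearer-token check, since the frontend can already send an optional `Authorization: Bearer` header. The sketch below is framework-agnostic and is not part of the carrera repository; `parseBearerToken` and `isAuthorized` are hypothetical names.

```typescript
// Hypothetical helpers; not part of the carrera repository.

// Extracts the token from an "Authorization: Bearer <token>" header value.
function parseBearerToken(header: string | undefined): string | null {
  if (!header) return null;
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match?.[1] ?? null;
}

// Returns true when the header carries one of the allowed tokens.
function isAuthorized(
  header: string | undefined,
  allowedTokens: ReadonlySet<string>,
): boolean {
  const token = parseBearerToken(header);
  return token !== null && allowedTokens.has(token);
}
```

In an Express handler, the check could run before the request is forwarded, for example by returning a 401 response when `isAuthorized(req.headers.authorization, tokens)` is false. A set lookup is not a constant-time comparison, so a hardened deployment may prefer a constant-time check or a full identity provider.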

Environment Variables Used by the Proxy Service:

// gonka-proxy/src/env.ts

export const env = {

PORT: num("PORT", 8080),

GONKA_PRIVATE_KEY: str("GONKA_PRIVATE_KEY"),

NODE_URL: str("NODE_URL"),

ALLOWED_ORIGINS: (process.env.ALLOWED_ORIGINS ?? "*")

.split(",")

.map((s) => s.trim())

.filter(Boolean),

};
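The `str` and `num` helpers are used above but not shown. One plausible shape is sketched here as an assumption, not as the repository's actual code: `str` requires a value, and `num` falls back to a default. The `makeEnvReaders` factory is a hypothetical addition that takes the variable source as a parameter so the helpers can be exercised without touching the real environment.

```typescript
// Hypothetical reconstruction: the guide calls str() and num() but does not define them.
type EnvSource = Record<string, string | undefined>;

function makeEnvReaders(source: EnvSource) {
  // Required string: throws when the variable is missing or blank.
  const str = (name: string): string => {
    const value = source[name];
    if (value === undefined || value.trim() === "") {
      throw new Error(`Missing required environment variable ${name}`);
    }
    return value;
  };

  // Number with a fallback: throws when the variable is set but not numeric.
  const num = (name: string, fallback: number): number => {
    const value = source[name];
    if (value === undefined || value.trim() === "") return fallback;
    const parsed = Number(value);
    if (!Number.isFinite(parsed)) {
      throw new Error(`Environment variable ${name} must be a number, got "${value}"`);
    }
    return parsed;
  };

  return { str, num };
}
```

In the proxy, `const { str, num } = makeEnvReaders(process.env);` would give the call signatures used in `env.ts`. Failing at startup on a missing `GONKA_PRIVATE_KEY` is usually preferable to discovering it on the first request.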

Create a client with the Gonka OpenAI TypeScript SDK (gonka-openai). Go and Python versions are also available, and support for more languages is being added; watch the code repository for updates:

// gonka-proxy/src/gonka.ts

import { GonkaOpenAI, resolveEndpoints } from "gonka-openai";

import { env } from "./env";

export async function createGonkaClient() {

const endpoints = await resolveEndpoints({ sourceUrl: env.NODE_URL });

return new GonkaOpenAI({ gonkaPrivateKey: env.GONKA_PRIVATE_KEY, endpoints });

}

Expose OpenAI-Compatible Chat Completion Endpoint (/v1/chat/completions):

// gonka-proxy/src/server.ts

app.get("/healthz", (_req, res) => res.json({ ok: true, ready }));

app.post("/v1/chat/completions", async (req, res) => {

if (!ready || !client) return res.status(503).json({ error: { message: "Proxy not ready" } });

const body = req.body as ChatCompletionRequest;

if (!body || !body.model || !Array.isArray(body.messages)) {

return res.status(400).json({ error: { message: "Must provide 'model' and 'messages' parameters" } });

}

try {

const streamRequested = Boolean(body.stream);

const { stream: _ignored, ...rest } = body;

if (!streamRequested) {

const response = await client.chat.completions.create({ ...rest, stream: false });

return res.status(200).json(response);

}

// …
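The excerpt stops before the streaming branch. As a rough sketch of what such a branch typically involves, OpenAI-style streams are sent as server-sent events: each chunk is written as a `data:` line followed by a blank line, and the stream ends with a `[DONE]` sentinel. The helper names below are hypothetical, and the real `server.ts` may be structured differently.

```typescript
// Hypothetical helpers; the actual streaming branch in server.ts is not shown in the guide.

// Response headers commonly set for a server-sent event stream.
const SSE_HEADERS: Record<string, string> = {
  "Content-Type": "text/event-stream",
  "Cache-Control": "no-cache",
  Connection: "keep-alive",
};

// Frames one streamed chunk as a server-sent event.
function toSseFrame(chunk: unknown): string {
  return `data: ${JSON.stringify(chunk)}\n\n`;
}

// OpenAI-style streams end with a literal [DONE] sentinel.
const SSE_DONE = "data: [DONE]\n\n";

// Writes every chunk from an async iterable, then the terminator.
async function pipeSse(
  chunks: AsyncIterable<unknown>,
  write: (frame: string) => void,
): Promise<void> {
  for await (const chunk of chunks) {
    write(toSseFrame(chunk));
  }
  write(SSE_DONE);
}
```

With Express, `write` could be `(frame) => res.write(frame)`, called after setting `SSE_HEADERS` and followed by `res.end()`.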

The server.ts also includes support for streaming (SSE), allowing developers to enable it in clients that support streaming by setting stream: true. Gonka also provides a Dockerfile to ensure reproducible builds and convenient deployment:

# gonka-proxy/Dockerfile

# ---- Build Stage ----

FROM node:20-alpine AS build

WORKDIR /app

COPY package*.json tsconfig.json ./

COPY src ./src

RUN npm ci && npm run build

# ---- Run Stage ----

FROM node:20-alpine

WORKDIR /app

ENV NODE_ENV=production

COPY --from=build /app/package*.json ./

RUN npm ci --omit=dev

COPY --from=build /app/dist ./dist

EXPOSE 8080

CMD ["node", "dist/server.js"]

Technical Analysis: Frontend (Vendor-Independent Design)

The React application always maintains compatibility with OpenAI, without needing to understand the specific implementation details of Gonka. It simply calls OpenAI-like endpoints and renders the aforementioned exercise loop interface.

All backend interactions are completed in web/src/llmClient.ts, where a single call is made to the chat completion endpoint:

// web/src/llmClient.ts

const url = `${s.baseUrl.replace(/\/+$/, "")}/chat/completions`;

const headers: Record<string, string> = { "Content-Type": "application/json" };

if (s.apiKey) headers.Authorization = `Bearer ${s.apiKey}`; // Optional parameter

const res = await fetch(url, {

method: "POST",

headers,

body: JSON.stringify({ model: s.model, messages, temperature }),

signal,

});

if (!res.ok) {

const body = await res.text().catch(() => "");

throw new Error(`LLM error ${res.status}: ${body}`);

}

const data = await res.json();

const text = data?.choices?.[0]?.message?.content ?? "";

return { text, raw: data };
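The `signal` passed to `fetch` above lets the caller cancel a slow request. How the application creates that signal is not shown; the sketch below is one possibility using the standard `AbortController`, and `createTimeoutSignal` is a hypothetical name.

```typescript
// Hypothetical helper: one way the `signal` passed to fetch might be produced.
function createTimeoutSignal(timeoutMs: number): {
  signal: AbortSignal;
  cancel: () => void;
} {
  const controller = new AbortController();
  // Abort the request if it has not settled within timeoutMs.
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  return {
    signal: controller.signal,
    // Call once the request settles so the timer does not fire later.
    cancel: () => clearTimeout(timer),
  };
}
```

A caller would create the signal before the request and invoke `cancel()` in a `finally` block after the response has been read.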

To choose the API provider, set the base URL in the "Settings" popup at the top of the page. The same popup sets the model, which defaults to Qwen/Qwen3-235B-A22B-Instruct-2507-FP8. For offline local testing, set the base URL to mock:. For real calls, set it to your proxy address (pre-filled in the quick start):

// web/src/settings.ts

export function getDefaultSettings(): Settings {

const prodBase = (import.meta as any).env?.VITE_DEFAULT_API_BASE_URL || "";

const baseUrlDefault = prodBase || "mock:";

return { baseUrl: baseUrlDefault, apiKey: "", model: "Qwen/Qwen3-235B-A22B-Instruct-2507-FP8" };

}
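How the client treats a `mock:` base URL is not shown in the guide. A minimal sketch, under the assumption that the client short-circuits before calling `fetch` and returns an OpenAI-shaped response, could look like this. The function names and the canned exercise are invented for illustration.

```typescript
// Hypothetical sketch; the repository's actual mock handling is not shown in the guide.
interface LlmResult {
  text: string;
  raw: unknown;
}

// True when the configured base URL selects the offline mock backend.
function isMockBaseUrl(baseUrl: string): boolean {
  return baseUrl.trim().toLowerCase().startsWith("mock:");
}

// Builds an OpenAI-shaped response locally so the UI can run without a network.
function mockChatCompletion(): LlmResult {
  const canned = JSON.stringify({
    type: "cloze",
    text: "Mi hermana ____ muy cansada porque trabajó toda la noche.",
    answer: "está",
    instructions: "Fill in the blank with the correct form of ser or estar.",
    difficulty: "beginner",
  });
  const raw = {
    choices: [{ index: 0, message: { role: "assistant", content: canned } }],
  };
  return { text: canned, raw };
}
```

Because the mock mirrors the `choices[0].message.content` shape, the rest of the client can parse it with the same code path it uses for real responses.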

Application Prompt and Grading Mechanism

We use two prompt templates:

Generation Prompt: "You are a Spanish teacher… only output strict JSON format… generate a fill-in-the-blank sentence containing exactly one blank (____), including the answer, explanation, and difficulty level."

Grading Prompt: "You are a Spanish teacher grading assignments… output strict JSON format, including pass/fail status and explanation."

Example Code Snippet for Generation Process:

// web/src/App.tsx

const sys: ChatMsg = {

role: "system",

content: `You are a Spanish teacher designing interactive exercises.

Only output strict JSON format (no explanatory text, no code markers). The object must contain the following keys:

{

"type": "cloze",

"text": "<A Spanish sentence containing exactly one blank marked with ____>",

"answer": "<The only correct answer for the blank>",

"instructions": "<Clear instructions>",

"difficulty": "beginner|intermediate|advanced"

}

Content Specifications:

- Use natural life scenarios

- Only retain one blank (precisely use the ____ marker in the sentence)

- Control sentence length to 8-20 words

- Use Spanish only for the sentence part, English for the explanation part

- Diversify exercise focus: ser/estar, preterite vs. imperfect, subjunctive mood, por/para, agreement, common prepositions, core vocabulary`

};

We add a handful of few-shot examples to help the model learn the output format, then parse the JSON and render the exercise. The grading phase likewise returns strict JSON containing a pass/fail result and a brief explanation.
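Strict-JSON prompts reduce malformed output but cannot rule it out, so it can help to validate the parsed object before rendering. The sketch below checks the keys listed in the generation prompt and enforces the single-blank rule. The names `ClozeExercise`, `countBlanks`, and `isClozeExercise` are hypothetical, and the application may validate differently or not at all.

```typescript
// Hypothetical validation step; field names follow the generation prompt.
interface ClozeExercise {
  type: "cloze";
  text: string;
  answer: string;
  instructions: string;
  difficulty: "beginner" | "intermediate" | "advanced";
}

const BLANK = "____";
const DIFFICULTIES: string[] = ["beginner", "intermediate", "advanced"];

// Counts non-overlapping occurrences of the blank marker.
function countBlanks(text: string): number {
  return text.split(BLANK).length - 1;
}

// Type guard: accepts only objects that satisfy the prompt's contract.
function isClozeExercise(value: unknown): value is ClozeExercise {
  if (typeof value !== "object" || value === null) return false;
  const { type, text, answer, instructions, difficulty } = value as Record<string, unknown>;
  return (
    type === "cloze" &&
    typeof text === "string" &&
    countBlanks(text) === 1 &&
    typeof answer === "string" &&
    answer.trim() !== "" &&
    typeof instructions === "string" &&
    typeof difficulty === "string" &&
    DIFFICULTIES.indexOf(difficulty) !== -1
  );
}
```

When the guard fails, the application could simply request a new exercise instead of rendering a broken one.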

The parser is fault-tolerant. It strips Markdown code fences and, if direct parsing fails, falls back to the text between the first { and the last }:

// web/src/App.tsx

// Fault-tolerant JSON extractor, adapting to model outputs that may contain explanatory text or code markers

function extractJsonObject<TExpected>(raw: string): TExpected {

const trimmed = String(raw ?? "").trim();

const fence = trimmed.match(/```(?:json)?\s*([\s\S]*?)\s*```/i);

const candidate = fence ? fence[1] : trimmed;

const tryParse = (s: string) => {

try {

return JSON.parse(s) as TExpected;

} catch {

return undefined;

}

};

const direct = tryParse(candidate);

if (direct) return direct;

const start = candidate.indexOf("{");

const end = candidate.lastIndexOf("}");

if (start !== -1 && end !== -1 && end > start) {

const block = candidate.slice(start, end + 1);

const parsed = tryParse(block);

if (parsed) return parsed;

}

throw new Error("Unable to parse JSON from model response");

}

Follow-up Suggestions for Developers:

• Add custom exercise types and grading rules

• Enable streaming UI (proxy supports SSE)

• Add authentication and rate limiting to the Gonka proxy

• Deploy the proxy to the developer's infrastructure and set VITE_DEFAULT_API_BASE_URL during web application builds
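As a starting point for the rate-limiting suggestion, here is a minimal in-memory token bucket keyed by client (for example, an IP address or API token). It is a sketch rather than part of the repository: the class name is hypothetical, the clock is passed in explicitly to keep the logic testable, and a multi-instance deployment would need shared state instead of a local map.

```typescript
// Hypothetical sketch; not part of the carrera repository.
interface Bucket {
  tokens: number;
  updatedAt: number; // timestamp in milliseconds
}

class TokenBucketLimiter {
  private readonly buckets = new Map<string, Bucket>();
  private readonly capacity: number;
  private readonly refillPerSecond: number;

  constructor(capacity: number, refillPerSecond: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
  }

  // Returns true and spends one token when the caller is within its budget.
  allow(key: string, nowMs: number): boolean {
    const bucket = this.buckets.get(key) ?? { tokens: this.capacity, updatedAt: nowMs };
    // Refill in proportion to the time elapsed since the last request.
    const elapsedSec = Math.max(0, nowMs - bucket.updatedAt) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSec * this.refillPerSecond);
    bucket.updatedAt = nowMs;
    const allowed = bucket.tokens >= 1;
    if (allowed) bucket.tokens -= 1;
    this.buckets.set(key, bucket);
    return allowed;
  }
}
```

A proxy handler could call `limiter.allow(clientKey, Date.now())` and respond with HTTP 429 when it returns false. The map grows with the number of distinct keys, so a long-running service would also want to evict idle buckets.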

Summary and Outlook

Why Choose Gonka?

- Cost Advantage: Decentralized computing significantly reduces inference costs compared to traditional cloud services.

- Privacy Protection: Requests are signed through the proxy, and keys are never exposed.

- Compatibility: Fully compatible with the OpenAI ecosystem, with very low migration costs.

- Reliability: The distributed network ensures high availability of services.

Gonka provides AI developers with a smooth transition to the era of decentralized computing. Through the integration methods introduced in this article, developers can enjoy the cost advantages and technical dividends brought by decentralized networks while maintaining their existing development habits. As decentralized AI infrastructure continues to improve, this development model is expected to become the standard practice for the next generation of AI applications. Meanwhile, Gonka will also launch more practical features and explore more AI application scenarios with developers, including but not limited to intelligent education applications, content generation platforms, personalized recommendation systems, automated customer service solutions, etc.

Original Link: https://what-is-gonka.hashnode.dev/build-a-productionready-ai-app-on-gonka-endtoend-guide

About Gonka.ai

Gonka is a decentralized network aimed at providing efficient AI computing power, designed to maximize the utilization of global GPU computing power to complete meaningful AI workloads. By eliminating centralized gatekeepers, Gonka provides developers and researchers with permissionless access to computing resources while rewarding all participants with its native token GNK.

Gonka is incubated by Product Science Inc., a US-based AI developer. The company was founded by Web2 industry veterans, including the Liberman siblings, former core product directors at Snap Inc. It raised $18 million in 2023 from investors including Coatue Management (an OpenAI investor), Slow Ventures (a Solana investor), K5, Insight, and partners at Benchmark. Early contributors to the project include well-known companies in the Web2 and Web3 space such as 6blocks, Hard Yaka, Gcore, and Bitfury.

